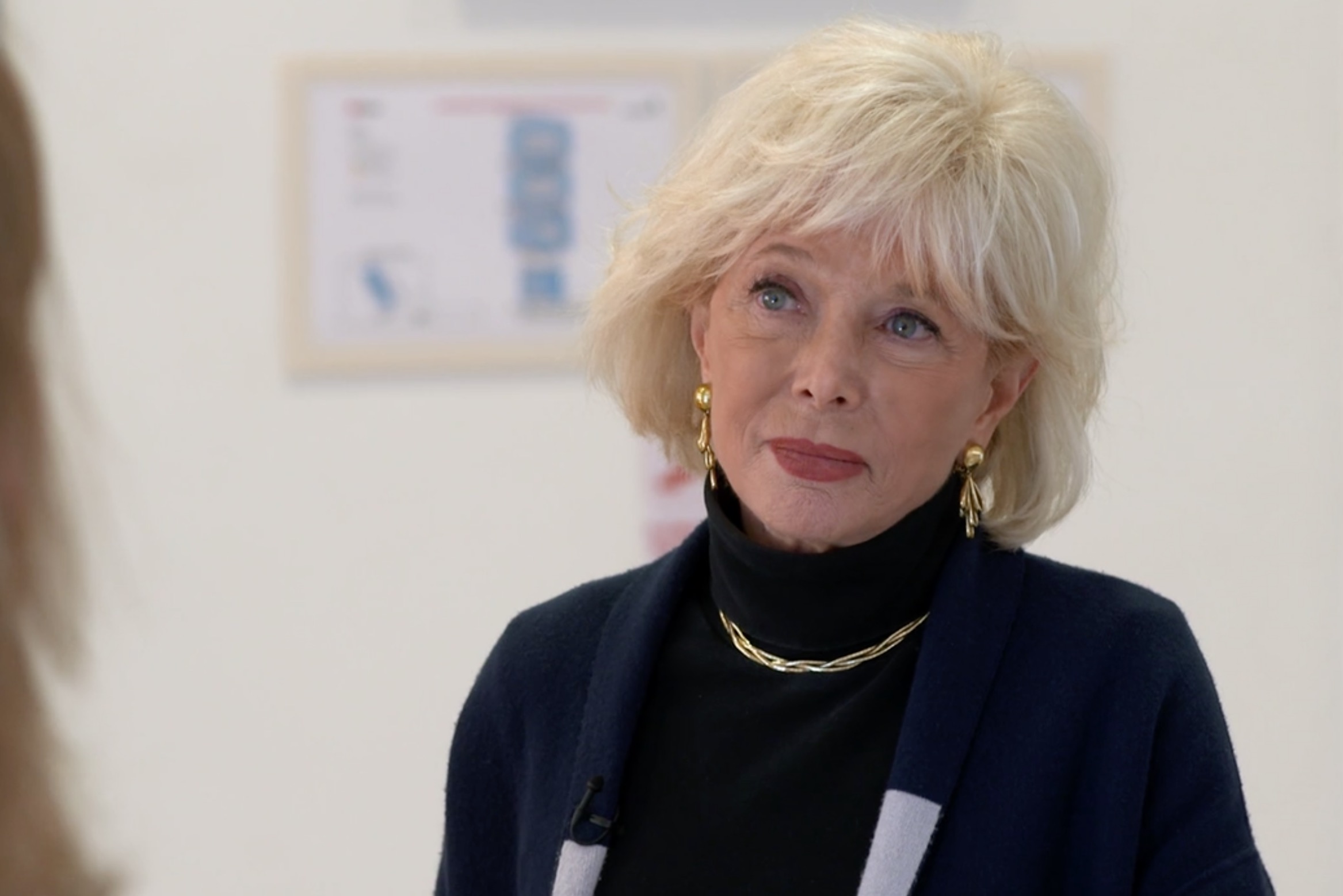

YouTube CEO Susan Wojcicki appeared on CBS’s 60 Minutes on Sunday evening, and instead of delving into all of the problems that YouTube creators are facing on the platform as a result of overzealous moderation and increasing restrictions, host Lesley Stahl wanted to talk about all the ways that YouTube could be moderated even further.

Opening the show, Stahl said that YouTube has “come under increasing scrutiny, accused of propagating white supremacy, peddling conspiracy theories, and profiting from it all,” setting the tone for what was 60 minutes of viewing that would leave YouTube creators frustrated that the mainstream narrative once again is that YouTube requires even more censorship.

Top YouTubers such as Jake Paul say they’re leaving YouTube, with Paul saying it’s become a “controlling and non rewarding platform to be a part of.” PewDiePie has urged YouTubers not to put all of their eggs in one basket with YouTube income and to diversify instead.

With growing discontent about YouTube censorship, Wojcicki’s interviews have been gaining more attention of late, as creators, frustrated at being demonetized at every turn and having their livelihood cut off with little help from YouTube, want to hear what Wojcicki is going to do to fix it.

But this wasn’t that type of interview. It was the usual type of interview where a mainstream media outlet calls on its new media competitor YouTube to moderate and restrict content further.

Stahl didn’t press Wojcicki about the upcoming COPPA changes that will cut creator revenue by up to 90% if their content is “made for kids” or how Wojcicki planned on helping creators with that.

Viewers who are even remotely familiar with YouTube and YouTube culture could tell what type of interview they were about to watch, and that it would be a major culture clash, when Stahl described unboxing videos (where people reveal their first impressions of new products such as smartphones or laptops) as a phenomenon where “millions watch strangers open boxes.”

Stahl described the popularity of ASMR videos as millions of people watching strangers “whisper”.

During the interview, Wojcicki announced that YouTube has removed “9 million videos” in just the last quarter.

The topic of “hate speech” is always one that YouTubers are looking out for. Many have had their videos demonetized or deleted for engaging in what YouTube says is hate speech.

Even those creating videos about historical modeling have had their videos removed as the algorithm has suggested that their content is somehow hateful.

Independent journalists have also suffered from YouTube’s algorithm determining that their content is “hate speech”, leaving them unable to report the news.

The more YouTube tries to crack down on “hate speech”, the more the platform appears to get it wrong, and it doesn’t seem too quick to help the creators caught in the crossfire.

But during the 60 Minutes interview, Stahl was intent on encouraging the YouTube CEO to do more to tackle hate speech.

“You recently tightened your policy on hate speech,” Stahl said. “Why…why’d you wait so long?” she asked when Wojcicki agreed.

“Well, we have had hate policies since the very beginning of YouTube. And we…” Wojcicki attempted to answer.

“But pretty ineffective,” Stahl cut in.

Wojcicki then tried to talk about the moderators and how YouTube is moderated by a combination of machine learning and human moderators.

Stahl suggested that, for the moderators, it must be “very stressful to be looking at these questionable videos,” and that she’s heard they’re “beginning to buy the conspiracy theories.”

Wojcicki showed Stahl two videos to show how hard it is to moderate videos on the platform.

The first was a clip of a man being physically abused and kicked in the head in Syria, which Wojcicki allowed to remain on the platform.

Wojcicki said that this video is allowed because it’s “uploaded by a group that is trying to expose the violence.”

Wojcicki then showed a video of Hitler and marching Nazi soldiers – one that didn’t contain any violence – and that looked like “totally historical footage that you would see on the History Channel,” Stahl said.

But Wojcicki explained that they removed that video because in the corner of the video it contained the number “1418.”

“1418 is code used by white supremacists to identify one another,” Stahl suggested.

Wojcicki explained that they work with “experts” so they know that’s what 1418 means, and that they also know the “hand signals, the messaging, the flags, the songs” that can be used to identify white supremacists.

But during the interview, Wojcicki didn’t seem totally unaware of the inconsistencies with her moderation policies. “You can go too far and that can become censorship,” she said.

Stahl reminded Wojcicki that she doesn’t have to support freedom of speech: “You’re not operating under some…freedom of speech mandate. You get to pick,” the host pressed.

“We do. But we think there’s a lot of benefit from being able to hear from groups and underrepresented groups that otherwise we never would have heard from,” Wojcicki said.

“And what about medical quackery on the site? Like turmeric can reverse cancer; bleach cures autism; vaccines cause autism,” Stahl said. “The law under 230 does not hold you responsible for user-generated content. But in that you recommend things, sometimes 1,000 times, sometimes 5,000 times, shouldn’t you be held responsible for that material because you recommend it?”

Wojcicki: Well, our systems wouldn’t work without recommending. And so if…

Stahl: I’m not saying don’t recommend. I’m just saying be responsible for when you recommend so many times.

Wojcicki: If we were held liable for every single piece of content that we recommended, we would have to review it.

Stahl explained that “YouTube started re-programming its algorithms in the US to recommend questionable videos much less and point users who search for that kind of material to authoritative sources, like news clips.”

Data shows that it’s YouTube’s authoritative sources algorithm push that severely cut the recommendations of independent creators who are the backbone of the YouTube platform and began to push recommendations of platforms like CNN and MSNBC – which is the type of content that YouTube viewers are often looking to avoid when they come to YouTube.

Lesley Stahl’s style and line of questioning suggest she doesn’t understand YouTube, but she wouldn’t mind policing it.

Watch the full interview on CBS.
