Australia’s eSafety Commissioner has issued new regulatory guidance tied to the Social Media Minimum Age (SMMA) law, offering what appears to be a soft-sell version of requirements that, in practice, are anything but.

While the public messaging attempts to downplay the law’s demands, the actual expectations placed on platforms point to a wide-reaching mandate for user screening and surveillance.

The press release insists, “eSafety is not asking platforms to verify the age of all users,” and cautions that “blanket age verification may be considered unreasonable.”

Despite that, the operational requirements suggest that age assurance will need to be applied extensively across platforms. Providers are told they must detect and remove underage users, stop them from creating new accounts, and avoid depending on self-declared age information.

Each of these expectations implicitly requires systems capable of identifying and monitoring user age at scale.

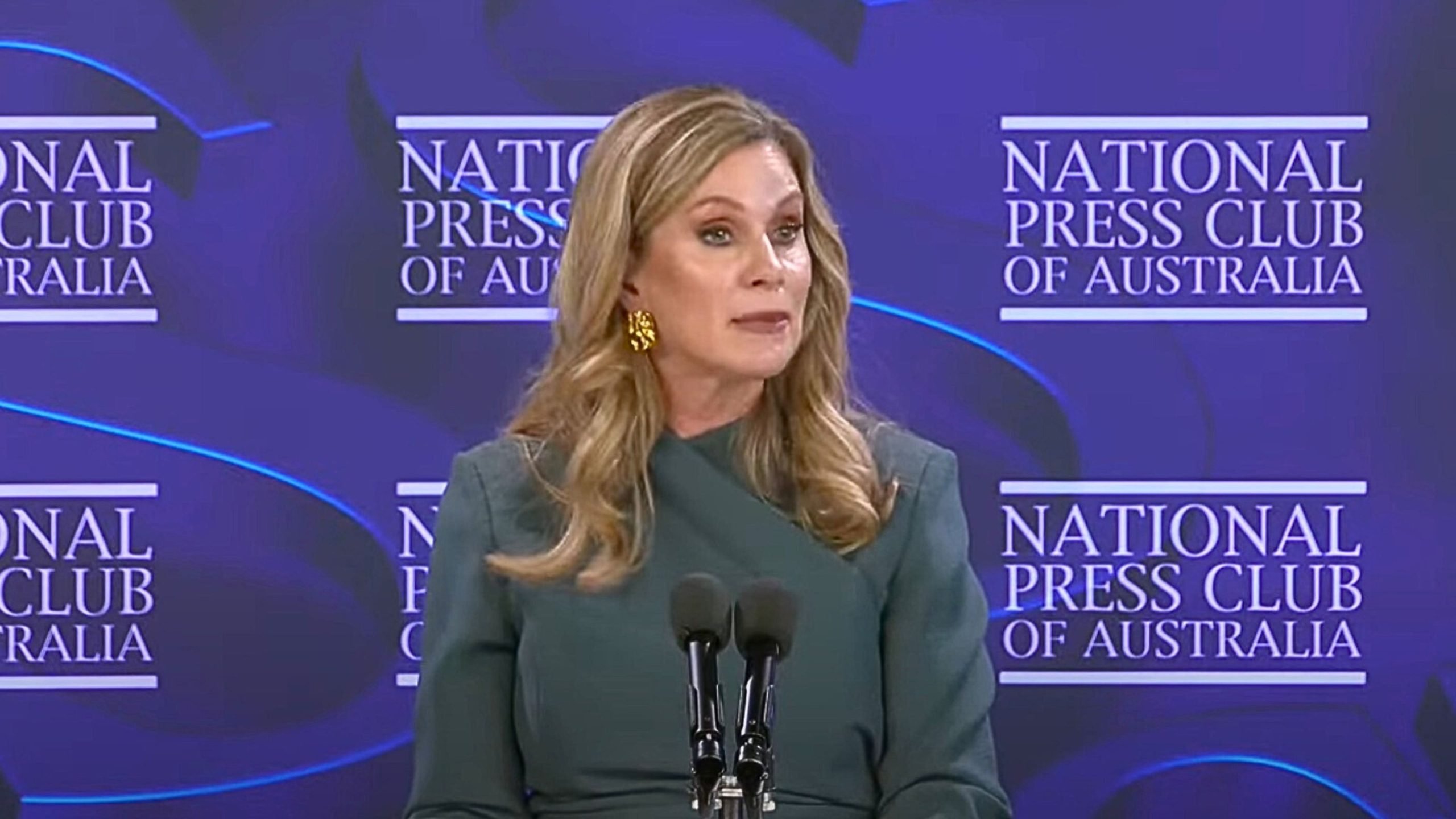

Commissioner Julie Inman Grant characterizes the guidance as principles-based, emphasizing flexibility and industry engagement. “We have encouraged platforms to take a layered approach across the user journey, implementing a combination of systems, technologies, people, processes, policies, and communications to support compliance,” she said.

The guidance is framed as a collaboration, not a command. But in reality, the “reasonable steps” that platforms are expected to take could demand broad, persistent age detection infrastructure.

Rather than mandating specific technologies, the document outlines a set of results that platforms are expected to achieve.

The contradiction begins with the expectations placed on platforms ahead of the December 10, 2025 deadline. “In the lead up to, and when the SMMA obligation takes effect on 10 December 2025, eSafety expects providers’ initial focus to be on the detection and deactivation/removal of existing accounts held by children under 16,” the guidance states.

Platforms are not only expected to find underage users but also to block them from coming back. “Providers are also expected to take reasonable steps to prevent those whose accounts have been deactivated or removed from immediately creating a new account.”

How are platforms supposed to accomplish that without verifying the age of everyone attempting to use their service?

The guidance shuts down the simplest approach: “eSafety does not consider the use of self-declaration, on its own without supporting validation mechanisms, to be reasonable for purposes of complying with the SMMA obligation.” In other words, simply asking users to state their age will not be enough.

Instead, platforms are told that some form of age assurance is the baseline expectation. “It is expected that at a minimum, the obligation will require platforms to implement some form of age assurance, as a means of identifying whether a prospective or existing account holder is an Australian child under the age of 16 years.”

Age assurance, which may include age estimation via facial analysis, behavioral profiling, or third-party systems, becomes the default requirement, even as public statements attempt to paint it as optional.

Although the guidance claims to be privacy-preserving and data-minimizing, it clearly expects providers to collect, analyze, and act upon a variety of user signals.

Platforms must demonstrate that their age assurance systems are reliable, accurate, and robust. They are also required to regularly monitor their systems, improve them over time, and report their effectiveness to the regulator.

While providers may not be compelled to verify every user individually, they are being pushed toward building systems that function in a very similar way.

The guidance also instructs providers to maintain review processes for users who believe they’ve been misidentified, and to offer options that do not rely on identity documents.

However, these mechanisms do little to change the reality that broad age monitoring will likely be built into every major platform’s infrastructure.

Although eSafety says its goal is to protect children, the practical result is a system that normalizes intrusive age estimation and surveillance techniques.

By requiring platforms to take “reasonable steps” while rejecting self-declaration and promoting behavioral monitoring, the law quietly shifts responsibility for establishing a user’s age from the individual to the platform. In doing so, it builds an environment where anonymous access becomes harder to sustain.