Some YouTube creators are crying foul after learning the platform has been quietly using AI to modify their Shorts without notification or approval.

For months, users have noticed odd visual quirks in some Shorts, such as skin that looks overly airbrushed, clothes appearing sharper than expected, or facial features slightly distorted.

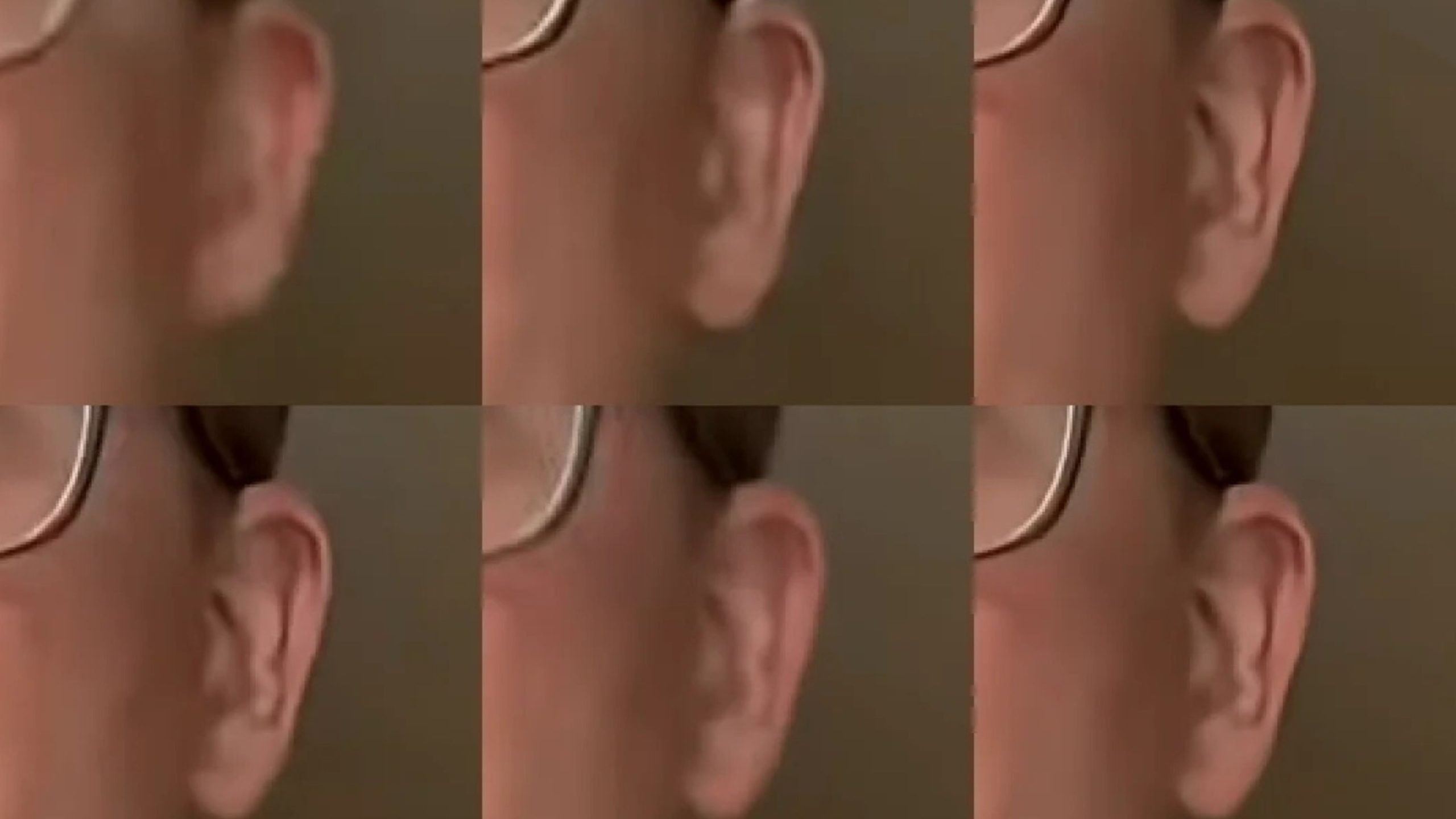

The alterations are subtle enough to go unnoticed in isolation, but side-by-side comparisons have revealed inconsistencies that many say make their videos feel unnatural or artificial.

Musician and creator Rhett Shull spotlighted the issue in a video that has drawn over 700,000 views. Comparing his uploads across platforms, he pointed out that YouTube had seemingly softened and retouched his Shorts without permission.

“I did not consent to this,” said Shull. “Replacing or enhancing my work with some AI upscaling system not only erodes trust with the audience, but it also erodes my trust in YouTube.”

That lack of transparency has provoked a wider conversation. In June, a Reddit thread titled “YouTube Shorts are almost certainly being AI upscaled” included frame-by-frame comparisons suggesting AI manipulation. The post drew widespread frustration, adding to the growing chorus of disapproval.

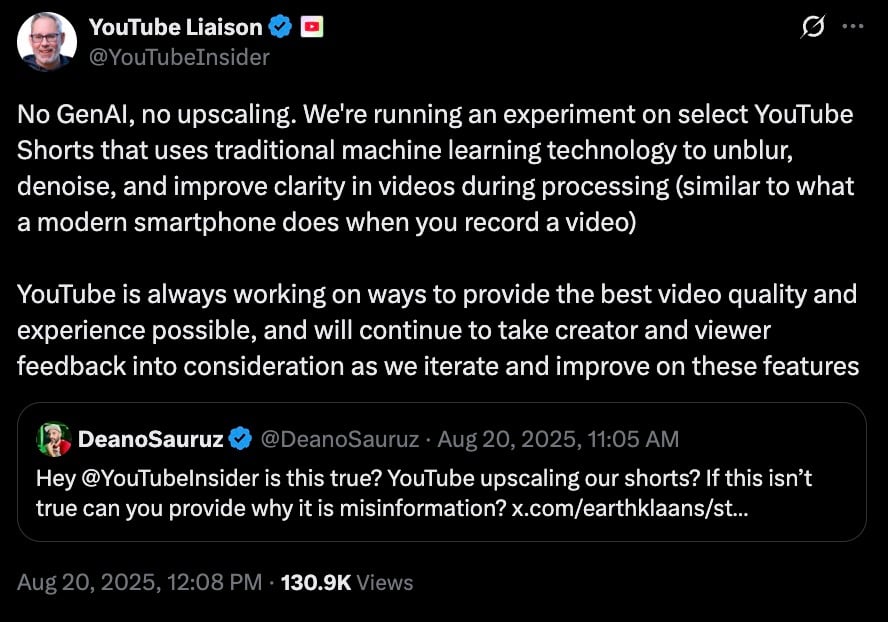

After staying silent for months, YouTube has now acknowledged that it has been conducting tests. Rene Ritchie, who serves as YouTube’s liaison to creators, addressed the concerns in a post on X.

According to Ritchie, the platform has been experimenting with traditional machine learning technology to unblur, denoise, and improve clarity on select Shorts, similar to automatic adjustments made by smartphone cameras.

Ritchie stressed that this is not generative AI, which produces new material from scratch.

But creators argue that the label is irrelevant if the platform is altering their content without their knowledge.

Content creators are now demanding more control, calling on YouTube to offer an opt-out setting for any AI-based processing. They argue that unexpected alterations compromise both creative intent and viewer trust.

The backlash arrives not long after another controversial AI deployment by YouTube: a new system in the US that surveils users to estimate their age. If the AI suspects a viewer is underage, it can block access to certain videos until the user has verified their age with some form of ID.