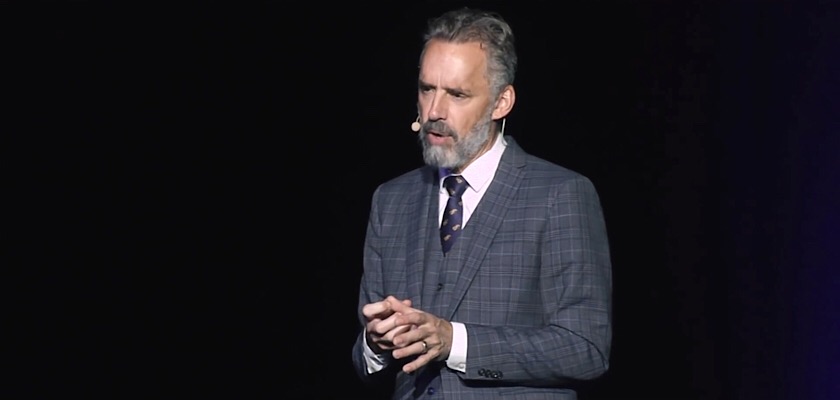

Over the last week, Not Jordan Peterson, a new deepfake voice simulator that accurately mimicked the voice of popular clinical psychologist Jordan Peterson, started to gain traction online.

Many websites and independent creators covered the simulator, which let users type phrases of 280 characters or fewer for the eerily accurate Jordan Peterson deepfake to read out loud. The coverage largely focused on how impressively realistic the deepfake is, but some commentators also warned that this type of technology will “destroy our information ecosphere.”

Now Peterson himself has weighed in, expressing his concerns about deepfake technology and appearing to threaten “Deep Fake artists” with legal action. In response, Not Jordan Peterson has disabled its deepfake voice simulator for the time being.

In a blog post about this and other deepfakes, Peterson described the technology as “something that is likely more important and more ominous than we can even imagine” and said: “It’s hard to imagine a technology with more power to disrupt.”

He goes on to discuss some of the malicious ways this technology could be used, including fraud and fake news based on audio or video segments that are indistinguishable from real recordings.

Peterson then suggests that a possible solution is to create an “exceptionally wide-ranging law” which seriously considers whether stealing someone’s voice is a “genuinely criminal act, regardless (perhaps) of intent.”

He finishes by saying:

“Wake up. The sanctity of your voice, and your image, is at serious risk. It’s hard to imagine a more serious challenge to the sense of shared, reliable reality that keeps us linked together in relative peace. The Deep Fake artists need to be stopped, using whatever legal means are necessary, as soon as possible.”

Shortly after Peterson published his post, Not Jordan Peterson took down its deepfake voice simulator and left the following update:

“In light of Dr. Peterson’s response to the technology demonstrated by this site, which you can read here, and out of respect for Dr. Peterson, the functionality of the site will be disabled for the time being.”

Not Jordan Peterson suggests that the site may become operational again and says that a longer blog post will be published this weekend explaining the owner’s experiences with the site, the owner’s opinions on the future of deepfake technology, and the ways society can potentially adjust to the existence of deepfake technology.

Peterson’s concerns about the Not Jordan Peterson deepfake reflect wider concerns that have been raised as this technology develops at a rapid rate. Similar deepfakes such as Faux Rogan, a Joe Rogan audio deepfake, have shown how accurate voice replication is becoming, and the YouTube channel Ctrl Shift Face regularly publishes highly realistic deepfake videos. New video editing technology is also being developed that allows users to create deepfake talking head videos that are almost indistinguishable from the original.

Lawmakers have responded to these concerns by pressuring tech companies to develop coherent strategies for handling deepfakes on their platforms in the run-up to the 2020 presidential election. Congress is also considering the DEEPFAKES Accountability Act, which would require labeling or disclosure on “advanced technological false personation records.”

However, digital rights groups such as the Electronic Frontier Foundation (EFF) have warned that these solutions could cause unwanted censorship of comedy or satire and have adverse effects on free expression.

Any rules and regulations around deepfakes could also cause serious problems if the meaning of “deepfake” isn’t clearly defined. The term generally refers to content that is created by artificial intelligence (AI). Yet when edited videos of Congresswoman Nancy Pelosi that made her appear drunk and rambling started to gain traction online in May, many people incorrectly referred to them as deepfakes. If regulations designed to tackle genuine AI-generated deepfakes start being applied to edited or slowed-down videos, they could create more problems than the deepfakes themselves and lead to more of the unwanted censorship that the EFF warns of.