The World Economic Forum (WEF) annual gathering of elected and unelected elites from around the world is once more under way in Switzerland’s Davos, and this year AI is unsurprisingly garnering a fair share of attention.

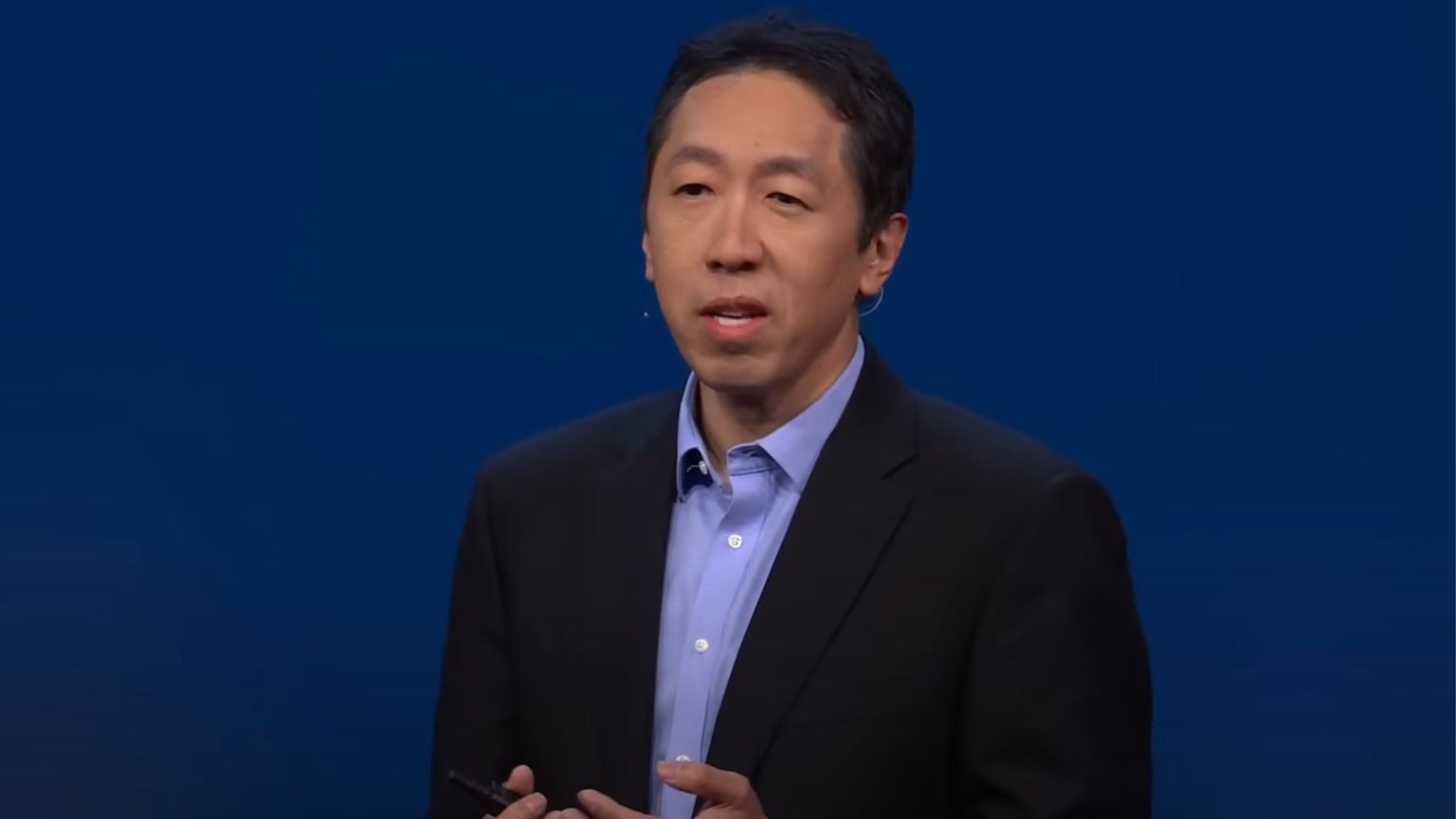

More precisely, the talk is of how to harness its power, including through new regulation. But as one participant, DeepLearning.AI founder Andrew Ng, put it, that should be regulation not of the tech itself, but rather of its “applications.”

A natural stance, some may say, for a person selling some version of AI.

Ng told the WEF that warnings about problems arising from AI, which he called hypothetical, and sensationalist risk assessments are being amplified, while what he sees as the real potential issues get overlooked.

So let’s not vilify AI, but let’s still seek to control, i.e., regulate it, via “applications.” And here Ng seems to go straight for the sensationalist, only under a different pretext, mentioning rather broadly defined “systems that can emotionally manipulate people for profit” as something it would be justified to regulate.

The DeepLearning.AI founder explains his opposition to regulating AI tech itself by touching on all the right concerns (in terms of hypothetical new rules), such as that they may stand in the way of open source, innovation, and competitiveness.

So – let the technology run free, supposedly for these noble reasons – but then tighten the screws when it comes to how it can be used.

And here, Ng wants “AI applications” (uses) to be designated according to “degree of risk.” The way to do it is to make this designation “clear,” and at first Ng was doing rather well, mentioning “actually risky” cases, such as medical devices.

But then, his “clear” identification becomes muddier, and we finally meet our old friend “disinformation” again. According to him, “chat systems potentially spewing disinformation” is something that can not only be straightforwardly identified, but is also “actually risky,” and needs to be censored, i.e., regulated in a “tiered” manner.

Ng is also not a fan of proposals to do this by taking into account the size of the model used by an AI project, and believes that both small and large ones can be equally dangerous when it comes to the two things he seems “stuck” on: “bad medical advice” and “generating disinformation.”