A recent exposé by James O’Keefe of the O’Keefe Media Group has brought new accusations against Meta after a senior engineer was covertly filmed saying the company suppresses certain political content on Facebook. In the footage, he acknowledged that Facebook potentially demotes posts criticizing Kamala Harris and engages in shadowbanning.

During the undercover recording, the engineer alleged that criticisms of Kamala Harris based on her personal life are likely downgraded automatically. He explained, “Say your uncle in Ohio said something about Kamala Harris is unfit to be a president because she doesn’t have a child, that kind of shit is automatically demoted.” He added that such demotion happens without informing the user, reducing the posts’ visibility and engagement, a tactic he equated with shadowbanning.


Further details emerged as the engineer discussed Meta’s internal strategies to control content. He spoke of the “Integrity Team” at Meta, which uses what he described as “civic classifiers” to manage posts. These classifiers are specifically designed to identify and demote political content. “That means if anything is related to political content, it’s automatically not shown,” he clarified.

Moreover, the engineer touched on measures Meta has implemented to prevent misuse of its platform, including a “SWAT team” that has been active since April to consider various scenarios in which the platform could be exploited. He suggested these efforts are part of a broader strategy to maintain control over the dissemination of civic content.

The implications of such disclosures are significant, especially as the engineer, Gyawali, hinted that Meta could influence the 2024 election and suggested that this had the backing of Meta’s CEO, Mark Zuckerberg.

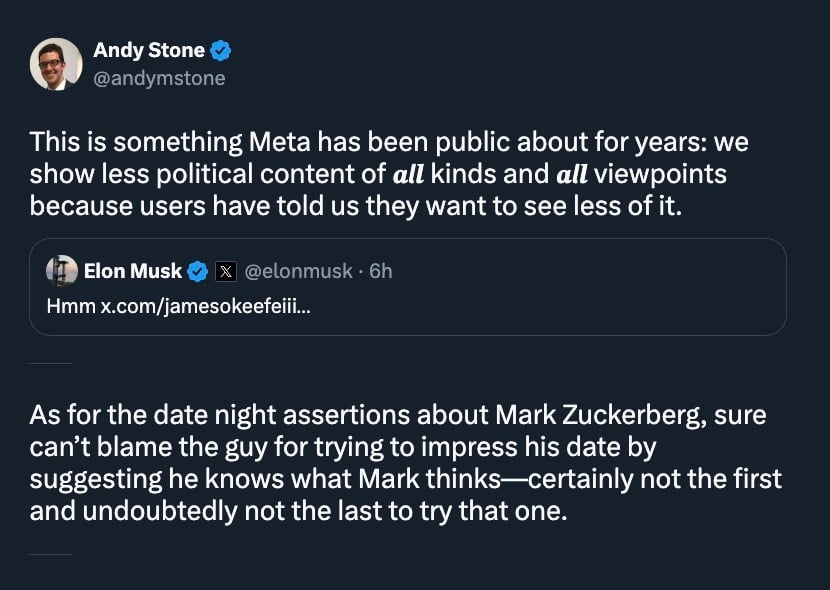

In response to the stir caused by these revelations, Meta executive Andy Stone addressed the issue on the social media platform X, minimizing the situation. He described it as an “undercover sting” that simply uncovered what has been Meta’s long-standing policy, acknowledged publicly, of reducing the amount of political content shown to users based on their feedback.

“This is something Meta has been public about for years: we show less political content of all kinds and all viewpoints because users have told us they want to see less of it,” Stone wrote, in an attempt to dismiss the claims that Meta was biased.