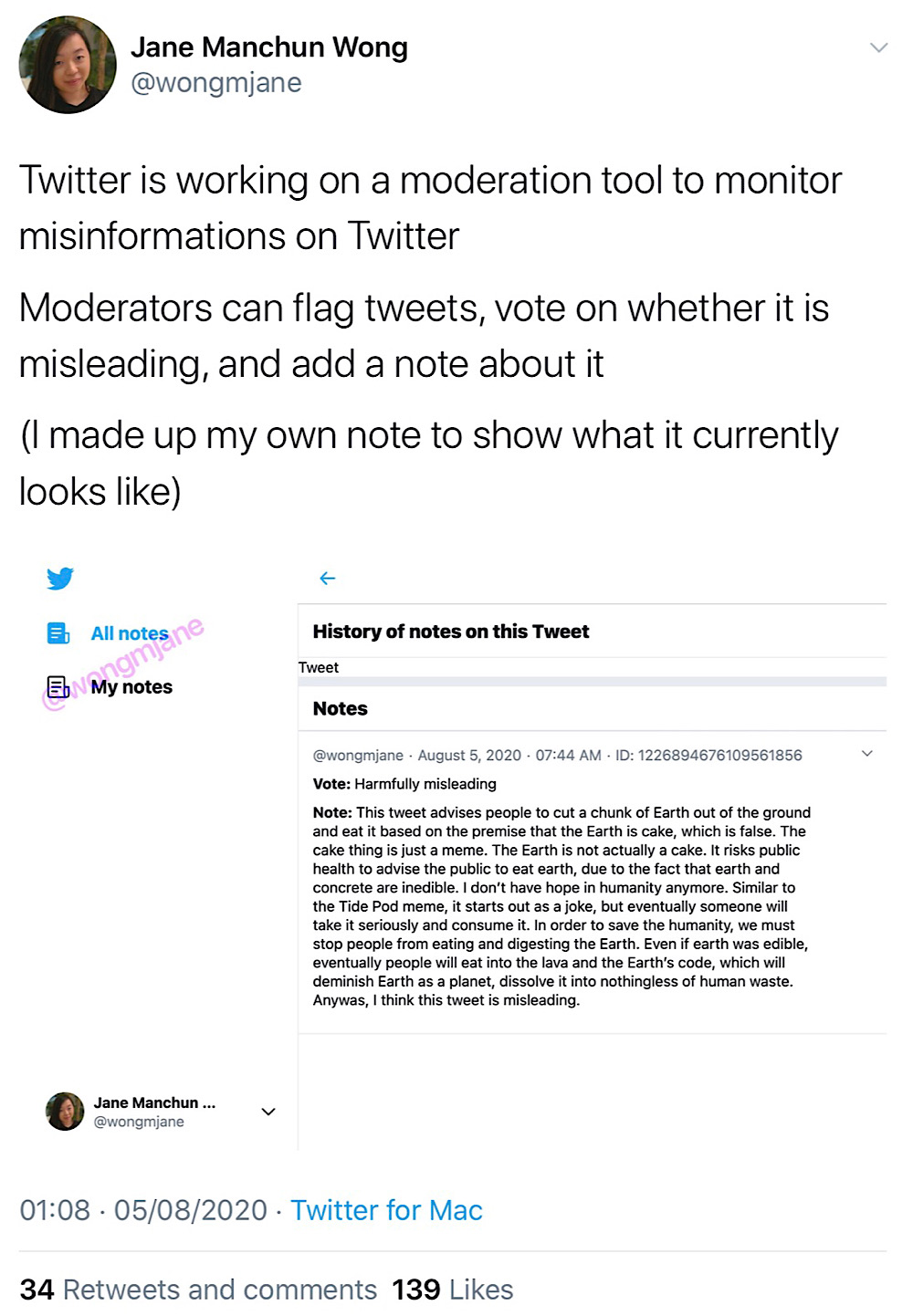

App researcher Jane Manchun Wong has discovered an unreleased Twitter tool that allows moderators to flag tweets containing “misinformation,” vote on whether the tweets are “misleading,” and add notes about the tweets.

The “Note:” section of this screenshot is just filler text created by Wong, and was not part of the discovery.

It’s unclear whether or when this tool will be released, or how it would affect tweets once they’ve been flagged and voted on as “harmfully misleading.”

A previously leaked screenshot has shown what appears to be an internal Twitter tool that can blacklist users from search and trends, indicating that Twitter moderators do have the power to selectively suppress content.

Twitter’s terms of service were also updated at the start of the year to include shadowbanning – a controversial practice that involves limiting the distribution of user posts in a way that’s difficult for them to detect. This serves as another indicator that Twitter staff can selectively suppress content on the site.

In addition, documents leaked earlier this year revealed that Twitter was testing a social credit-style fact-checking system for politicians and public figures.

At the time, Twitter confirmed that this leaked demo was “one possible iteration” of its new misinformation policy, but it has yet to be released.

This social credit system had mechanisms similar to those of the tool discovered by Wong, including the ability to rate whether a tweet is “likely” or “unlikely” to be harmfully misleading. However, the social credit system was community-based, while Wong reports that the tool she discovered will be used by Twitter moderators.