Gaming platforms are increasingly rushing to please media spectators by tackling what they call “toxicity” in gaming, working to address it through various censorship proposals.

In November, Ubisoft and Riot Games partnered on such a project. But gaming developer Electronic Arts (EA) has been promoting the same strategy for several years through its “Positive Play” program, according to gaming news outlet IGN.

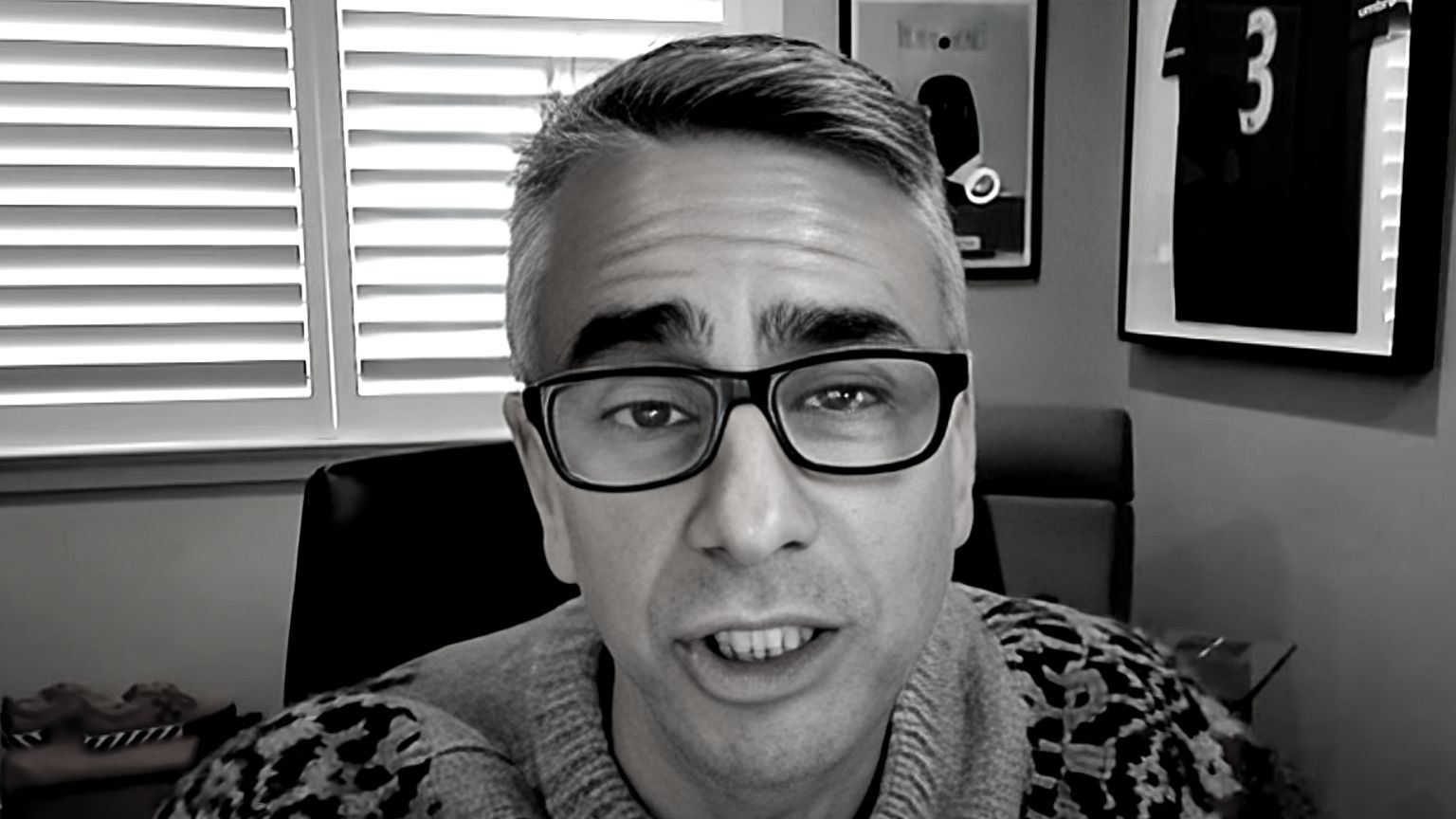

The program was spearheaded by EA’s chief experience officer Chris Bruzzo, who previously served as the company’s chief marketing officer for six years. He said that he and current CMO David Tinson started the conversations that led to the formation of Positive Play.

Bruzzo became convinced that EA could do something about “toxicity” following discussions at EA’s Building Healthy Communities Summit in 2019. He said the summit and the feedback that followed made it clear that women in particular were having a “pervasively bad experience” in online gaming.

“If you want to find an environment that’s more toxic than a gaming community, go to a VR social community,” Bruzzo told IGN. “Because not only is there the same amount of toxicity, but my avatar can come right up and get in your avatar’s face, and that creates a whole other level [of] not feeling safe or included.”

They got to work, and in 2020 they published the Positive Play Charter, a set of dos and don’ts for online gaming. The charter states that users who do not follow the rules may have their accounts suspended.

The charter, according to Bruzzo, formed a framework that EA could use for both content moderation and creating “positive” experiences.

On the moderation side, Bruzzo said EA has worked to make it easy for players to report speech. The developer uses both AI and human moderators, with Bruzzo insisting that AI makes moderation much easier.

He noted that player names are one of the areas where “toxicity” is an issue. Players try to trick the AI by using symbols, and he said EA’s automated systems have been trained to catch such workarounds.

Bruzzo claimed that in the summer of 2022, EA ran 30 million Apex Legends club names through AI and removed 145,000 that violated its rules. He said human moderators would not have done that.

Bruzzo said that the Positive Play program has helped significantly reduce hateful conduct.

“One of the reasons that we’re in a better position than social media platforms [is because] we’re not a social media platform,” he said. “We’re a community of people who come together to have fun. So this is actually not a platform for all of your political discourse. This is not a platform where you get to talk about anything you want…The minute that your expression starts to infringe on someone else’s ability to feel safe and included or for the environment to be fair and for everyone to have fun, that’s the moment when your ability to do that goes away. Go do that on some other platform. This is a community of people, of players who come together to have fun. That gives us really great advantages in terms of having very clear parameters. And so then we can issue consequences, and we can make real material progress in reducing disruptive behavior.”

Bruzzo did acknowledge that it is much harder to censor voice chat because of the risk of violating privacy laws.

“In the case of voice, the most important and effective thing that anyone can do today is to make sure that the player has easy access to turning things off,” he said. “That’s the best thing we can do.”