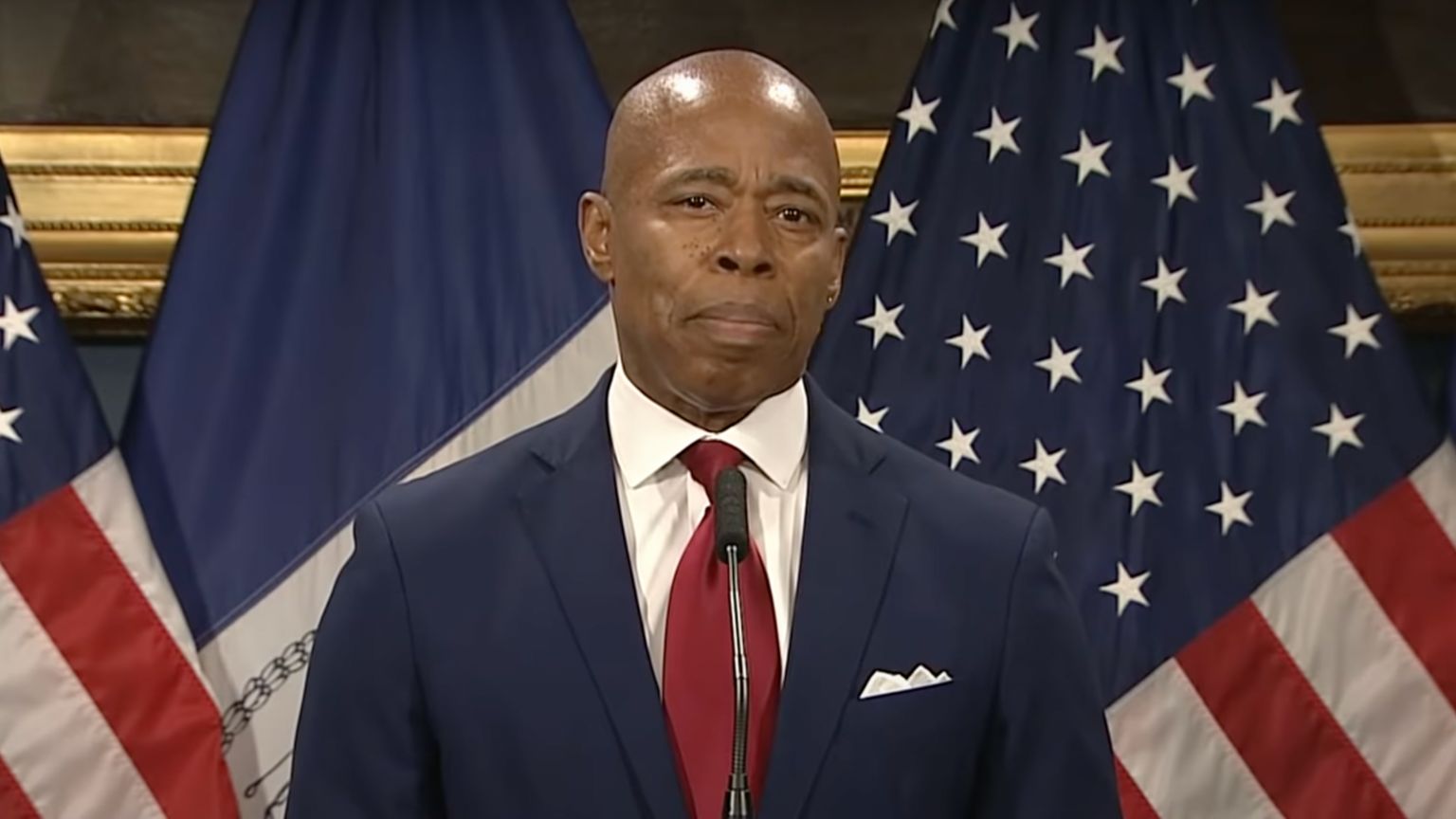

Although civil rights and privacy advocates consistently warn against deploying more facial recognition technology, which they see as prone to enabling mass surveillance and too inaccurate for policing, mayors like New York City’s Eric Adams are adamant about moving in the opposite direction.

According to a press release, Adams intends to expand the use of facial recognition as a way for the police to “identify problems, follow up on leads, and collect evidence.”

Reports on the plan to expand the New York Police Department’s (NYPD) current use of facial recognition point to Amnesty International data showing that the practice is already invasive. According to Amnesty International, the city’s main law enforcement agency already takes images, some of them scraped from the internet and others obtained from some 15,000 surveillance cameras installed across New York, and compares them against its own database of suspects and felons.

Why this controversial push needs to go even further is a question raised by American Civil Liberties Union (ACLU) senior policy counsel Michael Sisitzky. Cited by Reason magazine, Sisitzky argues that the mass surveillance already operational and at the NYPD’s disposal is enough to “track who goes where and when.”

Once again, the ACLU emphasizes that the use of facial recognition could disproportionately infringe on the rights of minorities, but the problem of mass surveillance is, and will remain, universal.

One reason is the technology’s questionable reliability: it can be easily manipulated and is generally inaccurate. Jameson Spivack of Georgetown Law’s Center on Privacy & Technology has described the NYPD’s use of facial recognition as “unscientific.” The practice has gone so far that officers, when they cannot find a match for a suspect’s image from security video, have on occasion tried matching a photo of a celebrity lookalike instead.

In one case, it was a photo of Woody Harrelson.

“When the software turned up matches for the actor, the police identified and arrested one of the matches that they thought resembled the suspect from the security cam footage,” writes Reason.

One proposed solution is for New York to follow in the footsteps of San Francisco, Oakland, and Seattle and ban facial recognition instead of doubling down on its use.