The idea of using AI to detect, and perhaps even censor, AI-generated content online has gained traction over the last year. However, if recent tests are to be believed, the accuracy of such technology is far from perfect – meaning genuine content could be wrongly censored if such technology is trusted.

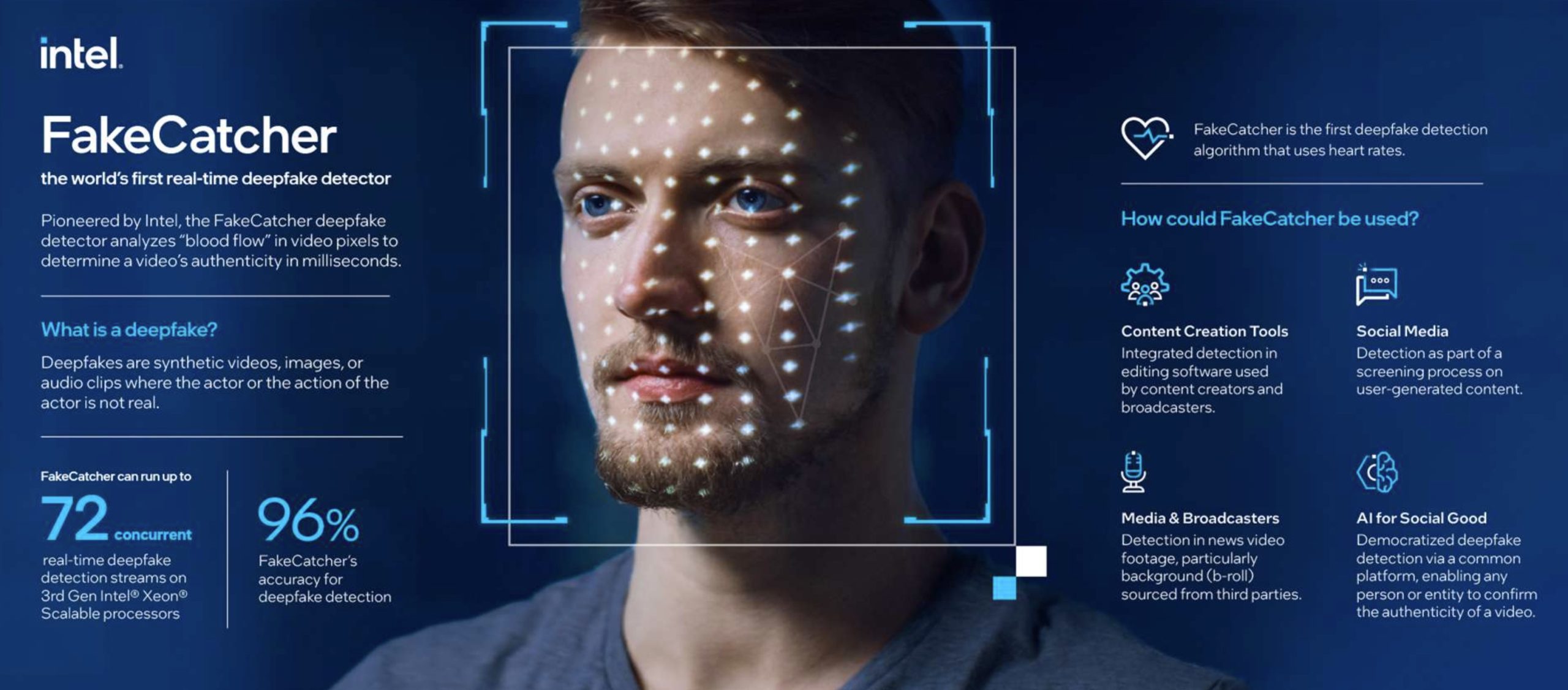

In the fight against deepfake videos, Intel has released a new system named “FakeCatcher” that can allegedly distinguish between genuine and tweaked digital media. The system’s effectiveness was put to the test using a mixture of real and doctored clips of former President Donald Trump and current President Joe Biden. Intel reportedly uses a technique called photoplethysmography (PPG), which reveals changes in blood circulation, and also tracks eye movement to identify and expose these deepfakes.

Ilke Demir, a scientist on the Intel Labs research team, explained that the process involves judging the authenticity of content based on human signals such as changes in a person’s blood flow and the consistency of their eye movement, the BBC reported.

These natural human characteristics are detectable in real videos but absent in videos made with AI tools, Demir said.

However, preliminary testing revealed that this technology might not be foolproof. Despite the company’s bold claim of 96% accuracy for FakeCatcher, the test results told a contrasting story. The system was effective at detecting lip-synced deepfakes, failing to recognize only one out of several instances. The real problem emerged when the system was presented with genuine videos: it erroneously identified some of them as inauthentic.

The system has a clear limitation with pixelated or low-quality videos, where facial blood flow signals are hard to detect. Furthermore, FakeCatcher does not analyze audio, so a video whose audio sounds authentic could still be labeled as fake.

Despite the reservations of researchers such as Matt Groh of Northwestern University, who questioned whether the showcased statistics apply in real-world situations, Demir defends FakeCatcher’s overcautious approach, which aims to minimize the number of fake videos that are missed.
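
The trade-off behind an overcautious detector can be illustrated with a small sketch. Nothing here reflects how FakeCatcher actually works: the `confusion_counts` helper, the "fakeness" scores, and the thresholds are all hypothetical, invented purely for illustration. The sketch only shows the general pattern that flagging clips more aggressively lowers the number of missed fakes while raising the number of real clips wrongly flagged.

```python
def confusion_counts(scores, labels, threshold):
    """Count outcomes when clips scoring >= threshold are flagged as fake.

    Returns (true_positives, false_positives, true_negatives, false_negatives).
    """
    tp = fp = tn = fn = 0
    for score, is_fake in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_fake:
            tp += 1          # fake clip correctly flagged
        elif flagged and not is_fake:
            fp += 1          # real clip wrongly flagged as fake
        elif not flagged and is_fake:
            fn += 1          # fake clip missed
        else:
            tn += 1          # real clip correctly passed
    return tp, fp, tn, fn


# Hypothetical detector scores (higher = more likely fake), not real data.
scores = [0.95, 0.80, 0.65, 0.40, 0.70, 0.55, 0.30, 0.10]
labels = [True, True, True, True, False, False, False, False]  # True = fake

for threshold in (0.6, 0.35):
    tp, fp, tn, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: missed fakes={fn}, real clips wrongly flagged={fp}")
```

With these made-up numbers, lowering the threshold from 0.6 to 0.35 removes the one missed fake but doubles the number of genuine clips flagged, which is the kind of error the tests above ran into.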