Correcting false information on Twitter can make the problem worse: users who are publicly corrected go on to share lower-quality news, according to new research.

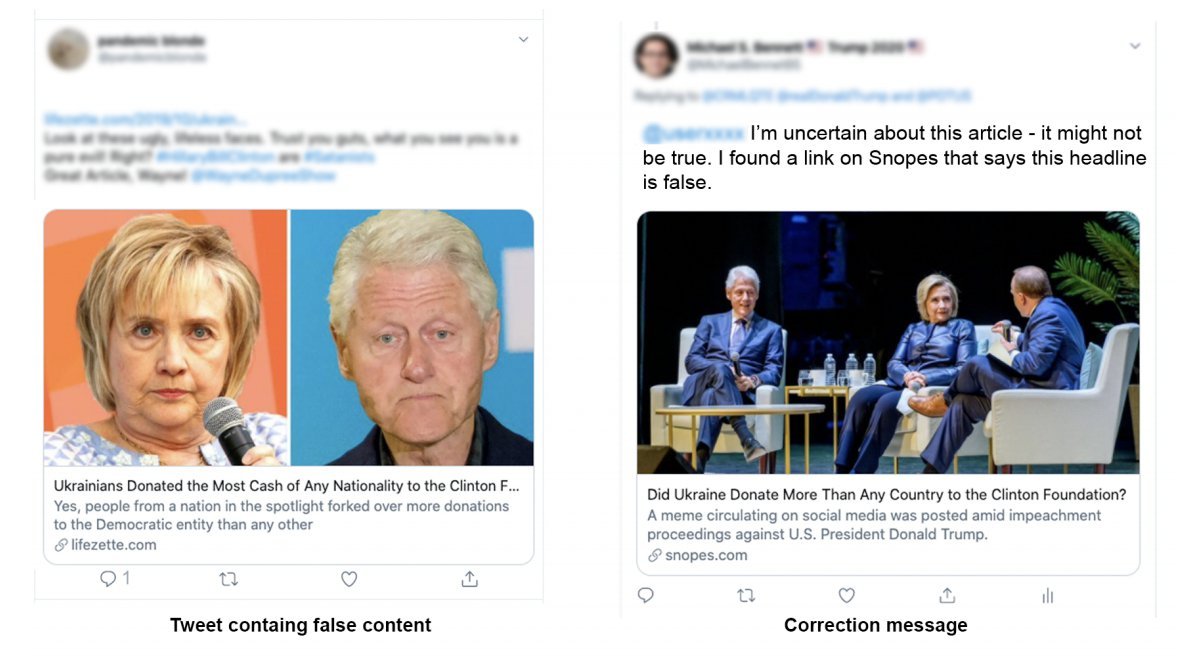

The study was conducted by researchers at the MIT Sloan School of Management in the US and the University of Exeter in the UK. The researchers first identified about 2,000 Twitter accounts from across the political spectrum that had shared one of the 11 most popular fake news articles. All of those articles had been debunked by Snopes, a website that claims to be the “definitive fact-checking resource.”

The team then used Twitter bot accounts it had created, each of which had existed for more than three months and amassed at least 1,000 followers by the time of the study. The accounts appeared human to other Twitter users.

The bots replied to tweets that shared any of the 11 fake news articles with a correction such as: “I’m uncertain about this article – it might not be true. I found a link on Snopes that says this headline is false.” Each reply also included a link to the relevant Snopes fact-check.

They found that, in the 24 hours after being corrected, users retweeted news that was about 1% less accurate. Within the same 24 hours, the “partisan lean” of the corrected users’ retweets increased by more than 1%, and the so-called “toxicity” of their language increased by 3%.

The lead author of the study, Dr Mohsen Mosleh of the University of Exeter Business School, described the findings as “not encouraging,” because they suggest that publicly correcting people, one of the tools used to fight misinformation, does not work.

“After a user was corrected they retweeted news that was significantly lower in quality and higher in partisan slant, and their retweets contained more toxic language,” said Dr Mosleh.

The researchers also found that news quality declined in plain retweets (as opposed to quote tweets, which add a comment), but not in original, or “primary”, tweets by the studied accounts. One possible explanation is that users spend much more time crafting original tweets than they do deciding what to retweet.

“Our observation that the effect only happens to retweets suggests that the effect is operating through the channel of attention,” said the study’s co-author Professor David Rand from the MIT Sloan School of Management.

“We might have expected that being corrected would shift one’s attention to accuracy.

“But instead, it seems that getting publicly corrected by another user shifted people’s attention away from accuracy – perhaps to other social factors such as embarrassment.

“This shows how complicated the fight against misinformation is, and cautions against encouraging people to go around correcting each other online.”