Twitter has announced that ordinary users will now be able to flag tweets for “misinformation” under its new community-based Birdwatch program.

The program is currently being piloted in the US and lets Twitter users create notes that “add context to tweets” via a three-step process.

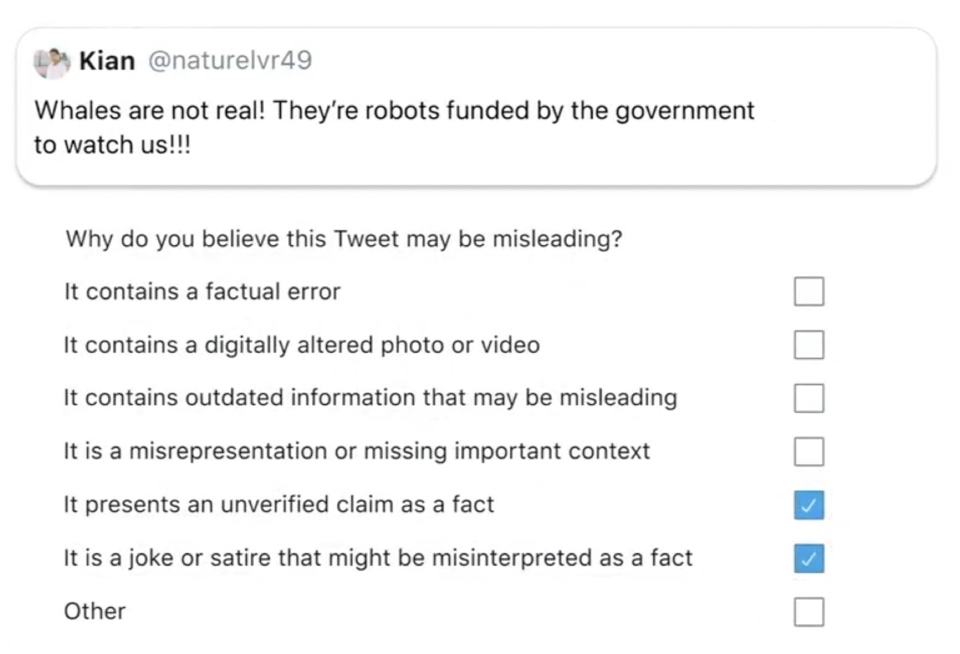

First, users are asked to select why they believe the tweet is misleading (with one of the options being “it is a joke or satire that might be misinterpreted as fact”).

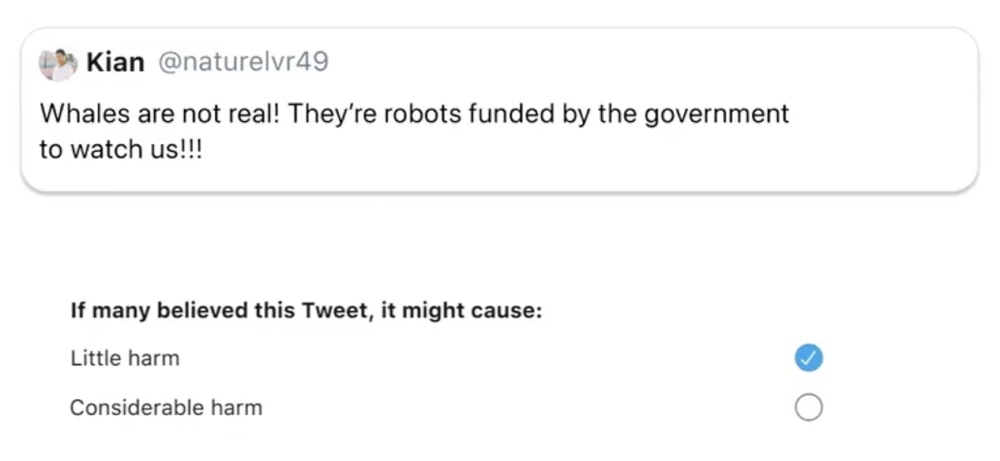

Then they’re asked whether they believe the tweet could cause “little harm” or “considerable harm.”

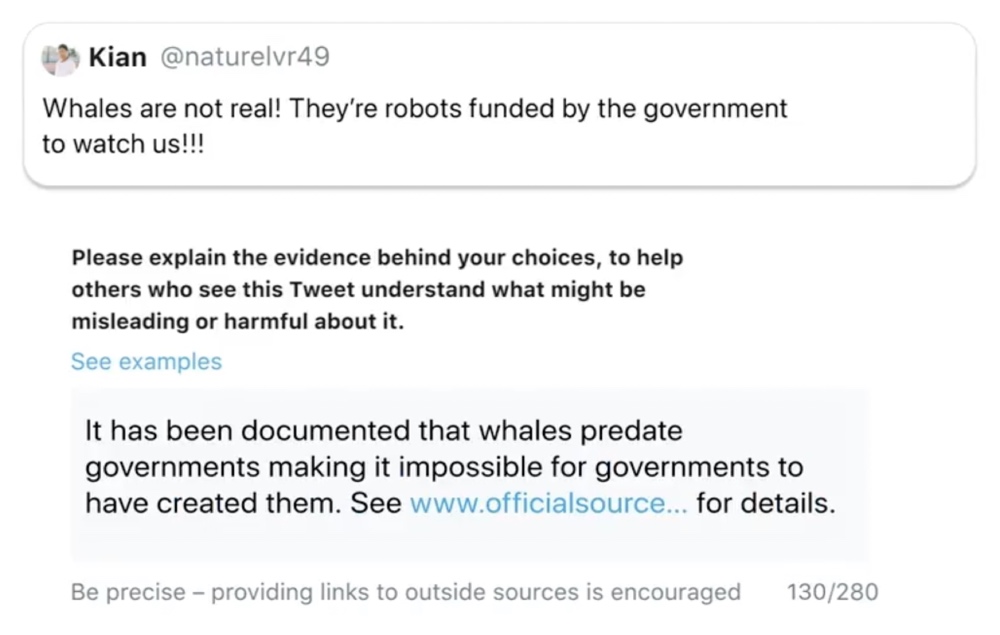

Finally, they’re asked to leave a note that explains the evidence behind their choice.

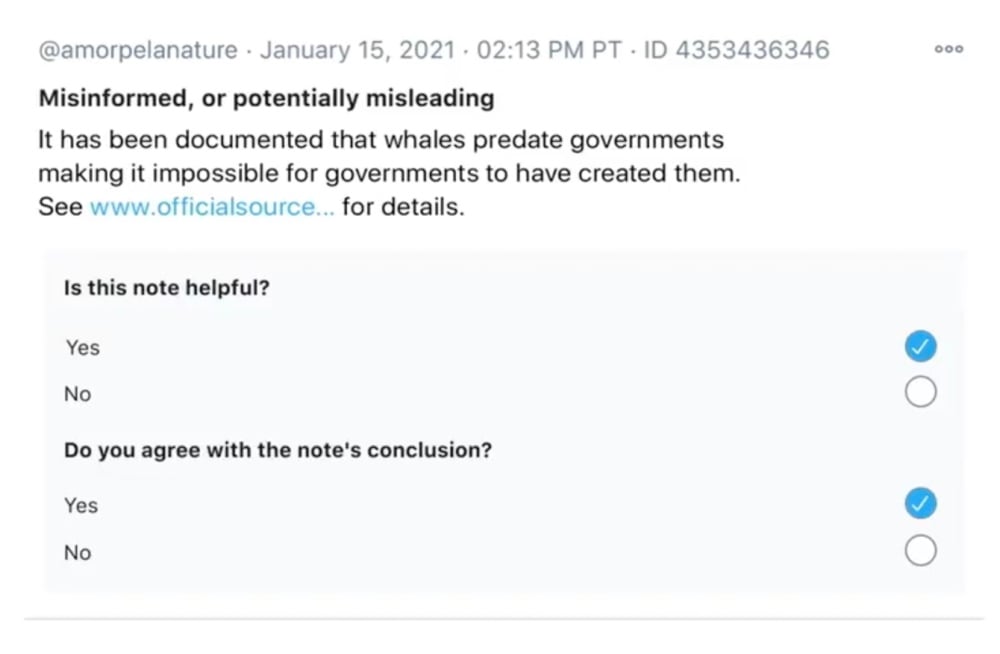

For now, Birdwatch notes are visible on a separate Birdwatch site. Through this site, pilot participants can rate the helpfulness of the notes and indicate whether they agree with the notes’ conclusions.

Twitter adds that Birdwatch notes “will not have an effect on the way people see Tweets or our system recommendations.”

Twitter hasn’t specified when or how the notes will be integrated into the main Twitter app.

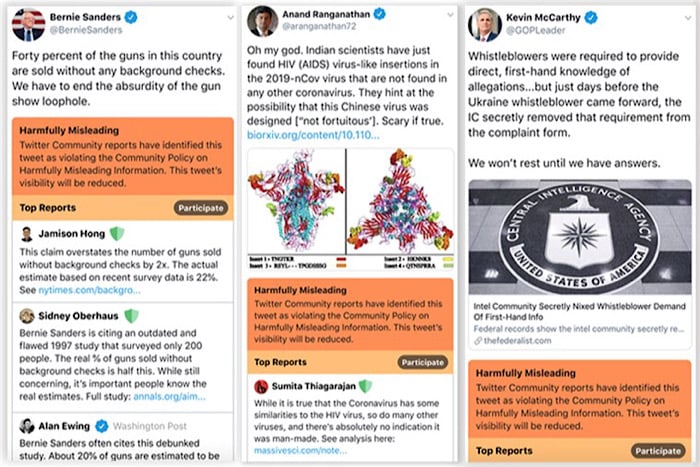

However, leaked documents containing screenshots of a similar “Community Notes” feature that Twitter was testing in February 2020 showed one possible iteration of how notes could be applied to tweets.

In these screenshots, prominent bright orange labels were applied to “harmfully misleading” tweets and a selection of notes from Twitter users was displayed below the label. The label also stated: “This tweet’s visibility will be reduced.”

One of the biggest problems with this approach is the huge potential for brigading and false flagging. Any tweet, regardless of its accuracy, could be labeled as misinformation if enough users flag it or give a positive rating to notes that claim the tweet is misleading. And any bad actor with a large enough following could easily direct those followers to mass flag tweets.

Twitter addressed the brigading concerns by stating:

“Brigading is also one of our top concerns. Birdwatch will only be successful if a wide range of people with diverse views find context it adds to be helpful & appropriate. We’ll be experimenting with mechanics and incentives that are different from Twitter. For example, incorporating input from a wide & diverse set of folks vs. letting a simple majority determine outcomes. Later in the pilot, we’ll build a reputation system where participants earn reputation over time for contributions that people from a wide range of perspectives find helpful.”
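To make the distinction in that statement concrete, here is a minimal sketch of how a simple majority rule differs from a rule that requires agreement across groups. It is purely illustrative: Twitter has not described how it would measure a “wide & diverse set of folks,” so the group labels, the function names `simple_majority` and `cross_group_agreement`, and the `min_groups` threshold are all assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each rating is (rater_group, found_helpful). The group label is a
# hypothetical stand-in for "perspective"; how Twitter would actually
# measure viewpoint diversity is not stated in its announcement.
Rating = Tuple[str, bool]


def simple_majority(ratings: List[Rating]) -> bool:
    """A note passes if more than half of all ratings are positive."""
    if not ratings:
        return False
    helpful = sum(1 for _, ok in ratings if ok)
    return helpful * 2 > len(ratings)


def cross_group_agreement(ratings: List[Rating], min_groups: int = 2) -> bool:
    """A note passes only if a majority within every group rates it positively.

    Assumption: at least `min_groups` distinct groups must have rated it.
    """
    by_group: Dict[str, List[bool]] = defaultdict(list)
    for group, ok in ratings:
        by_group[group].append(ok)
    if len(by_group) < min_groups:
        return False
    return all(sum(votes) * 2 > len(votes) for votes in by_group.values())


# A brigade: 80 raters from one group approve a note, 20 from another reject it.
brigaded = [("A", True)] * 80 + [("B", False)] * 20
print(simple_majority(brigaded))        # True
print(cross_group_agreement(brigaded))  # False
```

Under the simple majority rule, the larger group alone decides the outcome. Under the cross-group rule, the same 80 votes are not enough because the second group disagrees, although the result then depends entirely on how raters are sorted into groups.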

While the reputation system could reduce the prevalence of brigading, it’s likely to create a new problem by allowing a small proportion of Birdwatch users with a high reputation score to decide what constitutes misinformation.

Even if Twitter attempts to factor in ratings from a wide range of perspectives, statistics have shown that Twitter’s recent censorship has disproportionately affected Republicans. If Republicans are being purged at a much higher rate than Democrats, then the remaining users are likely to skew this reputation system in one direction.

This problem of giving a small number of users a disproportionate amount of power has been highlighted multiple times on Wikipedia, where just over 1,000 “trusted users” have been appointed administrators and given additional technical privileges. These administrators are often highly influential when Wikipedia blacklists news outlets, and the vast majority of outlets that get blacklisted are conservative.