Last year, Twitter re-launched its system that inserts a prompt whenever it detects that a user is about to post a “potentially offensive” statement.

The feature was somewhat controversial when it was introduced, because it lets Twitter decide what counts as “offensive” and so nudge the public conversation in a direction of its choosing.

Now, some data has been collected that reveals the impact these prompts have.

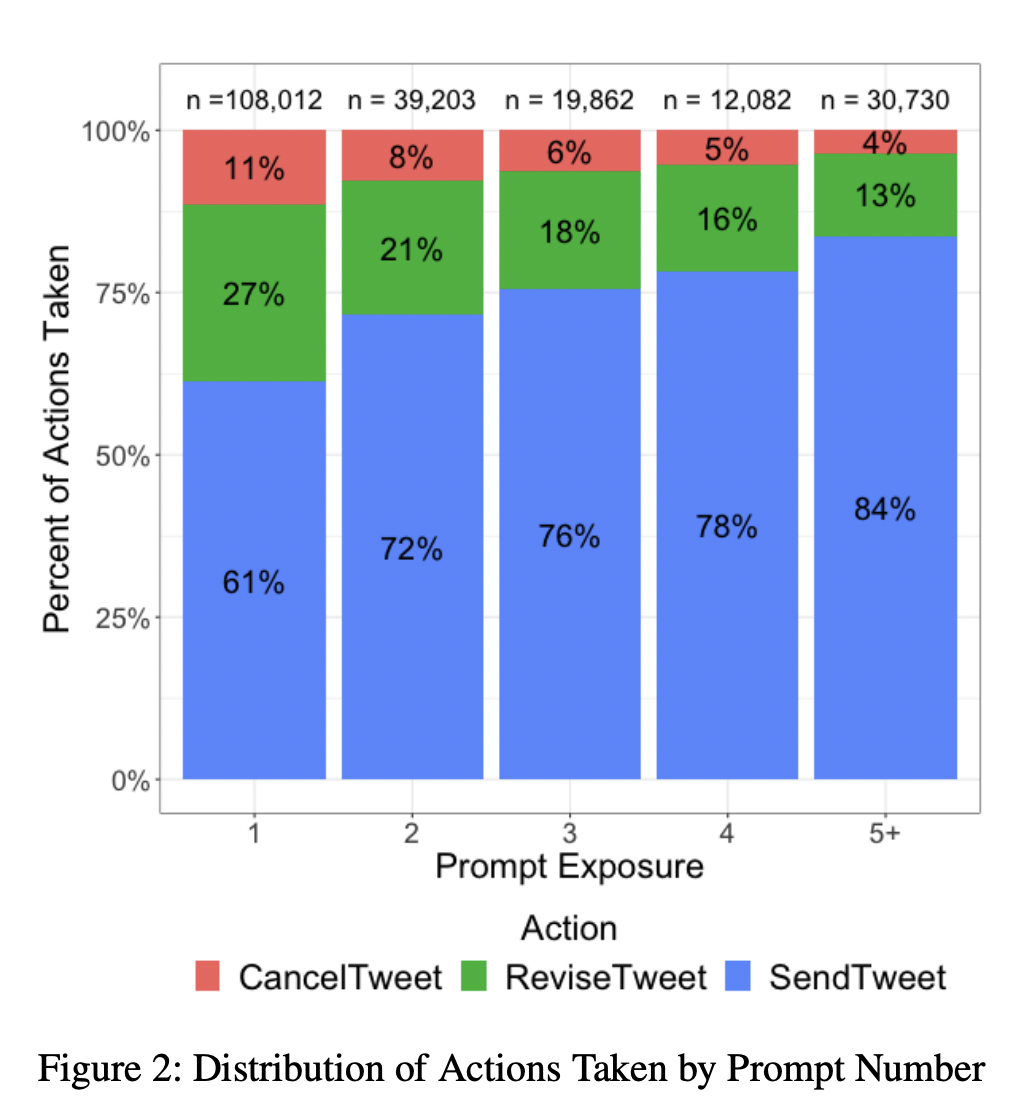

In the report, Twitter says that 30% of users who are shown the prompt backtrack on what they were about to post, either changing it or deleting it.

Thirty percent is no small number when the impact is extrapolated across Twitter’s almost 200 million users.