It has been revealed that the UK government is developing yet another dystopian scheme, this one being compared to the concepts explored in Minority Report, as it involves algorithm-based analysis to “predict murder.”

The Ministry of Justice (MoJ) “Homicide Prediction Project,” as it was known when first launched and which is now referred to with the generic phrase “sharing data to improve risk assessment,” uses information belonging to between 100,000 and half a million people, with the goal of finding out who is “at greatest risk” of becoming a murderer.

Statewatch uncovered the project, which started during Rishi Sunak’s government. The civil rights group used Freedom of Information requests and notes that this is a previously secret program involving an agreement between the MoJ, Greater Manchester Police (GMP), and the London Metropolitan Police.

Responding to the report, the UK government said the project was for research purposes only, “at this stage.” Statewatch quotes from one of the three FOIA documents it has seen, in which the MoJ mentions a future “operationalization” of this system.

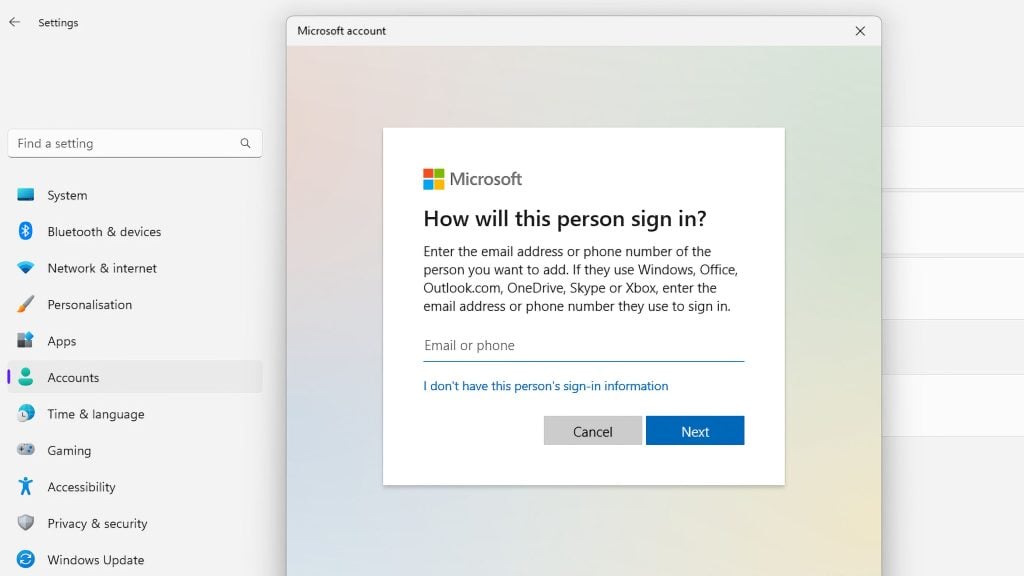

The data used is originally collected by the police, and comes not only from criminals or suspects but also victims, witnesses, missing persons, “and people for whom there are safeguarding concerns.”

However, the MoJ claims that only data from convicted persons is being used, maintaining that other categories are not included.

The information processed by the “predictive tool” includes names, dates of birth, genders, ethnicities, and the Police National Computer unique identifier, as well as “health markers.”

These cover a person’s mental health history, and details such as addiction, self-harm, suicide, vulnerability, and disability.

Big Brother Watch reacted to the news about the scheme by stating that the government giving itself the ability to use machines to predict who might turn into a killer is “alarming.”

Interim Director Rebecca Vincent remarked that even when a crime has already been committed, algorithms and AI can still produce erroneous conclusions based on evidence.

Using the same technology and techniques to try and “predict crime” that could end up targeting innocent people carries with it “enormous privacy implications,” Vincent said, calling for the project to be immediately abandoned as “a human rights nightmare reminiscent of science fiction that has no place in the real world, and certainly not in a democracy.”