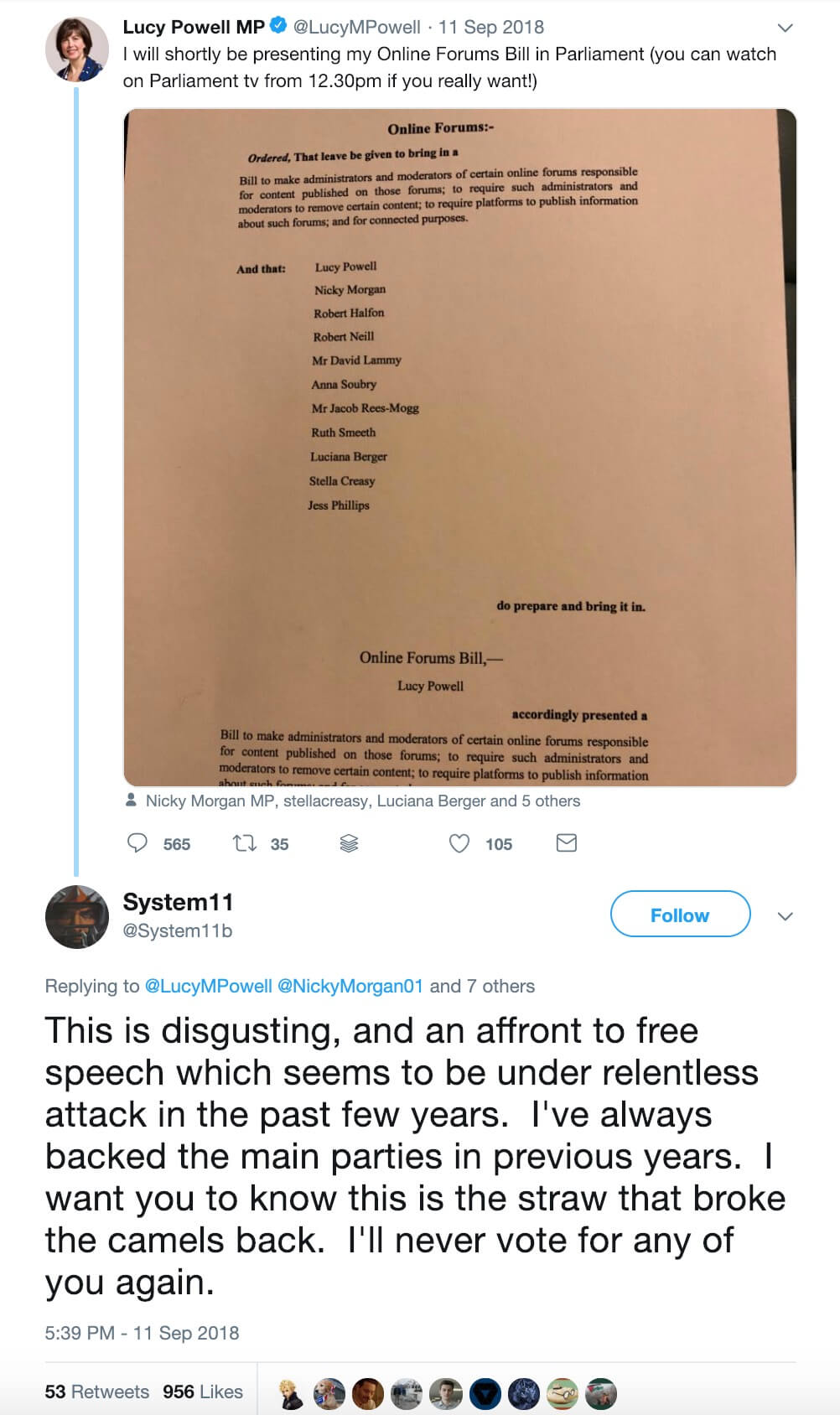

• A group of UK Members of Parliament (MPs) are asking the government to consider the Online Forums Bill – a bill which would potentially make online forum administrators and moderators responsible for illegal content that is posted to their forums

• The author of the bill, Lucy Powell MP, is also suggesting that it would require online forum administrators and moderators to remove content deemed harmful and damaging, and that it could be used to tackle online hate, fake news, and radicalization

• However, the bill itself contains no reference to harmful and damaging content, hate, fake news, or radicalization, so it’s unclear how Powell’s statements apply to the bill

A group of UK MPs are asking for parliamentary time to debate and vote on the Online Forums Bill – a bill which, if passed, would make online forum administrators and moderators responsible for illegal content that’s posted to their forums.

In a letter sent to Andrea Leadsom, the leader of the UK House of Commons, and Jeremy Wright, the UK’s Culture Secretary, the group of MPs suggest that “those with malign intent are able to exploit the openness of social media to spread hate and disinformation” and that this “misuse of social media” needs to be addressed legislatively through the Online Forums Bill.

The letter asking for this bill to be discussed in Parliament was written by Lucy Powell, the Labour MP for Manchester Central, and has been co-signed by the MPs Robert Halfon, Nicky Morgan, Bob Neill, Jacob Rees-Mogg, Jess Phillips, David Lammy, Stella Creasy, Luciana Berger, Anna Soubry, and Ruth Smeeth.

In the letter, Powell says the Online Forums Bill would “require administrators and moderators to remove certain harmful and damaging content.” Additionally, in a tweet about the bill, Powell described it as a “proposal to tackle online hate, fake news, and radicalization.”

However, the Online Forums Bill refers specifically to illegal content and does not mention harmful and damaging content, hate, fake news, or radicalization.

Not only does this make it unclear how these terms will apply to the final bill, but the terms themselves are also incredibly vague and undefined. Powell doesn’t describe the criteria that will be used to identify harmful or damaging content, hate, fake news, or radicalization.

Using vague terms like this when talking about potential laws is very dangerous. If the terms aren’t clearly defined, jokes can be deemed harmful or damaging, criticism can be deemed hateful, and satire or news that certain people don’t agree with can be deemed fake news.

Ultimately, any piece of content could be labeled with these vague terms, and if they make their way into law, they could easily be used to suppress free speech and censor people through the legal system.

This is often the case when hate speech laws are proposed, and it’s yet another example of why hate speech laws shouldn’t be a thing.