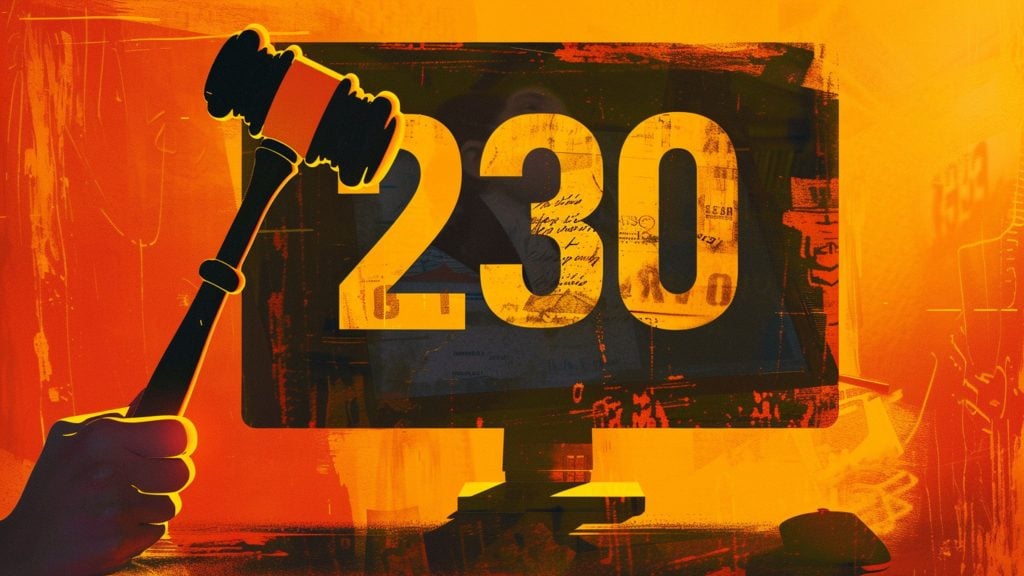

Five Democrat senators have penned a letter to OpenAI CEO Sam Altman, applying not-so-subtle pressure on the company to commit “to making its next foundation model available to US Government agencies for pre-deployment testing, review, analysis, and assessment.”

But “pre-deployment testing by government agencies”? Are these guys sure this is how any democratic (no pun intended) government should be speaking to a private company?

What Altman is really being asked, critics say, is to commit to even broader Big Tech-Big Government collusion.

Accusations of overstepping constitutional bounds before and after the previous election now range from involvement in censoring social media posts, to teaming up with gargantuan advertising groups to cut off revenue streams for individuals and businesses, to, now, seemingly tampering with future AI.

It’s not like the senators have nothing to work with here: OpenAI very recently added former NSA Director Paul M. Nakasone to its board.

OpenAI also recently established a Safety and Security Committee, of which Nakasone is a member. Plus, Altman has been quoted talking about his company developing “levels to help us and stakeholders categorize and track AI progress.”

But Democrat senators are not the only ones who get to ask questions. One might be: who exactly are your “stakeholders,” Altman? And could Nakasone’s switch from government to tech (an extraordinary example of the “revolving door” phenomenon) be considered a way to implement “prior restraint”?

That, too, is illegal in the US, as is government censorship of speech; and prior restraint, or prior censorship, is exactly what it says on the tin. These days, a popular form of it is “prebunking,” and some form of AI is a necessary ingredient.

The senators’ decision to publicly lean on Altman at this particular time was allegedly prompted by a Washington Post report citing employees concerned that safety testing is being “rushed through.”

So the letter frames its point around AI “safety” and the stance of OpenAI, the apparent industry leader.

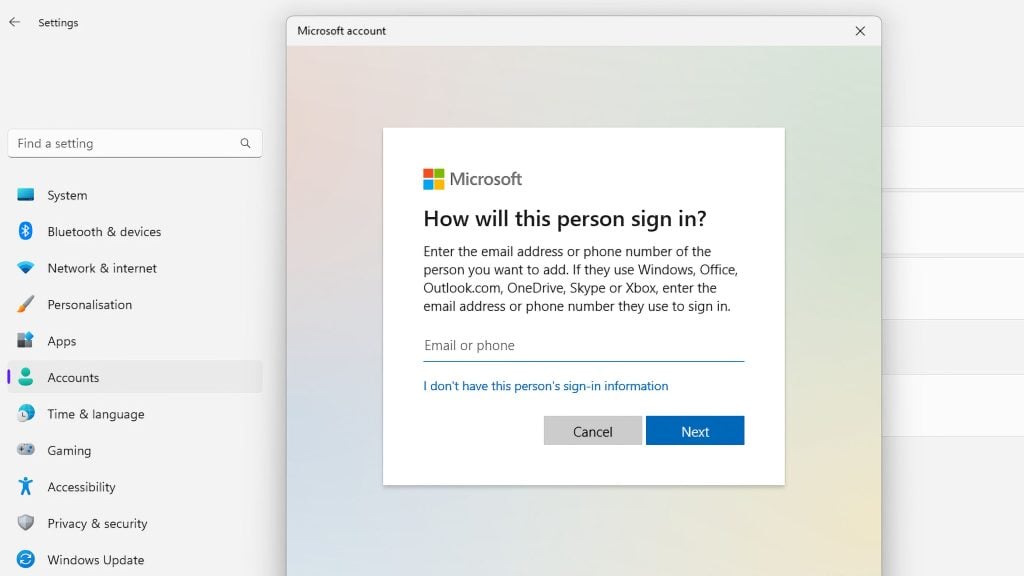

But then question number 9, out of 12, sneaks in. It is the same one quoted at the top of this article: “Will OpenAI commit to making its next foundation model available to US Government agencies for pre-deployment testing, review, analysis, and assessment?”

There’s also the question: what if the next US government is not controlled by Democrats?

In other words, are Democrats playing a long collusion game? Or is this just another Hail Mary pass ahead of the November vote?