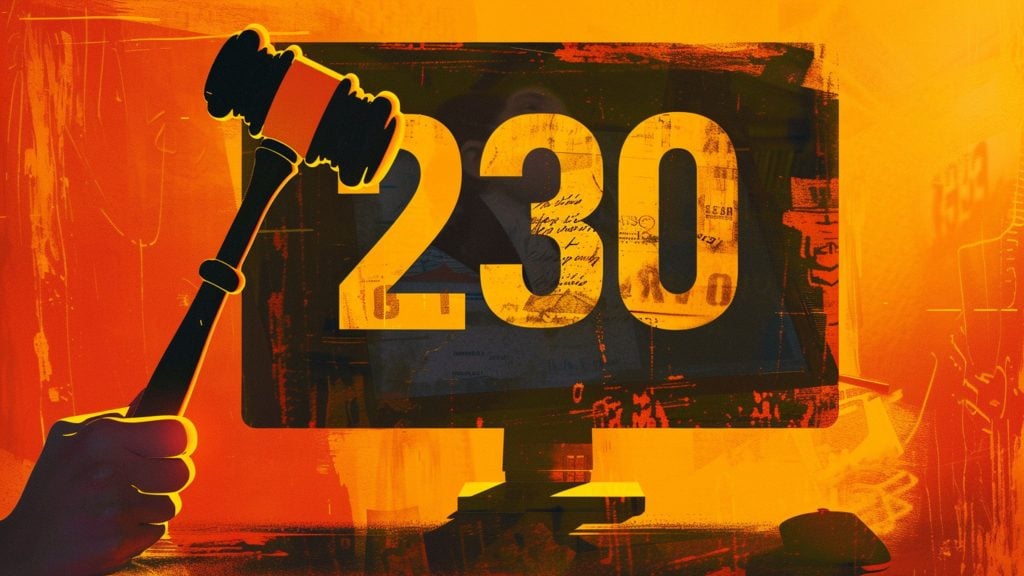

A research paper whose authors include researchers from Microsoft, OpenAI, and a host of influential universities proposes developing “personhood credentials” (PHCs).

The paper is notable because the same companies developing and selling potentially “deceptive” AI models are now proposing a fairly drastic “solution”: a form of digital ID.

The goal would be to prevent deception by identifying the people who create content on the internet as “real,” as opposed to content generated by AI. And the paper freely admits that privacy is not included.


Instead, the paper talks of “cryptographic authentication,” which it also describes as “pseudonymous,” since PHCs are not supposed to publicly identify a person. Unless, that is, the demand comes from law enforcement.

“Although PHCs prevent linking the credential across services, users should understand that their other online activities can still be tracked and potentially de-anonymized through existing methods,” said the paper’s authors.

Here we arrive at what could be the gist of the story: come up with a workable digital ID available to the government while preserving anonymity on the surface, and wrap it all in a package that supposedly rights the very wrongs Microsoft and co. are creating through their lucrative “AI” products.

The paper treats online anonymity as the key “weapon” of bad actors engaging in deceptive behavior. Microsoft product manager Shrey Jain suggested during an interview that while anonymity was acceptable in the past for the sake of privacy and access to information, times have changed.

The reason is AI, or rather AI panic, which is thriving well before the world ever gets to experience, and deal with, true AI (AGI). But it’s good enough for the likes of Microsoft, OpenAI, and over 30 others (including Harvard, Oxford, MIT…) to propose PHCs.

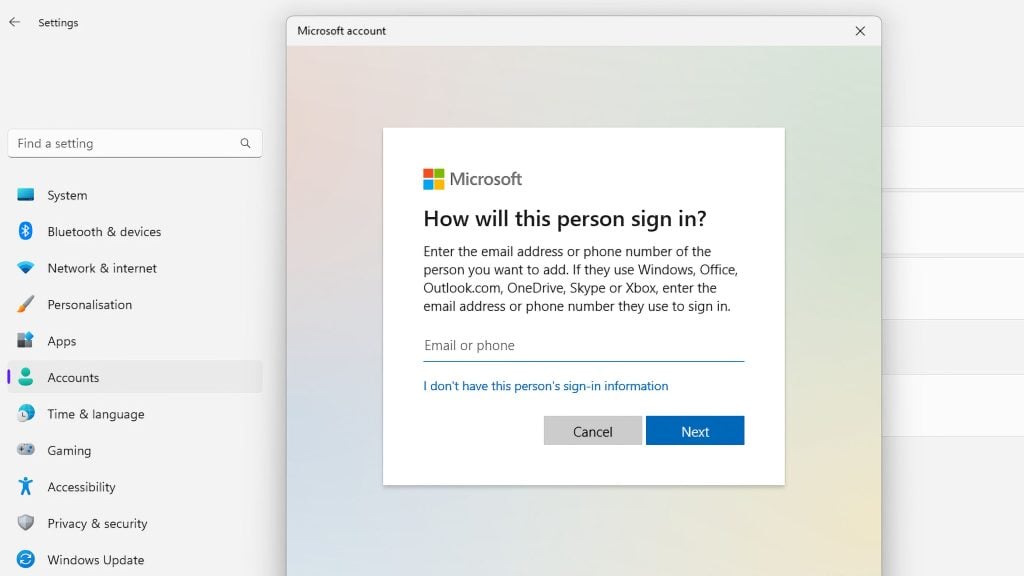

In this scenario, people are treated as not much different from websites, with the PHC system likened to the certificate authorities that vouch for websites. But it remains unclear which authority should “authenticate” humans and assign them identifiers as proof that they are human, although governments are mentioned as possible “roots of trust.”

Since the paper is not really a concrete blueprint for developing and implementing PHCs, it could also be seen as a way for the industry to keep raking in revenue from “AI” while pacifying restless governments by reassuring them that “something is being done.”