In an appearance this week on the Joe Rogan Experience, Meta CEO Mark Zuckerberg repeated his claim that Facebook used an algorithm to limit the spread of the Hunter Biden laptop articles and that the FBI had warned the company of “Russian disinformation” ahead of the 2020 election.

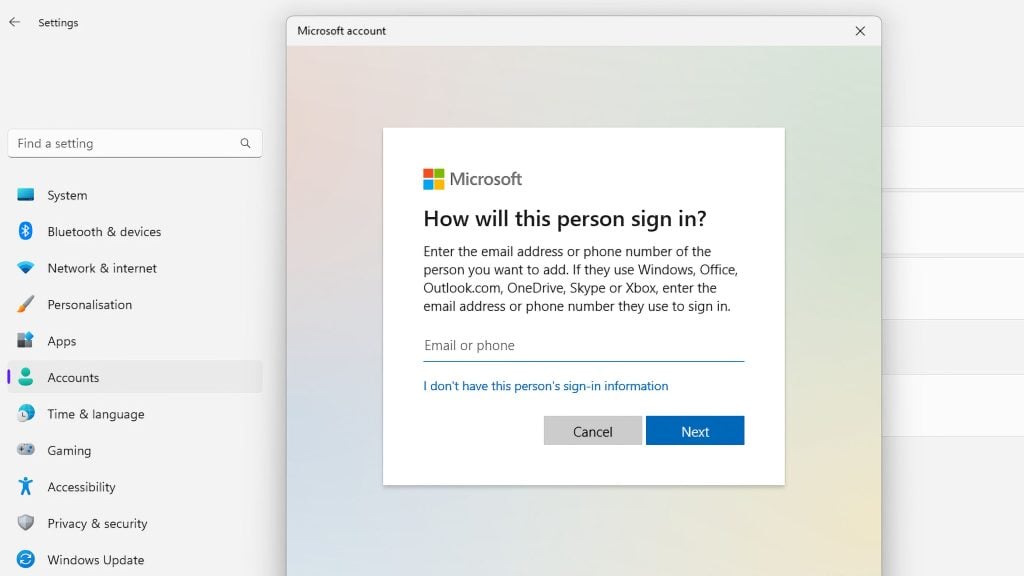

Zuckerberg first made the claim at a Senate committee hearing in October 2020.

Zuckerberg insisted that, unlike Twitter, Facebook did not ban the story entirely. At the time, over 50 former intelligence officials signed a letter claiming that the story “had all the classic earmarks of a Russian information operation.”

“So we took a different path than Twitter. Basically the background here is the FBI, I think basically came to us – some folks on our team and was like, ‘Hey, um, just so you know, like, you should be on high alert. There was the — we thought that there was a lot of Russian propaganda in the 2016 election. We have it on notice that basically there’s about to be some kind of dump of — that’s similar to that. So just be vigilant,’” he explained.

Zuckerberg continued to explain that Facebook uses third-party fact-checkers to determine if something is misinformation.

“So our protocol is different from Twitter’s. What Twitter did is they said ‘You can’t share this at all.’ We didn’t do that,” Zuckerberg said. “If something’s reported to us as potentially, misinformation, important misinformation, we also use this third party fact-checking program, cause we don’t wanna be deciding what’s true and false.”

“I think it was five or seven days when it was basically being determined whether it was false. The distribution on Facebook was decreased, but people were still allowed to share it. So you could still share it. You could still consume it,” he said.

Rogan asked what he meant by “decreased distribution.” Zuckerberg said that meant the story would appear much lower on users’ newsfeeds.

“I don’t know off the top of my head, but it’s meaningful,” Zuckerberg said when asked the percentage of the decrease in distribution. “But basically, a lot of people were still able to share it. We got a lot of complaints that that was the case.”

Zuckerberg has previously said that a fact-checked article got 95% less reach than a standard post.

“We weren’t, sort of, as black and white about it as Twitter,” he continued. “We just kind of thought if the FBI, which I still view as a legitimate institution in this country, it’s a very professional law enforcement. They come to us and tell us that we need to be on guard about something. Then I wanna take that seriously.”

Rogan then asked: “Did they specifically say you need to be on guard about that story?”

“I don’t remember if it was that specifically, but it basically fit the pattern,” Zuckerberg said.

Asked about the aftermath of limiting the spread of the story, Zuckerberg said: “Yeah. I mean, it sucks, it turned out after the fact. The fact-checkers looked into it, no one was able to say it was false, right. So basically it had this period where it was getting less distribution.” His answer suggests the story was suppressed even though it had never been shown to be false.

“I think it probably, it sucks though, I think in the same way that probably having to go through like a criminal trial, but being proven innocent in the end, sucks. Like it still sucks that you had to go through a criminal trial, but at the end you’re free.

“I don’t know if the answer would’ve been don’t do anything or don’t have any process. I think the process was pretty reasonable. We still let people share it, but obviously you don’t want situations like that.”