TikTok, the wildly popular Chinese video-sharing app, has long been accused of having a race problem.

The company is already under fire over privacy, with countries such as India having banned the app and the US voicing concerns about user privacy, and it has also faced accusations of racial bias.

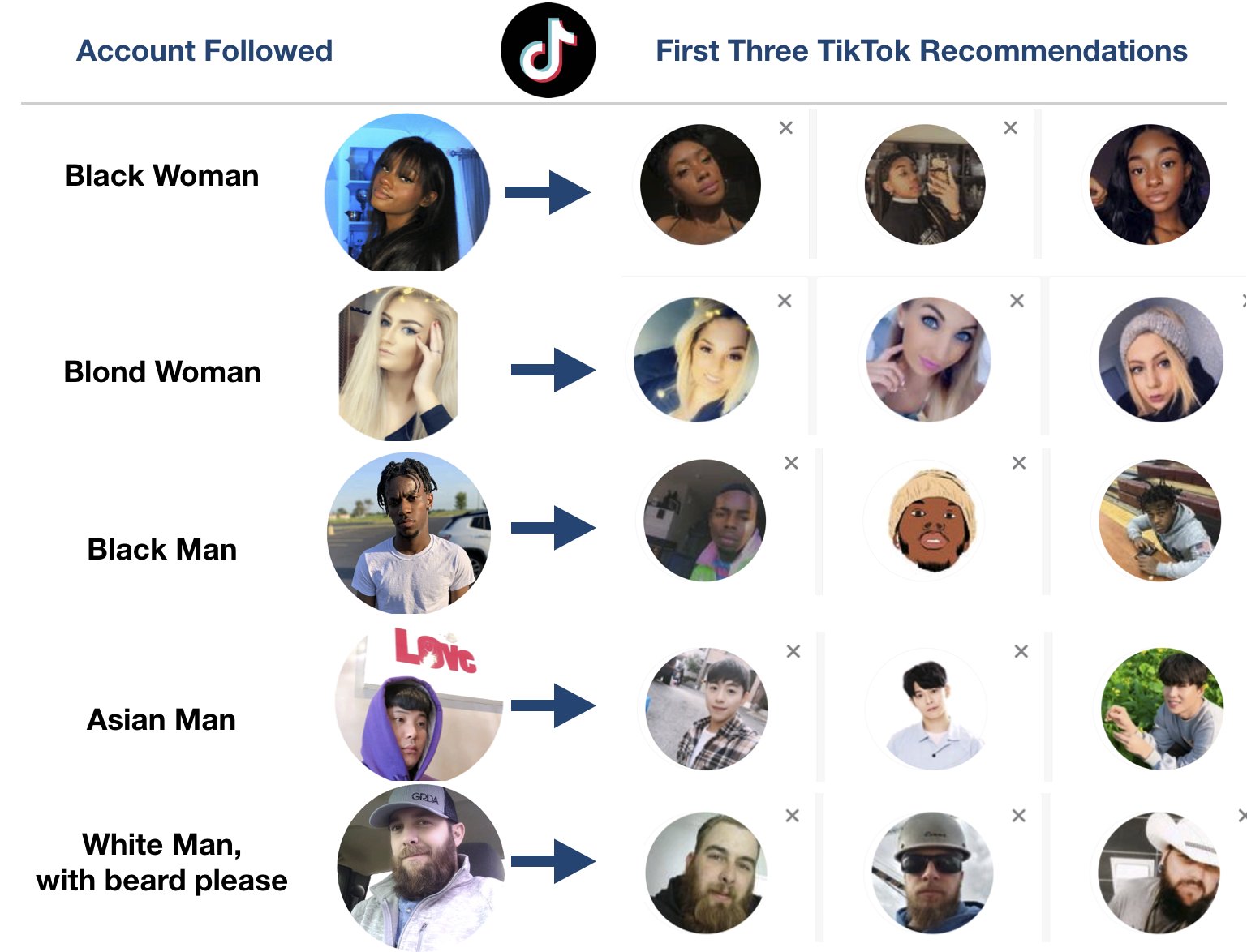

Marc Faddoul, an artificial intelligence researcher, brought more solid evidence to this idea at the start of the year, stating that a glitch in TikTok may be fostering racial bias in its algorithm and causing it to censor black people. Faddoul laid out his findings in a series of tweets.

“Follow a random profile and TikTok will only recommend people who look almost the same,” read a part of Faddoul’s announcement.

While that may sound unremarkable, it implies that users who hit follow on a white person’s profile are more likely to be shown more white people over and over again, and that the appearance of the people a user already sees determines who they are shown next.

This would tend to lead them to follow another white individual from the recommendations, which not only shrinks the opportunity for black people and other minorities to gain traction on the platform but also creates a ripple effect in which people of a certain race or with a certain physical attribute are recommended more and more to a given user. It works both ways, with black people seeing more black people and white people seeing more white people, but those in the majority would have a bigger advantage on the platform and access to a larger audience.

The accusations of algorithmic censorship were striking, but they also seemed to be backed up by anecdotal accounts.

Cat Zhang from Pitchfork commented on this in a recent podcast, saying that it was hard to prove, “but there definitely have been a lot of black creators, LGBTQ creators who have talked about their posts getting taken down, or censored or something to that effect. They feel like it’s been ongoing to the point where it’s not just arbitrary. Like they actually feel like they’re being targeted.”

Zhang continues, “I interviewed Iman, she’s a big activist who’s been disseminating a lot of protest info. She says that basically, every black creator she knows has another TikTok account just in case their primary one gets banned or deactivated.

“And so black creators have long suspected that they have been primarily targeted for shadow banning, especially for posting about activist-related causes.”

In China, TikTok is known as Douyin and applies extensive censorship for all sorts of reasons, going as far as banning people for speaking Cantonese instead of Mandarin.

In the West, TikTok says that it doesn’t censor. But it has been caught doing so numerous times, most commonly censoring content that would irritate Beijing, such as references to Tiananmen Square, Tibet, and Hong Kong.

There have also been studies into how it shadowbans and suppresses content using various ranking factors.

“Basically, TikTok doesn’t tell you that anything is happening, but they just don’t promote your video, or maybe your followers don’t see it,” Zhang says. “So the view count is really low and it’s almost like the content really didn’t exist in the first place. Like you’re just kind of being siloed into your own world.”

And while TikTok has been found censoring anti-China content, it has also been accused of censoring content based on the user. For example, TikTok faced backlash this year for censoring content from transgender users, and a same-sex couple in India found that TikTok had deleted a video of them dancing with no explanation.

The stories are numerous and add weight to the idea that TikTok’s algorithms do censor based on the user, especially after a report found that TikTok moderators were told to suppress content from obese and disabled people. The policy was, oddly, based on the idea that if no one could see their content, they couldn’t be bullied.

In other words, it was supposed to be censorship for their own good.

The look-alike recommendation pattern described earlier is attributed to a technique known as “collaborative filtering,” in which a platform recommends accounts based on what users with similar behavior have already followed. With visibility being the number one factor that determines a creator’s success on any social media platform, collaborative filtering can become a huge hurdle for creators who aren’t similar to the majority.
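To make the idea concrete, here is a minimal sketch of user-based collaborative filtering. It is not TikTok’s actual system, whose details are not public; the `follows` data, the account names, and the `recommend` function are all hypothetical and exist only to show how “people who followed this also followed that” can keep a user inside one cluster of accounts.

```python
from collections import Counter

# Hypothetical toy data: the set of accounts each existing user follows.
# Users u1-u3 form one cluster of tastes, u4-u5 another.
follows = {
    "u1": {"a", "b", "c"},
    "u2": {"a", "b", "d"},
    "u3": {"a", "c", "d"},
    "u4": {"x", "y"},
    "u5": {"x", "y", "z"},
}


def recommend(followed, follows, k=3):
    """Suggest accounts followed by users whose follows overlap with `followed`."""
    scores = Counter()
    for other in follows.values():
        overlap = len(followed & other)  # how similar this user is to us
        if overlap == 0:
            continue  # users with nothing in common contribute nothing
        for account in other - followed:
            scores[account] += overlap
    # Highest score first; ties broken alphabetically so output is stable.
    ranked = sorted(scores, key=lambda account: (-scores[account], account))
    return ranked[:k]


print(recommend({"a"}, follows))
print(recommend({"x"}, follows))
```

In this toy data, a new user who follows only `a` is offered `b`, `c`, and `d`, and never sees `x`, `y`, or `z`. Nothing in the code looks at appearance or race; the separation comes entirely from who already follows whom, which is why existing patterns in the audience can be reinforced.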

That said, recent rumors suggest that TikTok isn’t long for the US market anyway and could soon be kicked out of the country over privacy and national security concerns, before it has a chance to add more transparency to its algorithms and address the accusations of censorship.