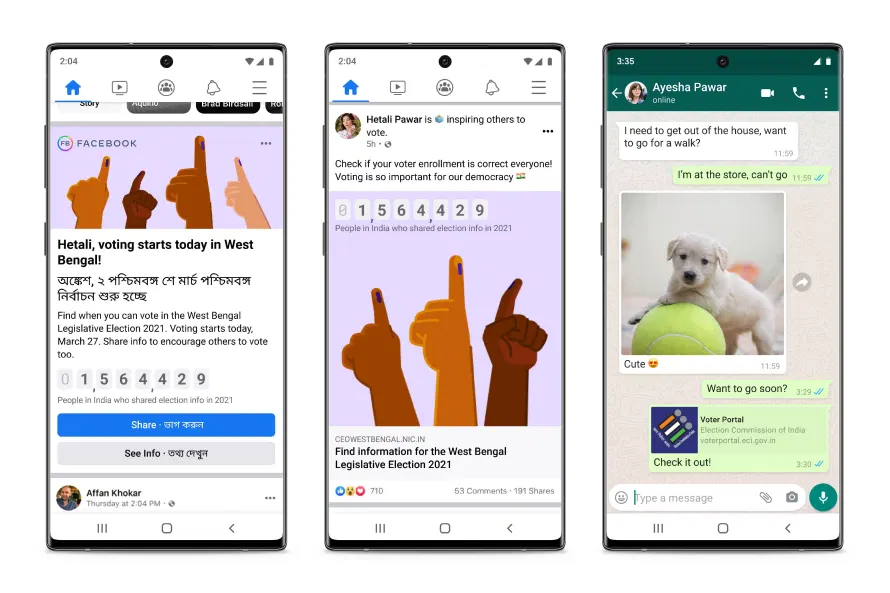

As several major Indian states head into elections, what role might a social media giant like Facebook play? To facilitate conversations among its 2.5+ billion users around the world, including across India, and to bring people together by letting them communicate with one another, as its “manifesto” would have it?

Well, not exactly. Not when it comes to India, the world’s “biggest democracy” in terms of the number of people ostensibly participating, and also Facebook’s largest market by number of users, not least thanks to the widespread use of the Facebook-owned WhatsApp messaging app.

Instead, Facebook has chosen to put combating “hate speech” and deciding what counts as “misinformation” at the forefront of its official agenda in India right now.

On March 30, the US tech behemoth issued a statement saying it recognizes that content marked as hate speech “could lead to imminent offline harm.”

This latest twist in the giant’s local policy comes after Facebook came under intense pressure from inside and outside India, from opposition circles and from media reports published by the likes of the Wall Street Journal, suggesting that the tech giant was aligning its moderation policies with the interests of the country’s ruling elites.

This naturally sparked outrage among opposition forces in the states concerned, who accused Facebook of bias against them.

In this particular case, the ruling party in question is Prime Minister Narendra Modi’s BJP, which is now facing challenges in Tamil Nadu, West Bengal, Assam, and Kerala, as well as in the union territory of Puducherry, not least because of the central government’s agricultural reform laws, which have sparked protests in India and an outcry from some political circles abroad.

Long story short: since this may prove to be a sensitive political moment in India, Facebook is opting for increased moderation, and outright censorship, as what must no doubt appear to be (and may in the long run prove to be) the most profitable route for its business to prosper in India.

Specifically, Facebook said it would clamp down on “problematic” content that might incite violence in these states and territories, using some very vague rules, such as a measure that “significantly reduces the distribution of content that our proactive detection technology identifies as likely hate speech or violence and incitement.”