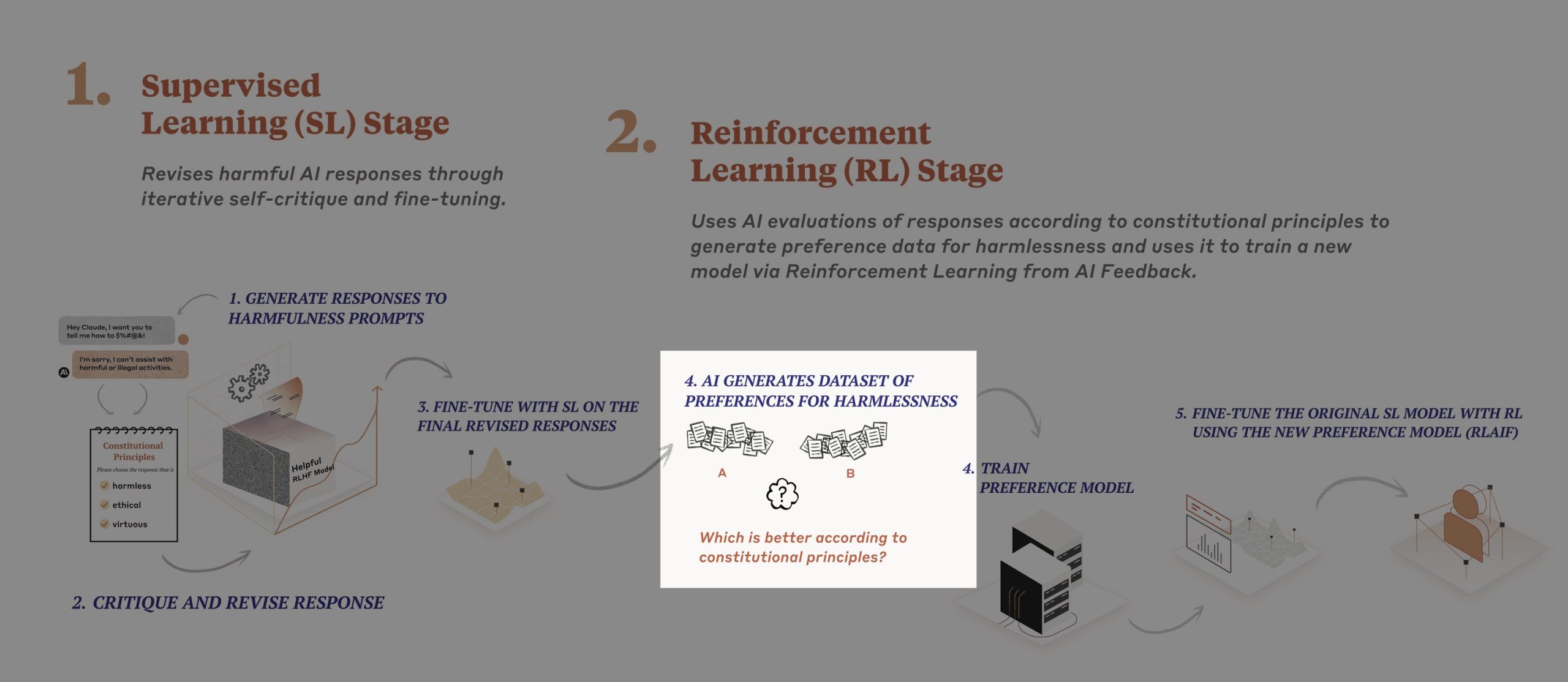

As concern grows about companies putting their thumb on the AI scale, with corporations and global entities using their own values to influence AI technology, an AI startup backed by Google’s parent company Alphabet has published the moral values that guide its ChatGPT rival Claude and are meant to make it “safe.” The startup, Anthropic, was founded by former executives of OpenAI, the Microsoft-backed AI startup behind ChatGPT.

The guidelines, which the startup calls Claude’s “constitution,” were derived from several sources, including Apple’s data privacy rules and the UN’s Universal Declaration of Human Rights.

Lawmakers, regulators, and industry experts have raised privacy and ethical concerns about AI, specifically generative AI like ChatGPT. Earlier this year, President Joe Biden called on tech companies to ensure their AI tools are “safe” before releasing them for public use.

Claude consults its constitution to respond appropriately to topics such as race and politics. The values in the constitution include choosing “the response that most discourages and opposes torture, slavery, cruelty, and inhuman or degrading treatment.”

The AI chatbot has also been instructed to choose the reply that is least likely to be offensive.