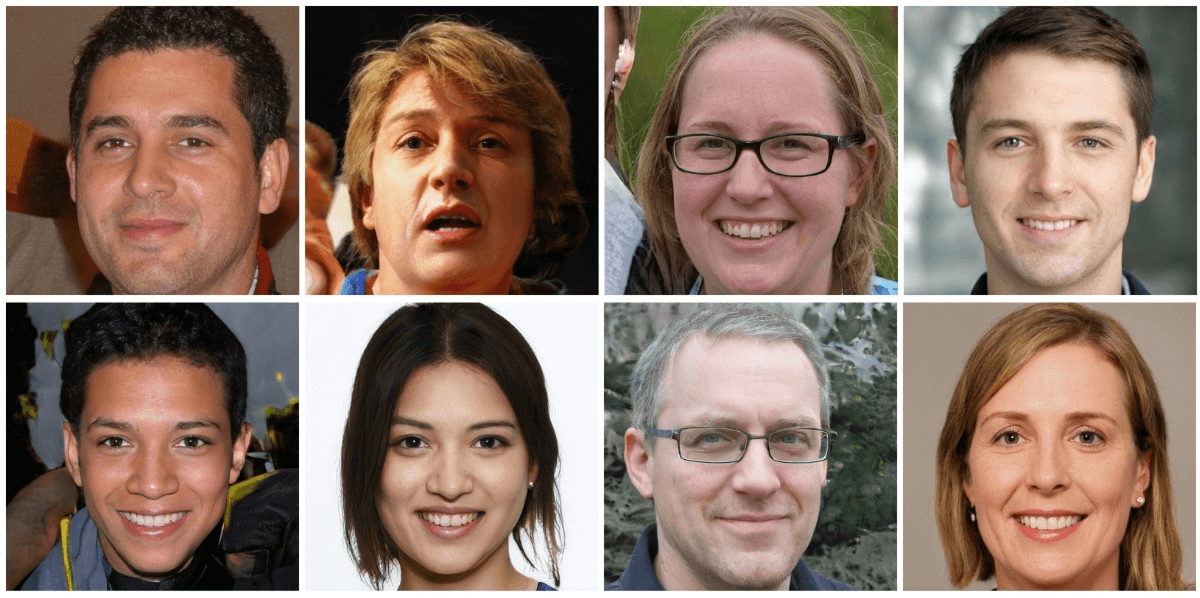

The ability of the average person to generate fake images with AI is nearly upon us, offering an interesting and perhaps frightening glimpse of the future. A new website, ThisPersonDoesNotExist.com, shows a random face of someone who doesn’t exist; the likeness is created on the fly by artificial intelligence.

The project was created by Philip Wang, a software engineer at Uber. It uses technology from Nvidia to create an effectively unlimited number of fake faces: a neural network is trained on a vast collection of real photographs, learns the features of those real faces, and uses them to produce an endless supply of fake ones.

With this web app, you can generate faces of people who never existed, effectively creating fictional characters out of thin air. The site is not, by itself, a path to some larger application or idea, but it is a striking demonstration of the novel things this technology can now do. Machines have gone from being unable to create anything on their own, wholly dependent on human commands, to being ‘coded’ to be creative; we’ve come a long way.

Wang wrote in a Facebook post:

“Each time you refresh the site, the network will generate a new facial image from scratch.”

The underlying technique is a generative adversarial network (GAN). Nvidia’s version of it, called StyleGAN, was recently made open source and has proven to be remarkably flexible.
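For readers curious what “from scratch” means here: in a GAN, generating an image typically means feeding a vector of random numbers (a “latent vector”) into a trained generator network, so each refresh can simply draw a new random vector. The toy Python sketch below illustrates only that sampling step, under loudly stated assumptions: its “generator” is a hypothetical single random linear layer standing in for StyleGAN’s trained deep network, its output is a short list of pixel values rather than a real face, and names such as `make_toy_generator` and `refresh` are invented for illustration.

```python
import math
import random

LATENT_DIM = 8     # hypothetical size; StyleGAN's actual latent vectors have 512 entries
IMAGE_PIXELS = 16  # hypothetical tiny "image": a flat list of pixel values


def make_toy_generator(seed=0):
    """Return a hypothetical toy 'generator': one fixed random linear layer plus tanh.

    A real GAN generator is a deep network whose weights are learned from
    photos of real people; here the weights are just random numbers, fixed
    once, standing in for trained parameters.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(IMAGE_PIXELS)]

    def generate(z):
        # Map the latent vector z to pixel values in the range [0, 1].
        pixels = []
        for row in weights:
            activation = sum(w * x for w, x in zip(row, z))
            pixels.append((math.tanh(activation) + 1) / 2)
        return pixels

    return generate


def refresh(generate, rng):
    """Simulate one page refresh: sample a fresh latent vector and render it."""
    z = [rng.gauss(0, 1) for _ in range(LATENT_DIM)]
    return generate(z)


if __name__ == "__main__":
    generate = make_toy_generator()
    rng = random.Random()
    first = refresh(generate, rng)
    second = refresh(generate, rng)
    print(first != second)  # almost surely True: each refresh draws a new z
```

Because the generator’s weights stay fixed while its random input changes, every refresh yields a different output; in the real system those weights are learned from photos of real people, which is why the outputs look like plausible faces.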

However, the same technology also has more notorious uses. One example is “deepfakes”, which use GANs and similar neural networks to paste real people’s faces onto the bodies in real videos, most notoriously to create non-consensual pornography.

The power to create and manipulate videos that look realistic but are completely fake will profoundly change the way we view video evidence, and distrust of such evidence is set to become far more widespread once awareness of what this technology can do reaches the mainstream.

The field of artificial intelligence has seen surprisingly rapid growth and progress. Despite its steep learning curve, more and more people are getting their hands dirty with AI and gaining solid knowledge of the field.

The past was different: at first, only a few people could get their hands on computers. After that came an era in which only a few could program and afford the luxury of experimenting with, and learning about, cutting-edge technology. Thanks to massive open online courses and widely accessible internet, a broad range of people can now access learning material on many tech topics.

With this surge of tech adopters and learners, major developments in AI now happen frequently. Previously, only a small elite had access to the knowledge and the luxury of experimenting with artificial intelligence. Today, sites such as Kaggle and DataCamp, which teach data science and, from there, AI, make it easy to learn and experiment with this technology.

At the same time, a growing number of people are contributing to FOSS (free and open-source software). As a result, accessing libraries and publishing new products and implementations has become even easier.

AI has gone from fancy, intricate experiments run by a few specialists to simple projects that high school students can build; we’ve come a long way.