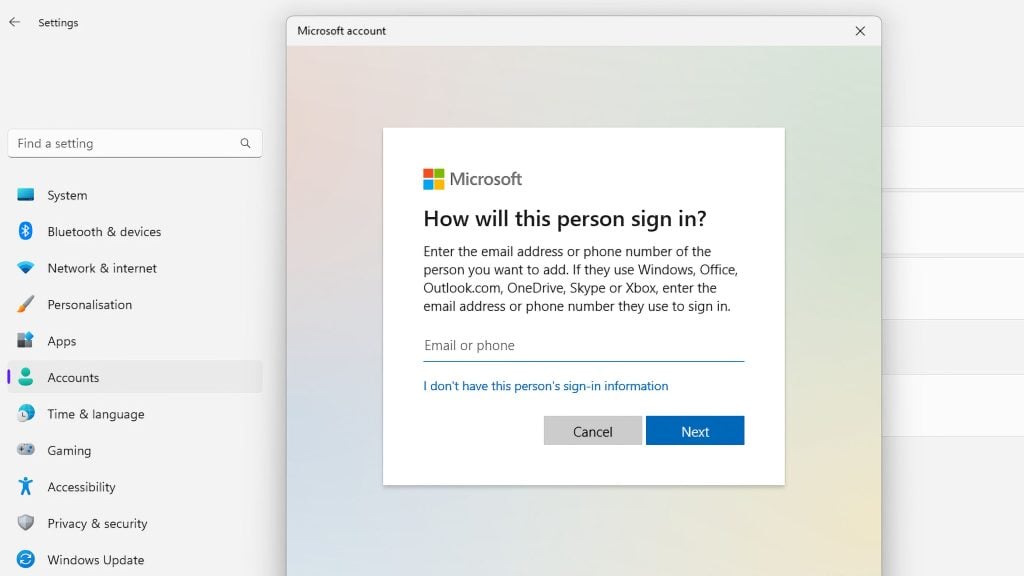

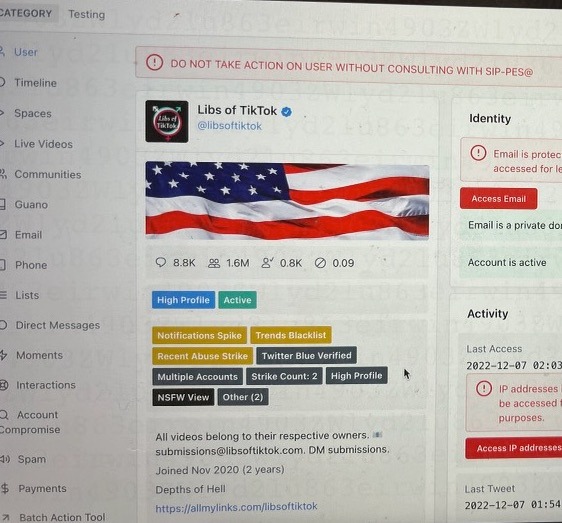

The second batch of “Twitter Files” revealed that the popular account Libs of TikTok was suspended despite executives acknowledging that the account had not violated any policies. The account was flagged with a large label that read: “DO NOT TAKE ACTION ON USER WITHOUT CONSULTING WITH SIP-PES.”

The Free Press founder Bari Weiss, who published the files, explained that SIP-PES was short for “Site Integrity Policy, Policy Escalation Support.” The group, which made the “most politically sensitive decisions,” consisted of head of trust and safety Yoel Roth; head of legal, policy, and trust Vijaya Gadde; and CEOs Jack Dorsey and Parag Agrawal.

Libs of TikTok, which was suspended six times in 2022 alone, was told that it was suspended for violating the policy on “hateful conduct.” However, internal SIP-PES documents revealed that the account “has not directly engaged in behavior violative of the Hateful Conduct policy.”

SIP-PES said that Libs of TikTok should receive a “timeout” for “indirectly violating” the hateful conduct policy by “targeting individuals/allies/supporters of the LGBTQIA+ community.” The group noted that the account had not posted anything “explicitly violative” to justify a permanent suspension.

The person behind Libs of TikTok, Chaya Raichik, had remained anonymous until she was doxxed by the Washington Post’s Taylor Lorenz. Weiss noted that Twitter did not take any action against the tweet that contained a photo of Raichik’s home with her address visible. The tweet is still up.

“When Raichik told Twitter that her address had been disseminated she says Twitter Support responded with this message: ‘We reviewed the reported content, and didn’t find it to be in violation of the Twitter rules,’” Weiss wrote.

Twitter, which has a policy against posting personal information, said that the tweet was not in violation of any of its policies.

Following Weiss’ revelations, Libs of TikTok tweeted: “Going to sleep tonight feeling entirely vindicated. It was infuriating getting suspension after suspension knowing I never violated any Twitter rules. This is the greatest gift.”

She was later interviewed by Fox News’ Tucker Carlson.

“Absolutely,” Raichik answered when Carlson asked whether she had ever sensed that she was being censored, noting that her popular tweets never appeared in the trending section.

“There were sometimes days or weeks at a time where I felt like my tweets were getting much less engagement than usual, than they should, and I think now it’s clear that there was suppression and there was shadow-banning,” she added.

Carlson noted that most of her content was previously reported material that she posted without commentary. Raichik said she was censored because the establishment did not want the public to see such content, as it made them look bad.

“I was suspended for probably a month altogether for what? For not even violating their policies,” she said.

Weiss revealed how Twitter employees admitted to using technicalities to censor tweets and topics.

Here’s Yoel Roth, Twitter’s then-Global Head of Trust and Safety, in a direct message to a colleague in early 2021:

“A lot of times, SI has used technicality spam enforcements as a way to solve a problem created by Safety under-enforcing their policies. Which, again, isn’t a problem per se – but it keeps us from addressing the root cause of the issue, which is that our Safety policies need some attention.”

“Six days later, in a direct message with an employee on the Health, Misinformation, Privacy, and Identity research team, Roth requested more research to support expanding ‘non-removal policy interventions like disabling engagements and deamplification/visibility filtering,’” Weiss wrote.

Roth:

“One of the biggest areas I’d *love* research support on is re: non-removal policy interventions like disabling engagements and deamplification/visibility filtering. The hypothesis underlying much of what we’ve implemented is that if exposure to, e.g., misinformation directly causes harm, we should use remediations that reduce exposure, and limiting the spread/virality of content is a good way to do that (by just reducing prevalence overall). We got Jack on board with implementing this for civic integrity in the near term, but we’re going to need to make a more robust case to get this into our repertoire of policy remediations – especially for other policy domains. So I’d love research’s POV on that.”