Microsoft has launched a new AI-powered censorship tool to detect inappropriate content in text and images. Dubbed “Azure AI Content Safety,” the tool has been trained to understand several languages: English, French, Spanish, Italian, Portuguese, Chinese, and Japanese.

The tool assigns flagged content a severity score from one to a hundred, which helps moderators decide which content to address first.
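
As a rough illustration of how a moderation queue might use such a score, the sketch below sorts flagged items so the most severe come first. It does not call Microsoft's service: the `FlaggedItem` type, the `triage` function, and the cutoff of 50 are all hypothetical, and the 1-to-100 range is taken from the description above.

```python
from dataclasses import dataclass


@dataclass
class FlaggedItem:
    """Hypothetical record for one piece of flagged content."""
    item_id: str
    severity: int  # assumed range: 1 (mild) to 100 (most severe)


def triage(items, review_threshold=50):
    """Return items at or above the threshold, most severe first.

    The threshold is an illustrative assumption, not a documented default.
    """
    urgent = [item for item in items if item.severity >= review_threshold]
    return sorted(urgent, key=lambda item: item.severity, reverse=True)


queue = [
    FlaggedItem("post-1", 12),
    FlaggedItem("post-2", 87),
    FlaggedItem("post-3", 55),
]
for item in triage(queue):
    print(item.item_id, item.severity)
```

A platform would pick its own cutoff, and might route items below it to automated handling rather than human review.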

During a demonstration at Microsoft’s Build conference, Microsoft’s head of responsible AI, Sarah Bird, explained that Azure AI Content Safety is a commercialized version of the system powering the Bing chatbot and GitHub’s AI-powered code generator, Copilot. Pricing for the new tool starts at $0.75 per 1,000 texts and $1.50 per 1,000 images.
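
To put those starting prices in perspective, here is a back-of-the-envelope estimate in Python. It assumes billing scales linearly with volume at the quoted rates, with no free tier, volume discount, or rounding to whole blocks of 1,000; the function name `estimate_cost` and the example volumes are made up for illustration.

```python
from decimal import Decimal

# Starting prices quoted in the article (US dollars per 1,000 items).
TEXT_PRICE_PER_1000 = Decimal("0.75")
IMAGE_PRICE_PER_1000 = Decimal("1.50")


def estimate_cost(num_texts, num_images):
    """Estimate cost in dollars, assuming simple linear pricing."""
    total_per_1000 = (
        Decimal(num_texts) * TEXT_PRICE_PER_1000
        + Decimal(num_images) * IMAGE_PRICE_PER_1000
    )
    return total_per_1000 / 1000


# Hypothetical monthly volume: 200,000 texts and 50,000 images.
print(estimate_cost(200_000, 50_000))
```

Under these assumptions, the texts would cost $150 and the images $75, for a total of $225.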

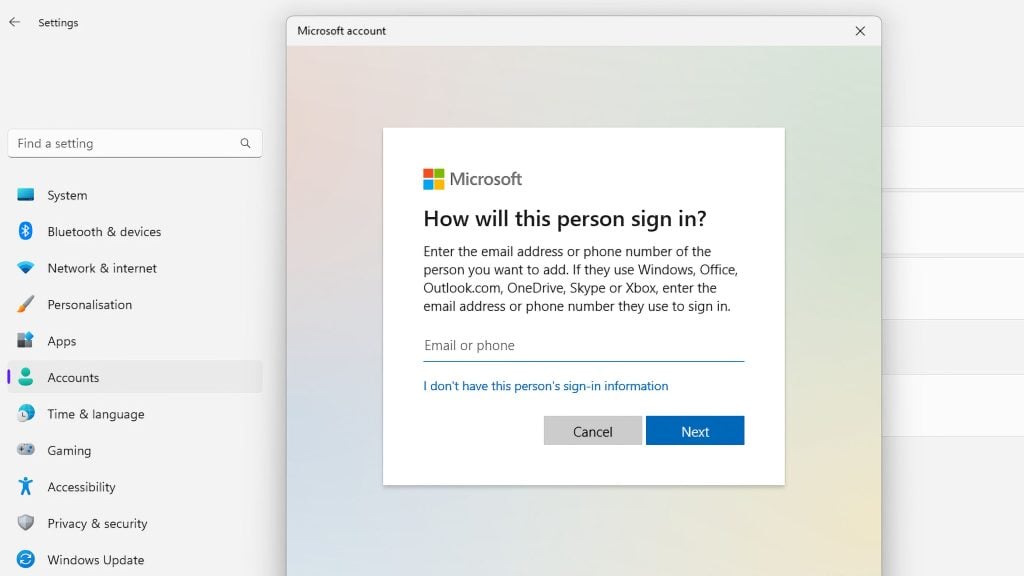

Microsoft’s aim is to let developers integrate the tool into their own platforms.

“We’re now launching it as a product that third-party customers can use,” Bird said in a statement.

In a statement to TechCrunch, a spokesperson for Microsoft said: “Microsoft has been working on solutions in response to the challenge of harmful content appearing in online communities for over two years. We recognized that existing systems weren’t effectively taking into account context or able to work in multiple languages.

“New [AI] models are able to understand content and cultural context so much better. They are multilingual from the start … and they provide clear and understandable explanations, allowing users to understand why content was flagged or removed.”

Microsoft claims that the new tool has been trained to understand context.