The Cybersecurity and Infrastructure Security Agency (CISA), a part of the US Department of Homeland Security (DHS), all of a sudden wants deepfakes (which have existed for decades in entertainment and other industries) to be tightly regulated.

Tech companies (Google, Meta, TikTok, OpenAI) “voluntarily identifying and labeling” such content on their social platforms and elsewhere won’t cut it now. This is all presented as a segment of the larger AI “conversation” (such as AI is, at this point), a conversation that revolves around how to make sure the authorities control the technology strictly.

Agencies and groups now thoroughly exposed as multi-year drivers of online censorship see value in zeroing in on deepfakes (and AI). For one thing, it gives them the ability to interpret this content (even if it’s parody or satire) as political “disinformation.”

And if the election doesn’t go their way, they can always contest it claiming it was substantially influenced by such “disinformation.”

That’s why – rather than believing that any regulation “with real teeth” will make it through the legislative process any time soon – it’s important for entities like CISA to keep the topic alive in the media.

At the same time, although this pressure on social platforms and the demands for better “labeling” (i.e., censorship) clearly come from inside the US, those behind the narrative never miss the chance to claim that stricter rules, all the way up to new laws, are needed to “keep the tech from being used by other countries to try to influence the US election.”

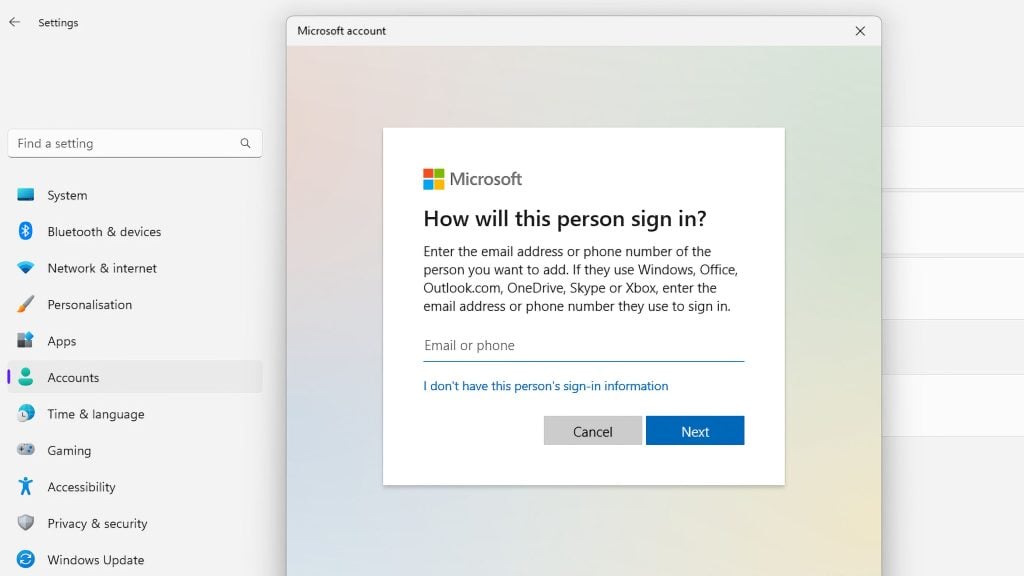

Most of this came up directly or indirectly as CISA Director Jen Easterly spoke at a Washington Post-organized event, insisting that platforms essentially “self-censoring” through the way they currently label AI-generated content is not enough.

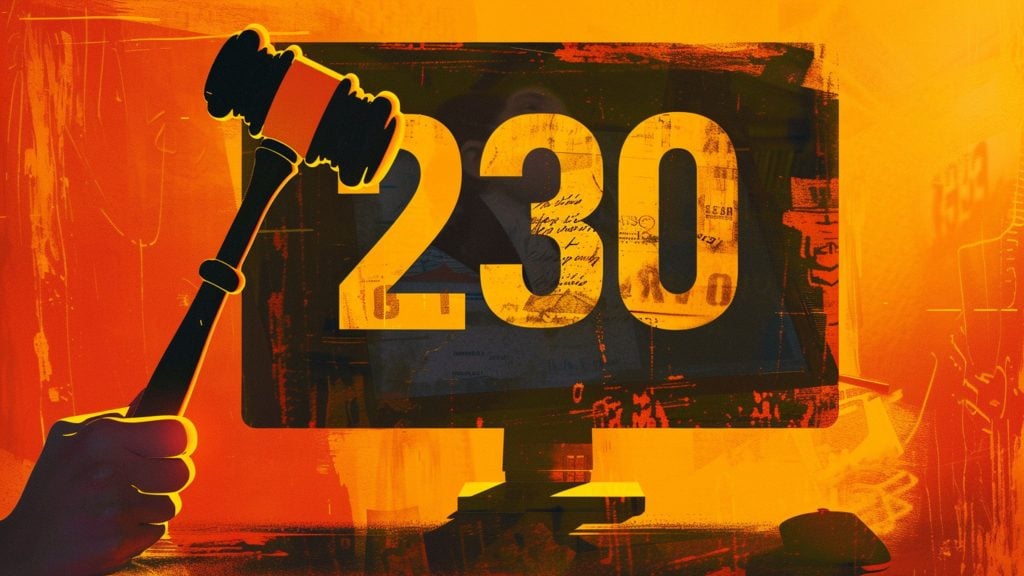

“There needs to be a set of rules in place, ultimately legislation,” she said.

These comments come as the Washington Post remarks that although there have been initiatives to regulate the use of AI in the US, none of them have so far panned out.

Proponents of such policies have high hopes for the EU and its controversial AI Act, which was passed this year, but even that will not be fully implemented as law for another two years.