California Governor Gavin Newsom has vetoed an artificial intelligence “safety” bill, not because of the threat it poses to free speech (and it does pose one), but because he believes the proposed regulations do not go far enough in their current form.

The bill, SB 1047, was designed to regulate large-scale AI models by imposing stringent requirements on their development and deployment. However, Newsom criticized the bill for focusing only on the largest and most expensive AI models, neglecting what he sees as the varied risks posed by smaller AI systems used in critical sectors such as healthcare and energy management.

This legislative effort, which was poised to set a precedent for AI regulation across the nation, drew intense scrutiny and debate. Because California is a tech hub, the bill’s implications could have extended well beyond state lines, influencing nationwide policy at a time when federal regulation remains stalled, and would have given California control over which AI tools are released, all in the name of “safety.”

The bill would have imposed heavy government control over AI development, mandating extensive protocols approved by the attorney general, obligating developers to report “safety” incidents, and requiring them to demonstrate compliance annually. Critics feared that such stringent rules would push developers to self-censor to avoid legal consequences.

Yet despite the bill’s potential to establish a regulatory framework, the governor vetoed it, signaling his intention to work with AI experts on more comprehensive and stricter legislation.

In his veto message, Governor Newsom argued that the bill’s focus was too narrow: it targeted only the largest AI models without considering whether they were actually deployed in high-stakes or low-risk environments.

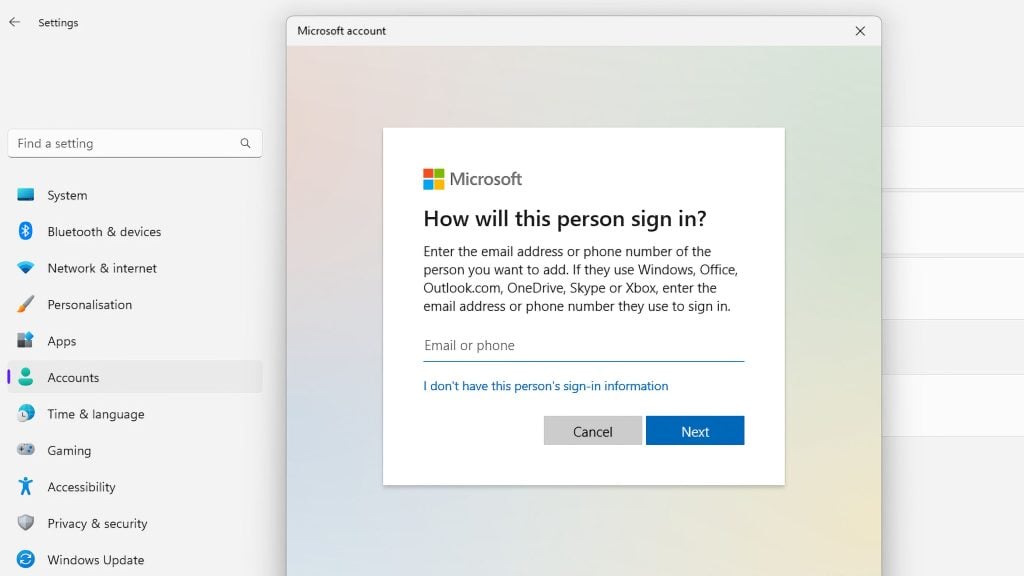

We obtained a copy of Governor Newsom’s letter for you here.

“While well-intentioned, SB 1047 does not take into account whether an AI system is deployed in high-risk environments, involves critical decision-making or the use of sensitive data,” Newsom wrote.

“Instead, the bill applies stringent standards to even the most basic functions — so long as a large system deploys it. I do not believe this is the best approach to protecting the public from real threats posed by the technology.”