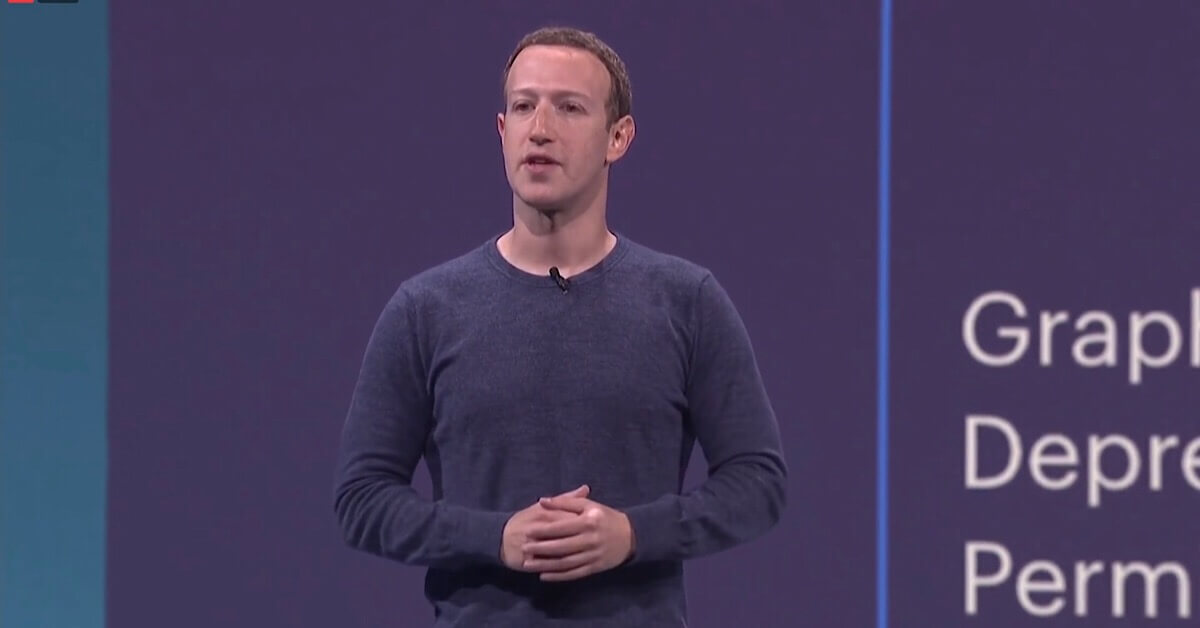

Since the mass shootings in New Zealand, Facebook has repeatedly indicated that it would take a more proactive approach to policing "hate" on its platforms. Now Facebook CEO Mark Zuckerberg has confirmed that the company is investing heavily in AI (artificial intelligence) systems and now has 30,000 people doing content and security review to police so-called "harmful content and hate speech."

Zuckerberg made the statements in an interview with ABC News, in which he said:

> Certainly, though, this is why I care so much about issues like policing harmful content and hate speech, right? I don’t want our work to be something that gets towards amplifying really negative stereotypes or promoting hate. So that’s why we’re investing so much in building up these AI systems. Now, we have 30,000 people who are doing content and security review to do as best of a job as we can of proactively identifying and removing that kind of harmful content.

These latest comments from Zuckerberg suggest the company may have doubled the size of its moderation workforce in just over three months, although his figure covers both content and security review. In December 2018, the New York Times reported that Facebook had hired a total of 15,000 content moderators.

In the last month, Facebook has also said that it is starting to use audio-matching AI technology and is considering placing restrictions on who can go live in order to control "hate" on its platforms.

However, even as Facebook continues to step up its efforts to police "hate" on its platforms, it never defines what "hate" actually is.