Microsoft has launched an AI service that can help monitor social distancing. The technology is impressive and has uses beyond calculating the distance between people, but it also raises privacy and ethical concerns in this new age of coronavirus surveillance.

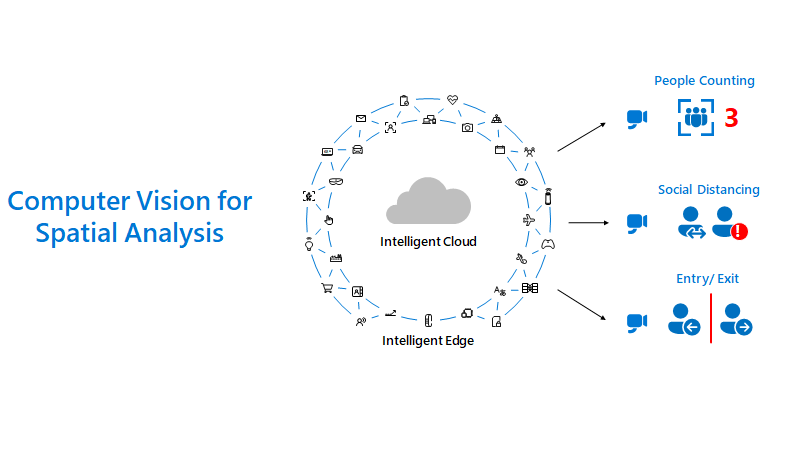

At its virtual Ignite event, Microsoft launched Spatial Analysis, part of Redmond’s Cognitive Services: a suite of machine learning services intended for everyday use that do not require deep knowledge of machine learning or data science.

The AI is intended to help video surveillance systems identify when people are standing close together.

“Spatial Analysis is an advanced machine-learning model that aggregates data from multiple camera feeds,” said Bharat Sandhu, Microsoft’s Azure AI director of product marketing.

The company explained that Spatial Analysis could count the number of people in a room and measure the distance between them. It can also calculate wait times to show how long people spend in queues. Another possible application is measuring the effectiveness of billboard ads by tracking how long people look at them.

The company classifies the technology as a toolset for “people analytics,” an unnerving phrase in a world where privacy is a major concern and civil liberties are often low on the agenda.

Spatial Analysis is a “gated service”: customers must provide a “valid business scenario,” or Microsoft will not approve their access.

“Responsible AI practices and guidelines for safe use are infused into Azure AI’s services, such as Spatial Analysis, to ensure personal privacy, transparency, and trust,” said Eric Boyd, corporate vice president of the Azure AI Platform.

That said, can we trust a profit-driven company to vet its customers strictly?