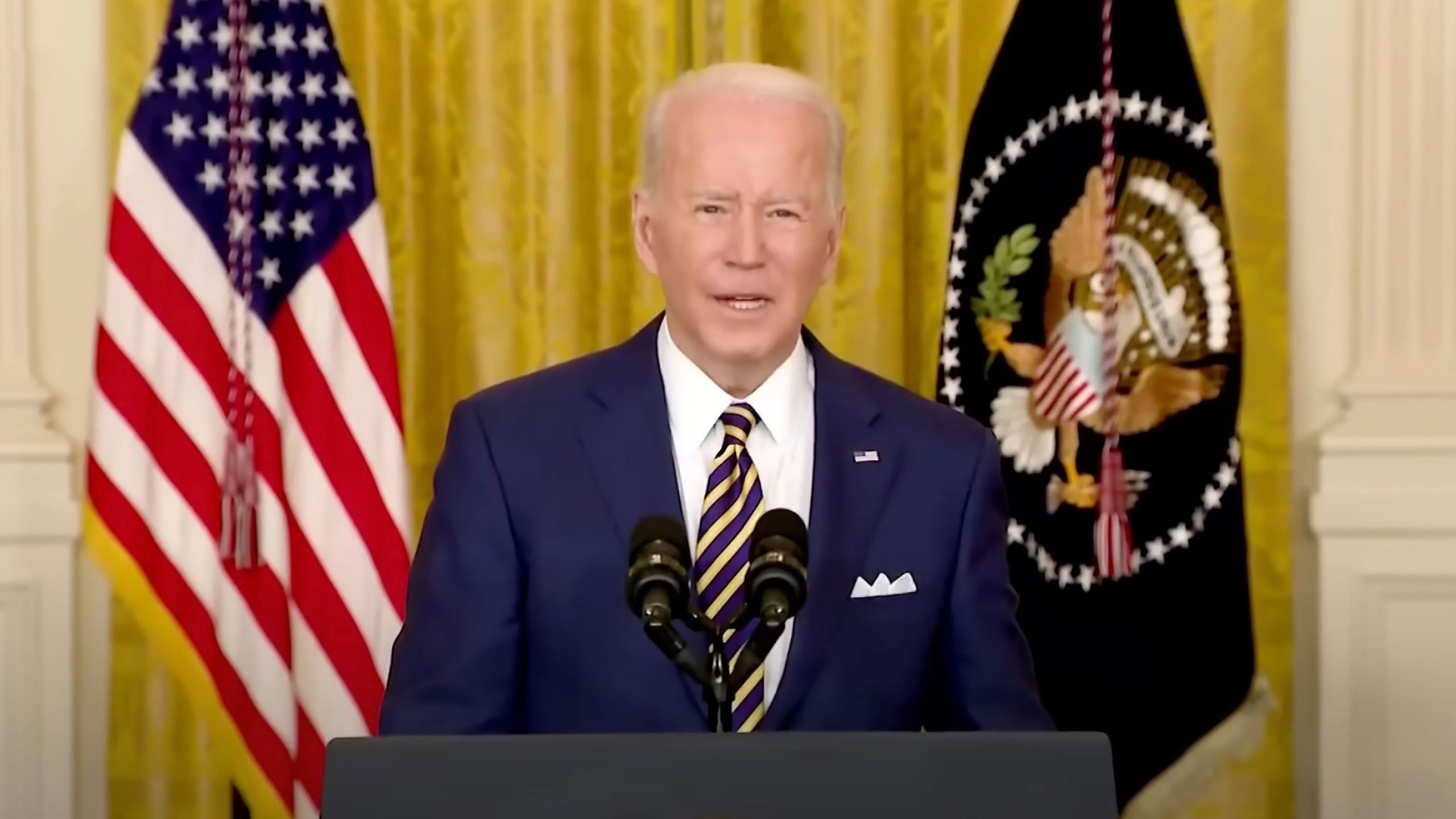

The Biden administration is pushing for sweeping measures to combat the proliferation of nonconsensual sexual AI-generated images, including controversial proposals that could lead to extensive on-device surveillance and control of the types of images generated. In a White House press release, President Joe Biden’s administration outlined demands for the tech industry and financial institutions to curb the creation and distribution of abusive sexual images made with artificial intelligence (AI).

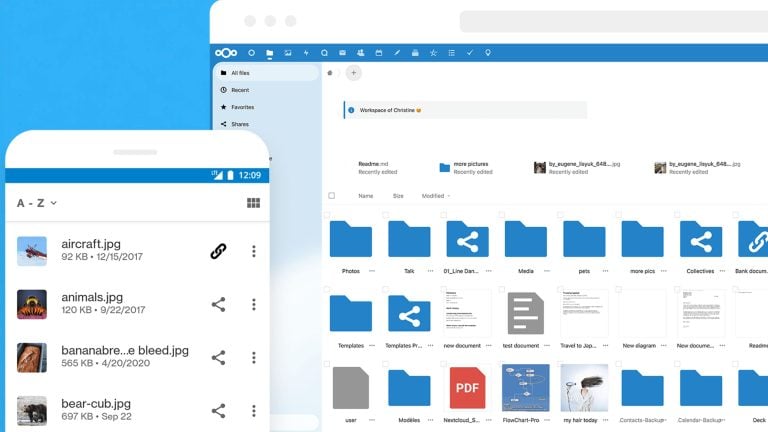

A key focus of these measures is the use of on-device technology to prevent the sharing of nonconsensual sexual images. The administration stated that “mobile operating system developers could enable technical protections to better protect content stored on digital devices and to prevent image sharing without consent.”

This proposal implies that mobile operating systems would need to scan and analyze images directly on users’ devices to determine whether they are sexual or nonconsensual. Such surveillance raises significant privacy concerns, as it involves monitoring and analyzing private content stored on personal devices.

Additionally, the administration is calling on mobile app stores to “commit to instituting requirements for app developers to prevent the creation of non-consensual images.” This broad mandate could require a wide range of apps, including image editing and drawing apps, to scan and monitor user activity on devices, analyze the art users are creating, and block the creation of certain kinds of content. Once on-device monitoring becomes normalized, this scrutiny could extend beyond its initial purpose, potentially leading to censorship of other types of content that the administration finds objectionable.

The administration’s call to action extends to various sectors, including AI developers, payment processors, financial institutions, cloud computing providers, search engines, and mobile app store gatekeepers like Apple and Google. By encouraging cooperation from these entities, the White House hopes to curb the creation, spread, and monetization of nonconsensual AI images.

The initiative builds on previous efforts, such as the voluntary commitments secured by the Biden administration from major technology companies like Amazon, Google, Meta, and Microsoft to implement safeguards on new AI systems. Despite these measures, the administration acknowledges the need for legislative action to enforce these safeguards comprehensively.

The administration’s proposals raise significant questions about privacy and the potential for mission creep. The call for on-device surveillance to detect and prevent the sharing of nonconsensual sexual images means that personal photos and content would be subject to continuous monitoring and analysis. People have been able to generate images on their devices for decades using Photoshop and other tools, but the recent evolution of AI is now being used to justify calls for surveillance of the content people create.

This could set a precedent for more extensive and intrusive forms of digital content scanning, leading to broader applications beyond the original intent.