Arunesh Mathur, a Princeton graduate student who focuses on information privacy and online manipulation, has shared on Twitter an interesting discovery that appears to be right in his wheelhouse.

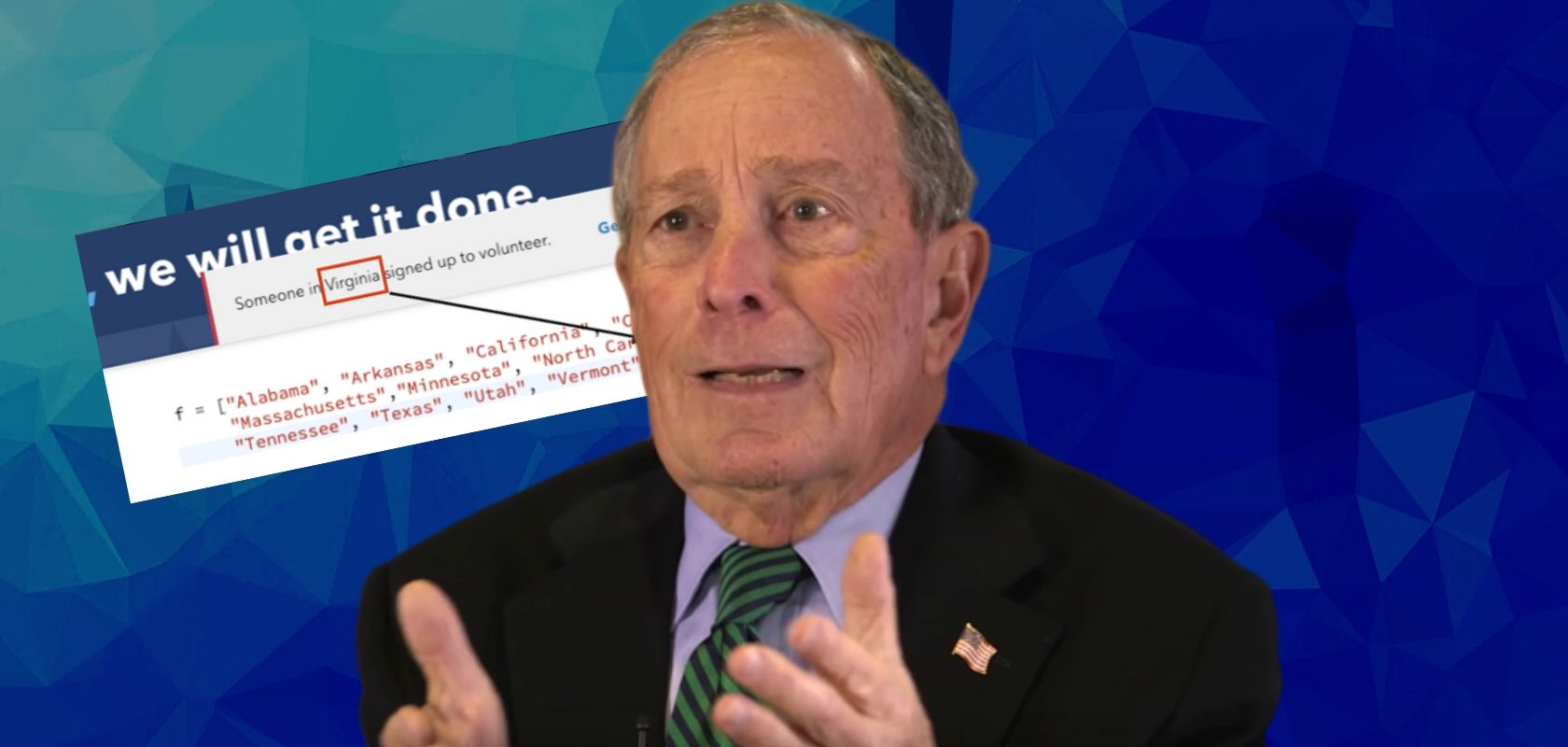

In a tweet posted on Thursday, Mathur says that the website of Democratic presidential hopeful Mike Bloomberg is treating its visitors to periodic pop-up messages informing them that “people in certain states are signing up as volunteers.”

Does this mean that the billionaire’s campaign is displaying real-time updates based on genuine data, i.e., new volunteer sign-ups?

Mathur – who inspected the page and got some screenshots to prove it – says no.

The website was instead simply using some JavaScript code to generate these false and deceptive messages.
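To illustrate how little is needed to fake such a notification, here is a minimal sketch of the general technique. It is not the campaign's actual script, which is not reproduced in this article; the state list, the message wording, and the names `STATES`, `fakeSignupMessage` and `showPopup` are all assumptions made for illustration.

```javascript
// Hypothetical sketch: none of these names or strings come from the real script.
const STATES = ["Ohio", "Texas", "Florida", "Michigan"];

// Build a "sign-up" message from a randomly chosen state.
// No server data is consulted; `rand` is injectable so the output can be checked.
function fakeSignupMessage(rand = Math.random) {
  const state = STATES[Math.floor(rand() * STATES.length)];
  return `Someone in ${state} just signed up to volunteer`;
}

// In a browser, a timer could surface a new message every few seconds, e.g.:
// setInterval(() => showPopup(fakeSignupMessage()), 8000); // showPopup is hypothetical
```

The point of the sketch is that nothing in it touches real sign-up data: every message is assembled in the visitor's browser from a hard-coded list.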

It didn’t take long for the Bloomberg campaign to react once the discovery was made public: in a separate tweet, Mathur said the script was now gone from the website.

“Maybe that’s what happens when a >1000 people learn about your deceptive messages in the last 12 hours?,” the researcher wondered.

And although the messages are now gone, it’s worth considering why they were there in the first place, and what impact the campaign, which has already spent $300 million (some say mostly on attempts to buy influence online), was hoping to achieve.

Mathur says the falsely generated pop-up messages are a form of what’s known as a “social proof nudge”: a way to manipulate people into copying the actions of others, which they perceive as the norm.

In other words, when uncertain what action or stance to take, people will often follow the example of the crowd. Hence the importance of displaying the follower, like and upvote counts on social media, for example.

Mathur notes that the “social proof nudge” method emerged from experiments meant to push hotel guests to reuse their towels by exposing them to appeals containing “descriptive social norms.”

Later, marketers picked up the technique, followed by social media sites, neither of which shies away from displaying fabricated “social proof nudge” messages to get customers to buy things they don’t really need, or to keep using social networks they don’t really like.

Mathur says that these dark patterns are now seeping into the realm of “high-stakes domains like politics where we can no longer afford to be manipulated as easily.”