The EU elections are less than a month away, so all the mainstream social media sites are ramping up their efforts to clamp down on so-called “misinformation.” Yesterday Twitter introduced some changes that allow users to report “misleading” election tweets, a move that’s likely to be problematic because anyone who doesn’t agree with a political tweet will be able to report it as “misleading.” Now Facebook is following in Twitter’s footsteps and has introduced a series of changes to help stop the spread of so-called “misinformation” in the run-up to the EU elections on May 23.

These new changes from Facebook are even more problematic than the changes Twitter is making. The changes will downrank or remove content that’s labeled as “misinformation” while promoting content that’s deemed to be trustworthy. However, the methods that are being used to identify what’s trustworthy and what’s “misinformation” are unreliable and often lead to more misleading information being spread while truthful information is suppressed.

Here’s a summary of the changes Facebook announced along with examples of how changes like these almost always create more misinformation:

1. New local fact-checking partners

Facebook is expanding its third-party fact-checking program and introducing five new local fact checkers in different EU countries. This comes just days after it was revealed that Facebook is finding it impossible to police content because of the sheer number of languages its users post in. By expanding its fact-checking efforts in an area where it’s already struggling to moderate content accurately, Facebook is likely to cause more confusion and spread more misinformation.

Additionally, third-party fact checkers are often inconsistent and guilty of spreading the very misinformation they’re meant to be stopping. For example, NewsGuard, a third-party fact-checking tool, often spreads misleading information through its ratings system, and this includes information related to the EU elections.

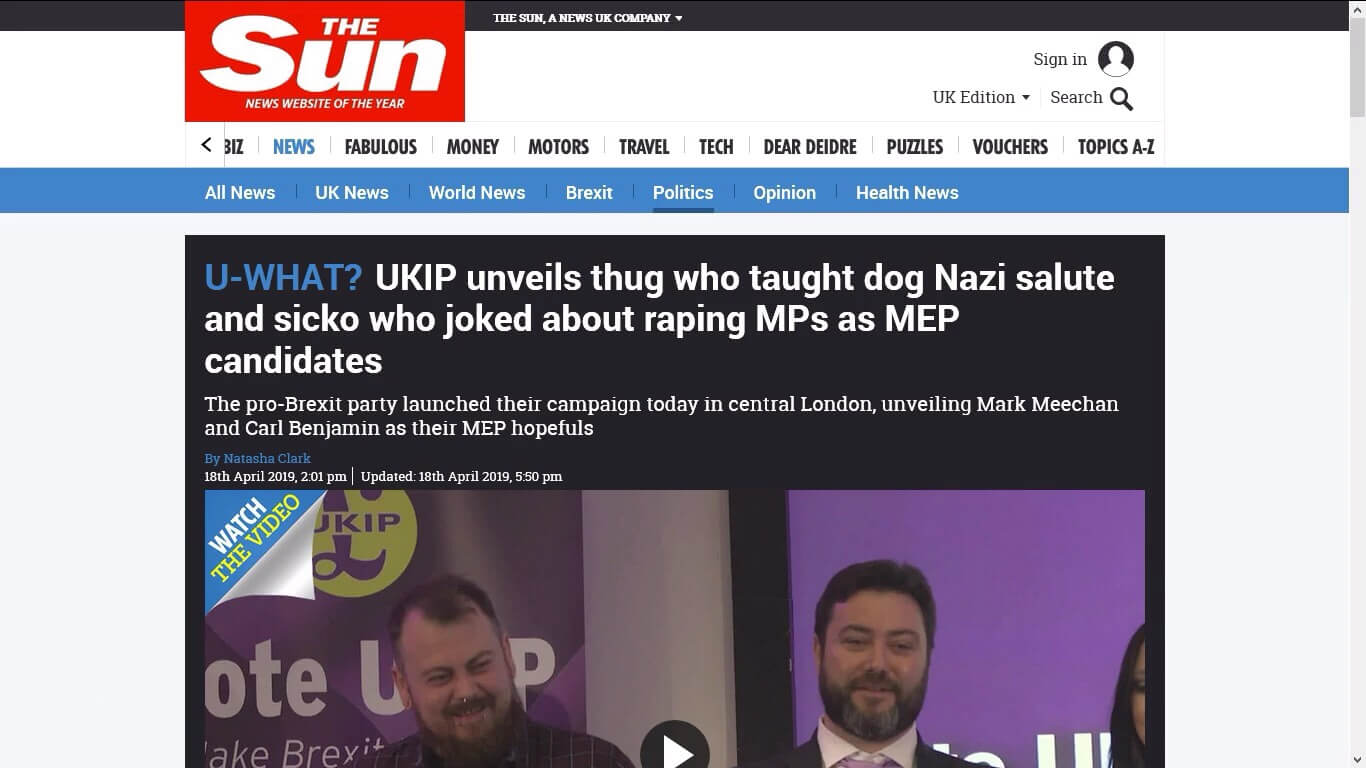

If we look at The Sun’s coverage of Carl Benjamin announcing his UKIP (UK Independence Party) MEP (Member of the European Parliament) campaign, we can see the headline, “UKIP unveils thug who taught dog Nazi salute and sicko who joked about raping MPs as MEP candidates.”

The “sicko who joked about raping MPs” is a reference to Benjamin and an offensive joke he made three years before he became a UKIP MEP candidate. The joke has nothing to do with his political candidacy, and the way The Sun frames it is quite dishonest. Despite this dishonest framing, The Sun is rated “green” by NewsGuard, which, according to its rating system, means “it generally adheres to basic standards of credibility and transparency.”

If we look at Breitbart’s coverage of Benjamin’s UKIP MEP candidacy, we can see the headline, “Carl ‘Sargon of Akkad’ Benjamin Named UKIP Candidate for EU Elections.”

This is a fairer, more objective, and more honest representation of the news, yet Breitbart is rated “red” by NewsGuard. According to NewsGuard’s rating system, this means “it generally fails to meet basic standards of credibility and transparency.”

While NewsGuard isn’t one of Facebook’s fact-checking partners, its ratings illustrate a problem that affects all third-party fact-checking services: they cause confusion, sometimes rating misleading information as trustworthy while rating less biased information as untrustworthy. Biased or dishonest political articles are often boosted by the fact checkers, whereas more honest articles about political candidates are often demoted in the algorithm or even deleted from Facebook.

2. Click-Gap signal

Facebook positions the Click-Gap signal as an algorithm that identifies whether a website is producing low-quality content. However, the algorithm works by assuming that sites with lots of inbound links are authoritative, whereas sites with fewer inbound links are less trustworthy. If a site with few inbound links starts to get lots of clicks on Facebook, the Click-Gap signal treats this as an indicator that the website is producing low-quality content and may then downrank or delete the content.

The problem with this Click-Gap algorithm is that mainstream media sites usually have lots of inbound links because they have the scale and the resources to produce lots of content. Their size and scale don’t make them inherently more reliable, but they do allow them to benefit from the Click-Gap algorithm.

Smaller independent sites don’t usually have the resources to produce as much content, so they often have fewer inbound links. However, if they produce trustworthy and truthful content that gets lots of clicks on Facebook, they’re punished by the Click-Gap algorithm, and their content can potentially be demoted or deleted.
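To make this concern concrete, here is a toy Python model of a click-gap style signal as described in this article. Facebook has not published how Click-Gap is actually calculated, so the ratio used here (a site’s share of Facebook clicks divided by its share of inbound links), the `Site` fields, the threshold of 3.0, and the two example domains are all hypothetical assumptions chosen purely for illustration.

```python
# A toy model of a "click-gap" style signal, based only on this article's
# description. The formula, field names, threshold, and example sites are
# hypothetical; Facebook has not published how Click-Gap is computed.
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    inbound_links: int    # links pointing to the site from across the web
    facebook_clicks: int  # clicks the site's links get on Facebook


def click_gap(site: Site, total_links: int, total_clicks: int) -> float:
    """Return the site's share of Facebook clicks divided by its share of inbound links.

    A value far above 1 means the site is much more popular on Facebook
    than its inbound-link profile would suggest.
    """
    if site.inbound_links == 0:
        return float("inf")  # no inbound links at all: the gap is unbounded
    link_share = site.inbound_links / total_links
    click_share = site.facebook_clicks / total_clicks
    return click_share / link_share


def flag_for_demotion(sites: list[Site], threshold: float = 3.0) -> list[str]:
    """Return the names of sites whose click gap exceeds the (assumed) threshold."""
    total_links = sum(s.inbound_links for s in sites)
    total_clicks = sum(s.facebook_clicks for s in sites)
    return [s.name for s in sites
            if click_gap(s, total_links, total_clicks) > threshold]


sites = [
    Site("big-outlet.example", inbound_links=90_000, facebook_clicks=500_000),
    Site("small-independent.example", inbound_links=1_000, facebook_clicks=100_000),
]
print(flag_for_demotion(sites))  # ['small-independent.example']
```

Nothing in this toy calculation looks at whether either site’s content is accurate. The small site is flagged purely because its Facebook clicks outpace its inbound links, while the large site’s gap stays below 1 no matter what it publishes.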

This becomes even more problematic if mainstream media sites decide to smear a political candidate and smaller independent sites report on the candidate truthfully. This results in the Click-Gap algorithm suppressing truthful election information and boosting misleading election information.

While Facebook positions this Click-Gap algorithm as a tool to prevent misinformation, it’s really just a tool that boosts the content of larger sites with lots of links, regardless of how truthful that content is.

3. Context button

The context button appears below posts from pages that Facebook has deemed to have a history of sharing misinformation. It provides more context and information on the article and links to external sources such as Wikipedia. While Facebook positions this context button as a tool for preventing the spread of misinformation, it actually creates more misinformation.

First, it uses Wikipedia as one of its sources of additional context. There have been numerous reports that Wikipedia is politically biased, that Wikipedia pages are manipulated by paid contributors, and that Wikipedia spreads fake news, so these Wikipedia links are likely to spread more misinformation.

Second, as we’ve already highlighted, third-party fact checkers often spread misinformation yet they get to decide whether a context button should be added to certain posts. This means the context button is subject to the same reliability issues as third-party fact checkers.

Finally, if Facebook uses artificial intelligence (AI) and machine learning to power its context buttons, this could lead to even more misinformation being spread through them. We saw this happen when YouTube used AI to automatically add fact-check panels to videos about the Notre Dame fire. The AI incorrectly added panels with links about the September 11 terror attacks, which had nothing to do with the Notre Dame fire. Facebook’s AI could easily make the same mistake and start spreading misinformation if it’s used to power these context buttons.

4. Punishing pages and groups for repeatedly sharing misinformation

Facebook says that if pages or groups repeatedly share misinformation, all of their content will be demoted and they will have their ability to advertise and use monetization products revoked.

As we’ve already established, the tools and methods Facebook is using to identify misinformation are inaccurate and prone to errors. The flaws in these tools and methods are likely to unfairly punish many pages and groups that haven’t shared any misinformation.

This is particularly problematic for groups because most of their content is user-generated, and Facebook expects group administrators to fact-check every post to avoid punishment. Some groups have millions of members who post multiple times per day, so it’s impossible for the group administrators to manually review this volume of content. Facebook has over 30,000 full-time content reviewers and still has huge content moderation problems. It’s untenable to expect a small group of administrators to fact-check all the content in their group while contending with Facebook’s flawed misinformation tools and algorithms.

The real solution to misinformation

Facebook may position these new methods as ways to protect the EU elections from misinformation, but they’re adding to the problem and creating more misinformation. Third parties, tools, and algorithms are never going to be able to accurately identify and suppress misinformation or tell you what’s truthful information.

The most reliable way to identify misinformation and find honest information is to do it yourself. Look at different sources, assess how they gather information, decide whether they present the facts truthfully, and then make your own judgment on whether each source is reliable or not. Your own judgment is the best tool you have for assessing the credibility of information.
