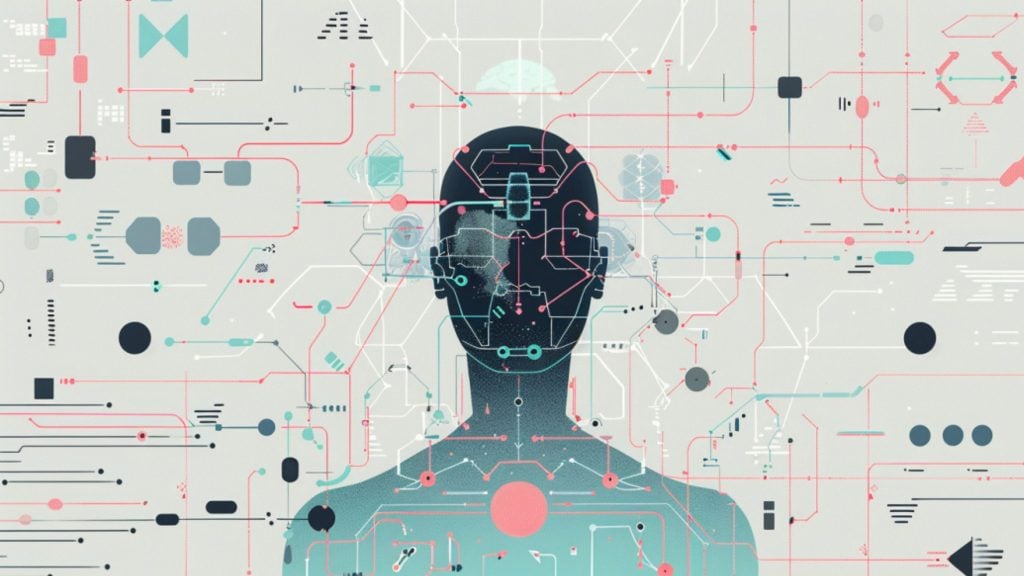

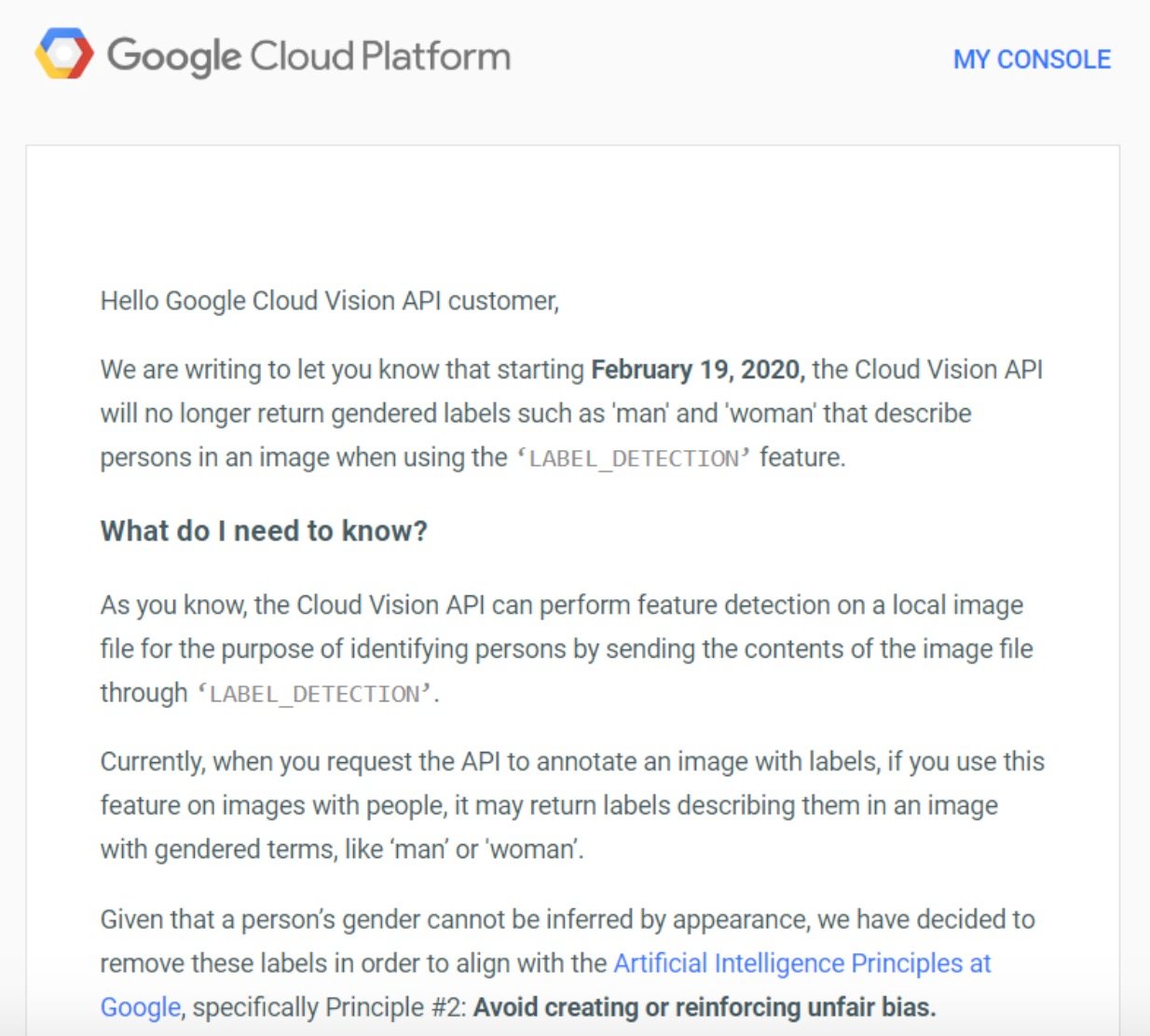

According to an email seen by Business Insider, Google’s image-labeling AI tool will no longer use gender tags such as “man” and “woman” to label pictures, because a person’s gender cannot be determined by looks alone.

In the email, Google apparently cited its ethical rules as a basis for making the changes.

Industry insiders are welcoming the change and believe that Google’s move is exemplary for the AI industry as a whole.

Here’s what Google’s ethical rules say about “reinforcing unfair bias”:

“AI algorithms and datasets can reflect, reinforce, or reduce unfair biases. We recognize that distinguishing fair from unfair biases is not always simple, and differs across cultures and societies. We will seek to avoid unjust impact on people, particularly those related to sensitive characteristics such as race, ethnicity, gender, nationality, income, sexual orientation, ability, and political or religious belief.”

While an AI’s ability to recognize a gender binary might be helpful in some cases, many people now identify outside the binary of “man” and “woman”, and the move is being received positively in some circles.

Though Google hasn’t been explicit about the intentions behind its move, it appears to be a measure to accommodate transgender people.

What’s more, a Business Insider test of Google’s Cloud Vision API found that Google has already started implementing the changes.

Frederike Kaltheuner, a tech policy fellow at Mozilla, said that Google’s move was “very positive.”

“Anytime you automatically classify people, whether that’s their gender, or their sexual orientation, you need to decide on which categories you use in the first place – and this comes with lots of assumptions. Classifying people as male or female assumes that gender is binary. Anyone who doesn’t fit it will automatically be misclassified and misgendered. So this is more than just bias – a person’s gender cannot be inferred by appearance. Any AI system that tried to do that will inevitably misgender people,” said Kaltheuner.