Social commentary YouTuber I,Hypocrite has had his channel terminated shortly after YouTube flagged one of his videos for “hate speech.”

https://twitter.com/lporiginalg/status/1234704837947736066

The video is still available on BitChute, an alternative to YouTube that supports free speech, and features I,Hypocrite reviewing key moments from a recent debate he had with YouTuber Jangles ScienceLad over whether Islam or White Nationalism is a greater threat to national security in the US.

In the video, I,Hypocrite and Jangles ScienceLad discuss Islamist attacks, hate crimes, terrorist attacks, shootings, murders, and more while also showing various related statistics and reports on-screen.

It’s likely that YouTube’s artificial intelligence (AI) automatically flagged the video as hate speech based on these topics, without understanding that they were being discussed in the context of a debate.

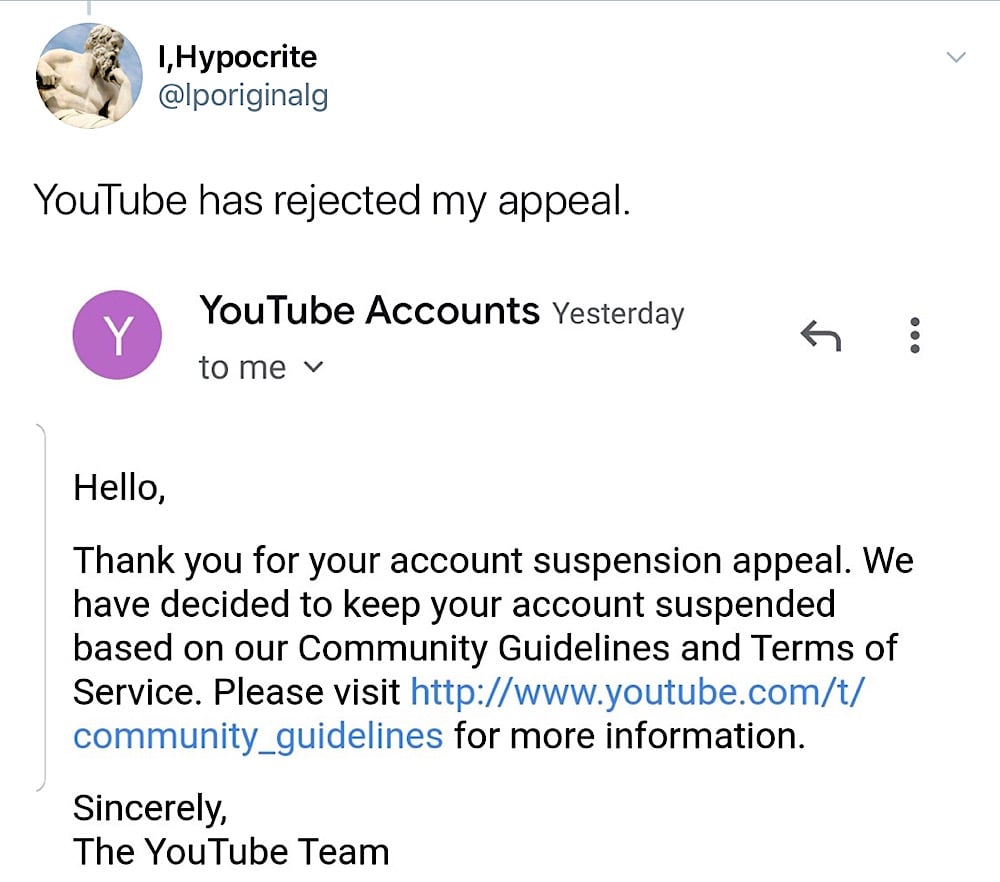

I,Hypocrite appealed the decision on the grounds that his content did not glorify violence and was an “intellectual dissection of terror and political violence threats in the United States.”

However, YouTube rejected the appeal and said it would not be reinstating the channel.

After the appeal was rejected, Team YouTube did reach out to I,Hypocrite on Twitter and said “we’ll look into this further.”

For now, the I,Hypocrite YouTube channel is down but many of his videos are available on BitChute.

The takedown of I,Hypocrite’s channel encapsulates the wider impact that YouTube’s hate speech rules, and its use of AI to enforce them, have had on the creator community.

Journalists, history teachers, and other content creators who discuss topics such as war and crime from an analytical, educational, or historical perspective have found their content suddenly removed or demonetized since these hate speech rules were introduced in June 2019.

Despite the collateral damage these hate speech rules have caused to the creator community, federally funded researchers recently wrote that they are “devoting significant resources to developing detection algorithms for hate speech, abusive language, and offensive language” in response to the hate speech policies introduced on several big tech platforms.