A new collaboration between YouTube and talent agency CAA is making waves, but not without raising concerns about its broader implications.

The partnership, which aims to empower celebrities to identify and remove AI-generated deepfakes, is being marketed as a step toward “responsible AI.” However, as with YouTube’s automated copyright takedown features, the new tool could open the door to content censorship, potentially silencing satire, memes, and even critical commentary.

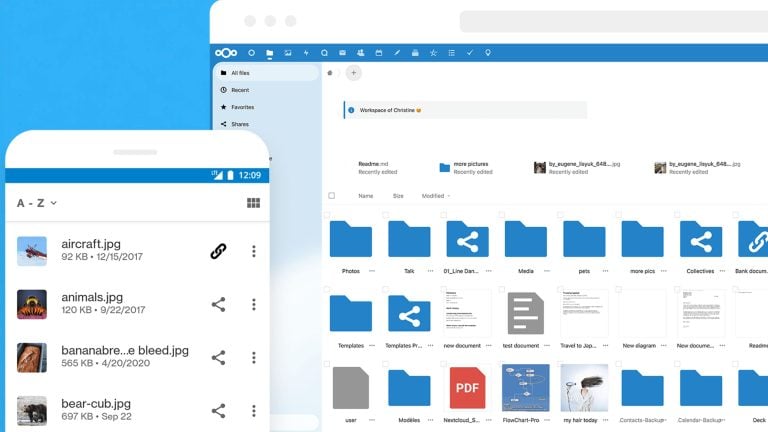

YouTube, positioning itself as a champion of creative industries, has developed an early-stage likeness-management tool intended to help talent fight back against unauthorized AI-created content. This tool will purportedly allow actors, athletes, and other public figures to flag and request the removal of deepfake videos featuring their likenesses.

CAA’s clients, described as having been “impacted by recent AI innovations,” will be among the first to test this system in early 2025. While the identities of the participating celebrities remain undisclosed, YouTube plans to expand testing to include top creators and other high-profile individuals.

According to YouTube, this initiative is part of its broader effort to establish a “responsible AI ecosystem.”

In a blog post, the company emphasized its commitment to empowering talent with tools to protect their digital personas. But skeptics have long argued this approach risks overreach, giving a privileged few the power to dictate what can and cannot be shared online.

Bryan Lourd, CAA’s CEO, applauded YouTube’s efforts, stating that the partnership aligns with the agency’s goals of protecting artists while exploring new avenues for creativity. “At CAA, our AI conversations are centered around ethics and talent rights,” Lourd said, commending YouTube’s “talent-friendly” solution. But while these goals sound noble, they mask a larger concern: where is the line drawn between protecting one’s likeness and stifling legitimate expression?

Critics of the initiative fear it could lead to an unintended suppression of content, particularly memes and parodies that often rely on the creative reinterpretation of public figures.

Historically, such content has often been protected under the fair use doctrine, but the growing push to regulate AI-generated media risks undermining these protections. As YouTube experiments with new tools to detect and manage AI-generated faces, it’s worth questioning how this technology might be weaponized to suppress dissent or critique in the digital public square.

Adding to the controversy, YouTube’s announcement follows its introduction of other AI-detection technologies. Recently, the platform rolled out tools to identify synthetic singing voices and gave creators the option to permit third parties to use their content for training AI models. While these advancements are framed as empowering, they also grant YouTube immense control over the boundaries of digital creativity.

YouTube CEO Neal Mohan praised CAA’s involvement, describing the agency as a “strong first partner” for testing its AI-detection tools. “At YouTube, we believe that a responsible approach to AI starts with strong partnerships,” Mohan said. But critics remain wary, questioning how “responsible” such initiatives can be when they hold the potential to curtail free expression.

As AI technologies evolve, so too does the debate over how to manage their impacts. While safeguarding against malicious deepfakes is undeniably important, the tools designed to combat them must not come at the expense of creativity, critique, and the open exchange of ideas. With YouTube and CAA pushing the envelope on digital rights management, it remains to be seen whether these efforts will strike the right balance or tip the scales toward censorship.