When YouTube changed its algorithm in January 2019 to crack down on “borderline content and content that could misinform users in harmful ways,” recommendations to legacy media outlets increased at the expense of independent YouTubers.

Despite benefiting from this algorithm change, several media outlets are continuing to push YouTube to further reduce recommendations to “problematic content.”

Reporters from The New York Times and The Daily Mail are citing a study by researchers at the University of California, Berkeley, which acknowledges that YouTube has substantially reduced "conspiratorial recommendations" but concludes by questioning "whether such content is appropriate to be part of the baseline recommendations" on YouTube.

Hany Farid, a computer science professor at the University of California, Berkeley, and co-author of the study, also argues that YouTube can "do more" to reduce "problematic content" but chooses not to.

The study analyzed more than eight million recommendations from videos posted by over 1,000 YouTube channels during a 15-month period between October 2018 and February 2020.

While the researchers claim that the study looked at the most popular and recommended news-related channels, YouTube spokesperson Farshad Shadloo said “the list of channels the study used to collect recommendations was subjective and didn’t represent what’s popular on the site.”

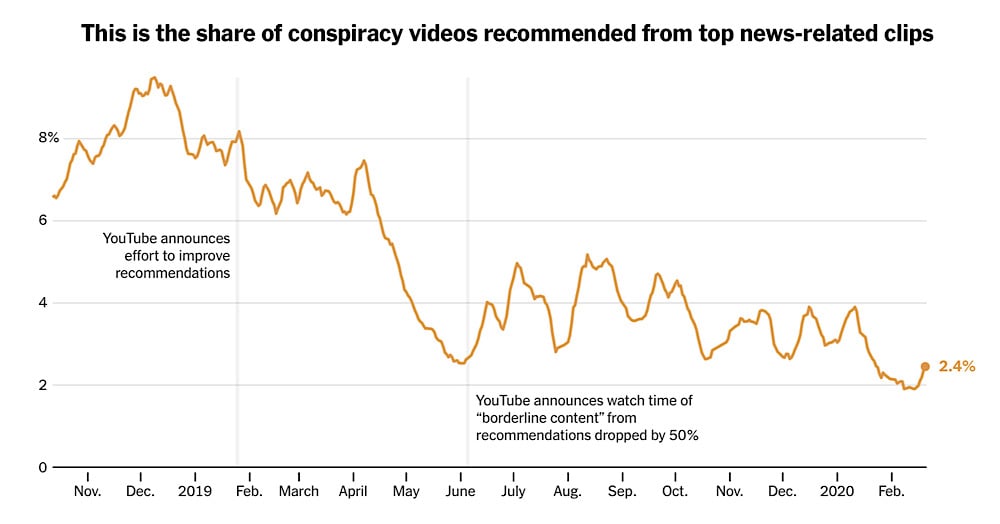

Regardless, the study concludes that the share of recommendations pointing to "conspiracy videos" dropped from around 8% when YouTube announced its January 2019 algorithm change to 2.4% in February 2020, a decrease of roughly 70% (about 3.3x).

Since the introduction of this algorithm change, YouTube CEO Susan Wojcicki has also said that watch time of borderline content from non-subscribed recommendations in the US has dropped by more than 70%.

Yet Farid contends that YouTube isn’t doing enough to reduce problematic content.

“If you have the ability to essentially drive some of the particularly problematic content close to zero, well then you can do more on lots of things,” Farid said. “They use the word ‘can’t’ when they mean ‘won’t.’”

The push for YouTube to further reduce recommendations through an algorithm that has boosted legacy media outlets while hurting independent creators comes just a couple of months after several media outlets used a flawed study to push for demonetization on YouTube.

In that case, the study didn't look directly at YouTube recommendations, yet it still claimed that YouTube promotes "climate change misinformation" videos through its recommendation system.