During today’s US Senate Committee on Commerce, Science, and Transportation hearing titled “Does Section 230’s Sweeping Immunity Enable Big Tech Bad Behavior?,” Facebook CEO Mark Zuckerberg, Twitter CEO Jack Dorsey, and Google CEO Sundar Pichai testified on potential reforms to Section 230 of the Communications Decency Act (CDA).

Currently, Section 230 shields these companies from legal liability for user-generated content on their platforms and for content moderation decisions that are made in “good faith” to remove or edit content that they or their users deem to be “obscene” or “objectionable.”

The goal of today’s hearing was to review Section 230 and establish whether new regulations are necessary to preserve a free and open internet.

One of the main arguments put forward by Zuckerberg and Dorsey was that more transparency around tech companies’ content moderation practices should be the driving force behind any potential Section 230 reforms.

In his written testimony, Zuckerberg stated:

“The debate about Section 230 shows that people of all political persuasions are unhappy with the status quo. People want to know that companies are taking responsibility for combatting harmful content – especially illegal activity – on their platforms. They want to know that when platforms remove content, they are doing so fairly and transparently. And they want to make sure that platforms are held accountable.”

Dorsey also focused on the importance of transparency in his written testimony:

“We believe increased transparency is the foundation to promote healthy public conversation on Twitter and to earn trust. It is critical that people understand our processes and that we are transparent about what happens as a result. Content moderation rules and their potential effects, as well as the process used to enforce those rules, should be simply explained and understandable by anyone.

We believe that companies like Twitter should publish their moderation process. We should be transparent about how cases are reported and reviewed, how decisions are made, and what tools are used to enforce. Publishing answers to questions like these will make our process more robust and accountable to the people we serve.”

Yet as Zuckerberg and Dorsey were questioned during the hearing, it quickly became apparent that while this push for transparency would make Big Tech’s arbitrary censorship even more prominent, it wouldn’t make the process fairer or help to hold these platforms accountable to their users.

Twitter’s policy on world leaders

Twitter’s decision to censor President Trump multiple times while allowing several tweets from Iran’s Supreme Leader came up several times during the hearing.

When quizzed by Senator Roger Wicker on why Twitter censors Trump and gives Khamenei a pass, Dorsey said that Khamenei’s tweets didn’t violate the terms of service because they’re considered “saber rattling which is part of the speech of world leaders in concert with other countries. Speech against our own people or a country’s own citizens, we believe is different and can cause more immediate harm.”

But when Senator Rick Scott highlighted that Twitter has failed to label tweets from Chinese state media outlets that push propaganda about the residents of Xinjiang, a region where over one million Uyghur Muslims are estimated to be detained in concentration camps, Dorsey didn’t mention his principle of speech against a country’s own citizens causing immediate harm.

Scott pointed to a tweet from Chinese state media outlet the Global Times that claimed residents of Urumqi, the capital of Xinjiang, “now live a happy and peaceful life” and asked why it hadn’t been labeled.

Dorsey’s response was: “We don’t have a policy around misleading information and misinformation. We don’t. We rely upon people calling that speech out, calling those reports out, and those ideas. And that’s part of the conversation is if there is something found to be in contest then people reply to it, people retweet it, and say that this is wrong.”

Scott pushed back and highlighted the double standard of Chinese Communist Party (CCP) propaganda about concentration camps being given a pass while tweets from Senate Majority Leader Mitch McConnell and Trump are blocked.

“You guys have set up policies that you don’t enforce consistently,” Scott said. “What’s the recourse to a user?”

But Dorsey just insisted that Twitter does enforce its policies consistently and that more transparency around processes is “critical.”

Dorsey’s answers provide more transparency on the confusing rationale Twitter uses to censor some world leaders and not others, but they also highlight just how little accountability and fairness there is in the process.

Twitter users who disagree with the platform’s decision to censor Trump for speech against a country’s citizens that could cause “immediate harm,” yet give CCP propaganda a pass, have no recourse or means to challenge this decision-making process.

Twitter’s policy on locked accounts

During the hearing, Dorsey admitted that Twitter’s decision to block links to the New York Post’s bombshell report alleging that presidential candidate Joe Biden had engaged in a corruption scandal was “incorrect.” Yet two weeks later, the New York Post is still locked out of its Twitter account for tweeting links to that same report.

When quizzed on this by Senator Ted Cruz, Dorsey said:

“Yes. They have to log in to their account, which they can do at this minute, delete the original tweet which fell under our original enforcement actions, and they can tweet the exact same material, tweet the exact same article, and it would go through.”

Dorsey didn’t explain why the New York Post is being forced to delete a tweet which Twitter admits it was incorrect to take enforcement action against in the first place. And if the New York Post is going to be allowed to tweet the exact same material when the account is unlocked, forcing the outlet to delete the original tweet doesn’t make sense.

The added transparency doesn’t result in more fairness or accountability. Twitter is insisting that the tweet has to be deleted to unlock the account. The New York Post and other Twitter users who disagree with this process can’t do anything to appeal the decision or change the process.

Twitter’s censorship of the New York Post story

Dorsey’s explanation for Twitter’s censorship of the New York Post story was that the company initially thought it violated its “hacked materials” policy but that this was a mistake and so the censorship was reversed within 24 hours.

But when Senator Ron Johnson pressed Dorsey on whether he or his company had any evidence that the New York Post story was “Russian disinformation,” Dorsey admitted they had none and that the company “judged in the moment that it looked like it was hacked material.”

This additional transparency reveals that one of the biggest enforcement decisions Twitter has ever made wasn’t based on evidence but instead on an arbitrary internal judgement. Yet Twitter users have no power to hold the platform accountable and make sure it moderates based on evidence instead of in-the-moment suppositions.

Facebook’s censorship of the New York Post story

Zuckerberg’s attempts to explain the censorship of the New York Post story to Scott were no clearer than Dorsey’s and exposed just how arbitrary Facebook’s censorship of this story was.

Zuckerberg initially claimed that Facebook “relied heavily on the FBI’s intelligence” before admitting that the FBI hadn’t contacted Facebook about the New York Post story but instead had told the company to be on “heightened alert around a risk of hack and leak operations.”

Then, in a bizarre follow-up, Zuckerberg declared:

“To be clear on this, we didn’t censor the content, we flagged it for fact-checkers to review and pending that review, we temporarily constrained its distribution to make sure that it didn’t spread wildly while it was being reviewed. But it’s not up to us either to determine whether it’s Russian interference nor whether it’s true. We rely on the FBI and intelligence and fact-checkers to do that.”

So in the same sentence, Zuckerberg admitted that Facebook made the decision to suppress the post but somehow also insisted that this is not censorship.

And in the very next sentence, Zuckerberg said it’s not up to Facebook to decide whether something is true – that’s the job of the FBI (who he’s already admitted hadn’t contacted Facebook about the New York Post story), intelligence, and fact-checkers. Yet if it’s not up to Facebook to decide what’s true, why is the platform selectively intervening and deciding that some posts need to be flagged for the fact-checkers to review?

Zuckerberg’s statements reveal that the process of flagging the New York Post’s story was arbitrary, unfair, and selective. But even with this extra transparency, Facebook users can’t change the process and there’s nothing stopping Facebook from continuing with this selective flagging process.

Zuckerberg’s claim that there are “no exceptions” to Facebook’s incitement to violence policy

When Senator Ed Markey asked Zuckerberg whether he would commit to removing messages from the President if he “encourages violence after election results are announced,” Zuckerberg said: “Yes. Incitement to violence is against our policy and there are not exceptions to that including for politicians.”

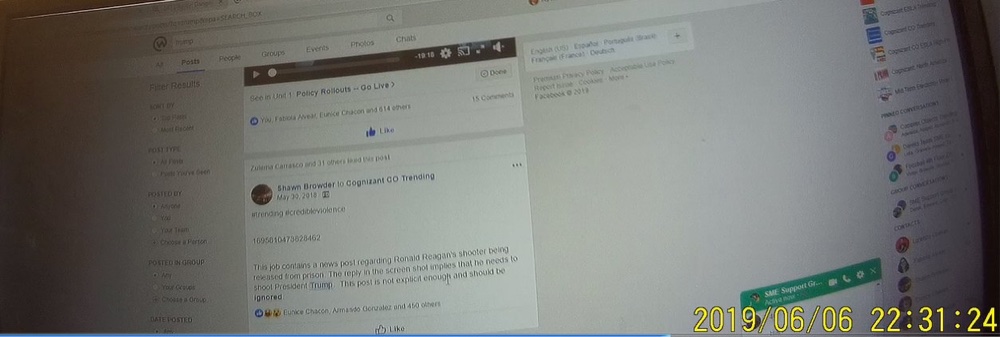

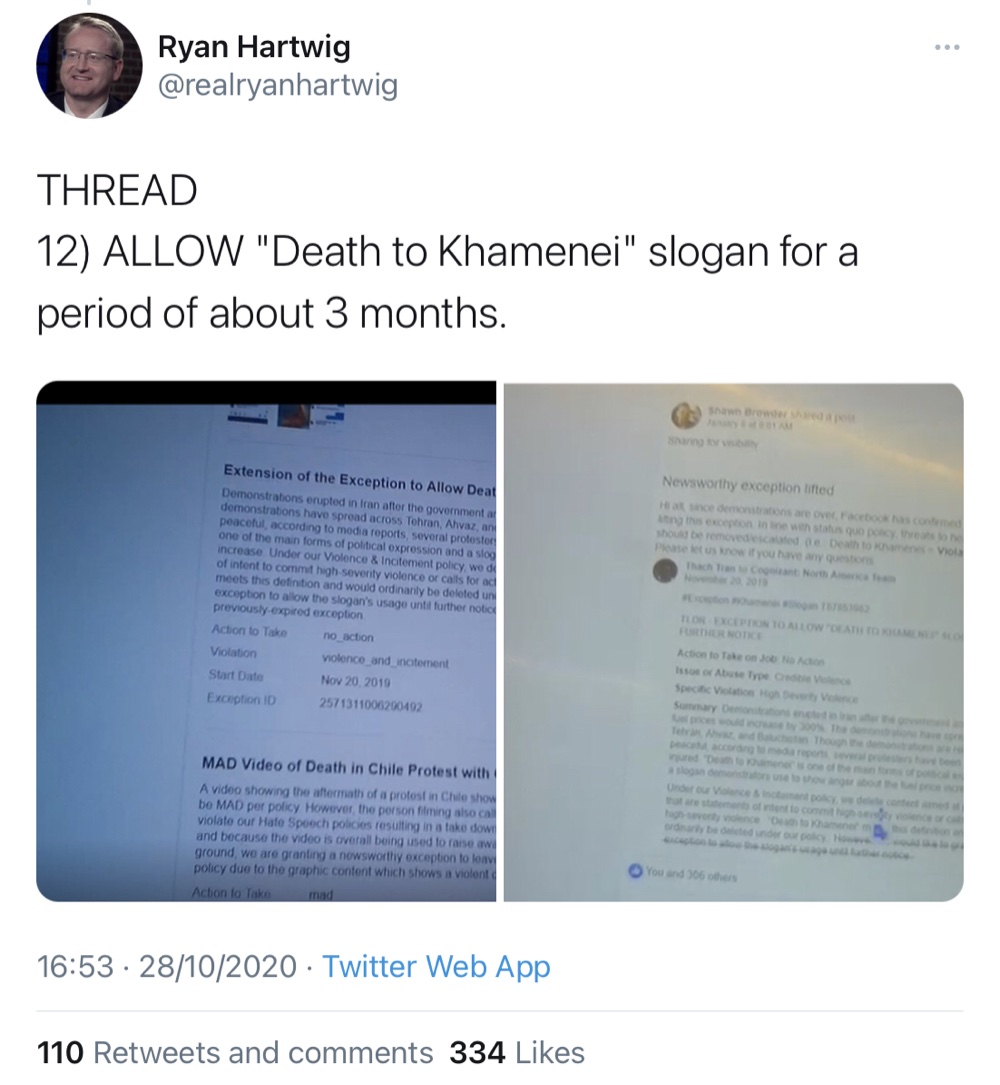

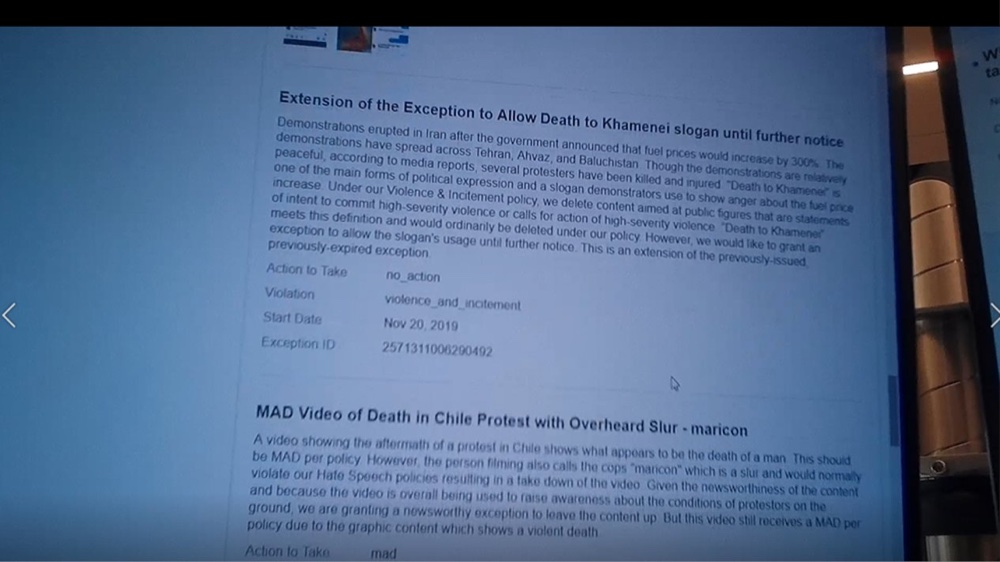

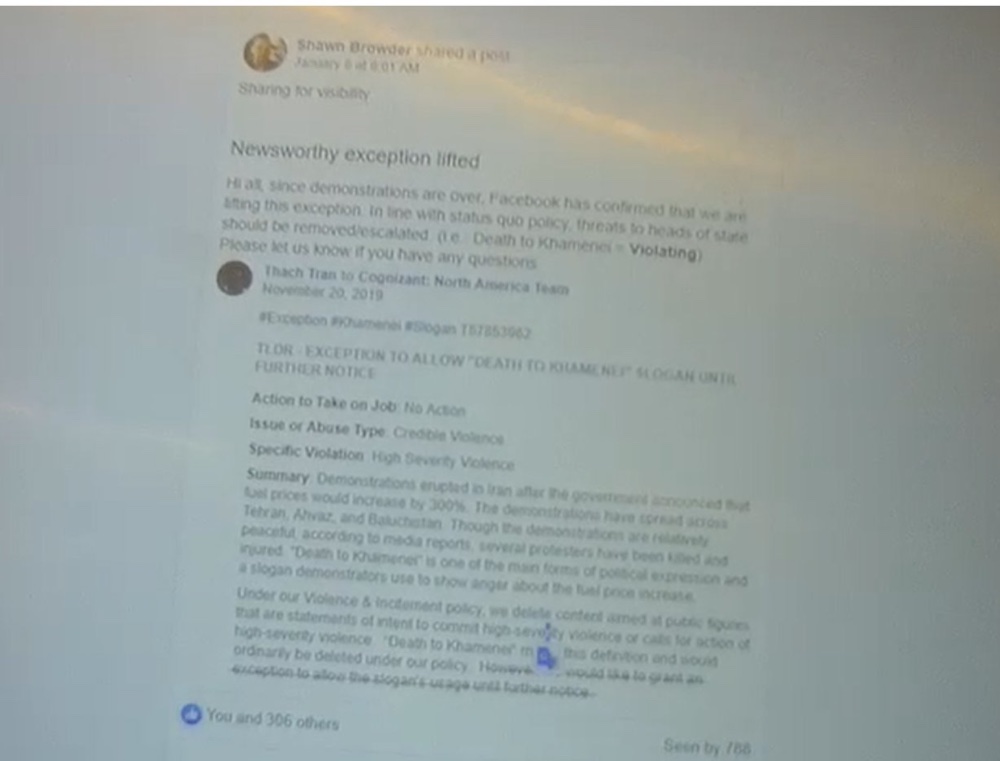

But former Facebook content moderator Ryan Hartwig shared multiple screenshots of Cognizant, a contractor that moderates content for Facebook, instructing Facebook content moderators to ignore or carve out exceptions for several posts that had been flagged for inciting violence.

One of the screenshots provided by Hartwig shows Cognizant telling Facebook moderators to ignore a post implying Ronald Reagan’s shooter should shoot Trump because “it’s not explicit enough.”

Another set of screenshots shows Cognizant admitting that the phrase “Death to Khamenei” would normally be deleted under Facebook’s violence and incitement policy but that the company is carving out an exception to allow the slogan to be used until further notice.

Screenshots showcasing Facebook’s internal content moderation panels and the rationale that’s being used to justify specific content moderation decisions are one of the ultimate examples of transparency in this area.

Yet there’s no evidence of fairness or accountability in these leaked screenshots. Instead, they reveal more bias and more arbitrary and subjective decision-making.

Increased transparency isn’t making Big Tech more accountable

Dorsey and Zuckerberg’s admissions during this hearing highlighted just how arbitrary and selective some of their biggest censorship decisions have been.

But the answers they gave undercut their own assertion that more transparency on their content moderation decisions will make the process fair and hold them accountable.

Throughout the hearing, they provided new information on the internal rationale that has led to them censoring the President, the New York Post, and more.

This added transparency revealed that the process isn’t fair and that there’s little to hold these platforms accountable to their users. And by openly admitting to senators that they enforce based on in-the-moment judgments and censor without evidence of alleged wrongdoing, Dorsey and Zuckerberg showed that additional transparency has very little impact on the content moderation process.