A group of more than 500 experts in cybersecurity, cryptography, and computer science from 34 countries has issued a clear warning against the European Union’s proposed Chat Control 2.0 regulation.

In a joint open letter, the signatories describe the plan as “technically infeasible” and caution that it would open the door to “unprecedented capabilities for surveillance, control, and censorship.”

We have obtained a copy of the open letter.

Their statement arrives just days ahead of a critical meeting in the Council of the European Union on September 12, with a final vote set for October 14 that will determine whether the regulation moves forward.

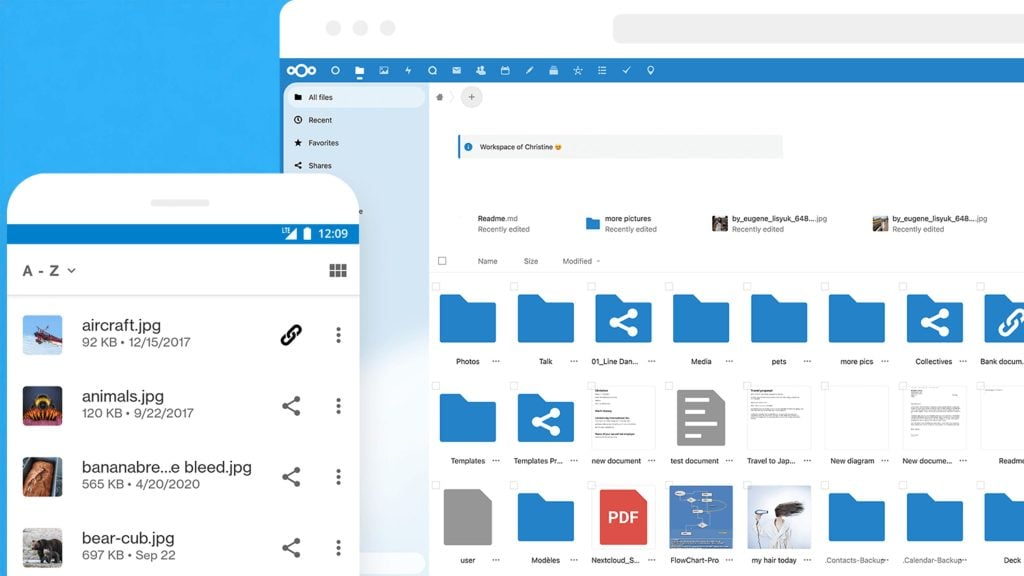

The proposed law would compel messaging apps, email platforms, cloud services, and even providers of end-to-end encrypted communication to scan all user content automatically. This would apply to texts, images, and videos, whether or not there is any suspicion of wrongdoing.

According to the researchers, such detection systems cannot coexist with secure communication: “On‑device detection, regardless of its technical implementation, inherently undermines the protections that end‑to‑end encryption is designed to guarantee.”

By forcing companies to monitor encrypted content, the regulation would introduce security weaknesses that could be exploited by malicious actors and hostile governments.

The scientists also emphasize the inaccuracy of the proposed approach. They argue that large-scale scanning systems produce unacceptable error rates and could generate enormous numbers of false reports.

“Existing research confirms that state‑of‑the‑art detectors would yield unacceptably high false positive and false negative rates, making them unsuitable for large‑scale detection campaigns at the scale of hundreds of millions of users.”

Ordinary individuals exchanging private messages could be falsely flagged and subjected to investigation, even in the absence of any illegal behavior.
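The scale problem the researchers describe comes down to base-rate arithmetic, which a short calculation can illustrate. The Python sketch below is only that: every number in it (message volume, prevalence, error rates) is an invented placeholder rather than a figure from the open letter, and the names `ScanScenario` and `expected_daily_outcomes` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanScenario:
    """Hypothetical inputs; none of these figures come from the open letter."""
    messages_per_day: int        # total messages scanned per day
    prevalence: float            # fraction of messages that are actually illegal
    false_positive_rate: float   # share of legal messages wrongly flagged
    false_negative_rate: float   # share of illegal messages that slip through


def expected_daily_outcomes(s: ScanScenario) -> dict[str, float]:
    """Expected counts per day under the assumed rates."""
    illegal = s.messages_per_day * s.prevalence
    legal = s.messages_per_day - illegal
    true_flags = illegal * (1.0 - s.false_negative_rate)
    false_flags = legal * s.false_positive_rate
    missed = illegal * s.false_negative_rate
    flagged = true_flags + false_flags
    # Precision: the share of all flags that point at genuinely illegal content.
    precision = true_flags / flagged if flagged else 0.0
    return {
        "true_flags": true_flags,
        "false_flags": false_flags,
        "missed": missed,
        "precision": precision,
    }


if __name__ == "__main__":
    # Assumed scenario: one billion messages a day, one in a million illegal,
    # a 0.1 percent false positive rate and a 10 percent false negative rate.
    scenario = ScanScenario(1_000_000_000, 1e-6, 0.001, 0.10)
    for name, value in expected_daily_outcomes(scenario).items():
        print(f"{name}: {value:,.4f}")
```

With these invented inputs, roughly a million legitimate messages would be flagged per day against about 900 correct detections, so fewer than one flag in a thousand would point at real illegal content. Different assumptions shift the totals, but as long as illegal content is rare, even a small false positive rate tends to dominate the flags.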

Proponents of the law have argued that artificial intelligence and machine learning could be used to detect illegal content effectively.

The researchers dispute this claim, writing that “there is no machine‑learning algorithm that can [detect unknown child sexual abuse material (CSAM)] without committing a large number of errors … and all known algorithms are fundamentally susceptible to evasion.”

In their view, those who intend to spread harmful content could easily sidestep detection using simple technical workarounds, while the broader population would be exposed to widespread and error-prone monitoring.

While several countries, including Austria, Belgium, the Netherlands, and Finland, have already voiced opposition, the outcome now depends on the choices of governments that have not yet committed.

Germany, in particular, could play a decisive role. A vote against or an abstention from Berlin could be enough to block the proposal by helping form a blocking minority, which requires at least four member states together representing more than 35 percent of the EU population.
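The blocking arithmetic can be sketched in a few lines of Python. The code below is a minimal illustration of the Council's qualified-majority rule as it is commonly stated: at least 55 percent of member states in favour, representing at least 65 percent of the EU population, with a blocking minority needing at least four states. The ten-state union and its population shares are invented placeholders, not real figures, and the function name is hypothetical.

```python
def qualified_majority_reached(pop_share: dict[str, int],
                               not_in_favour: set[str]) -> bool:
    """Return True if the proposal passes under the assumed rule.

    pop_share maps each state to its share of the total population (percent).
    not_in_favour holds states voting against or abstaining; an abstention
    counts the same as a vote against.
    """
    unknown = not_in_favour - set(pop_share)
    if unknown:
        raise ValueError(f"unknown states: {sorted(unknown)}")

    in_favour = [s for s in pop_share if s not in not_in_favour]
    total_pop = sum(pop_share.values())
    pop_for = sum(pop_share[s] for s in in_favour)

    # Integer arithmetic avoids floating-point rounding at the thresholds.
    enough_states = len(in_favour) * 100 >= 55 * len(pop_share)
    enough_population = pop_for * 100 >= 65 * total_pop
    # A blocking minority must include at least four states; with fewer,
    # the population criterion is treated as met.
    too_few_to_block = len(not_in_favour) < 4
    return enough_states and (enough_population or too_few_to_block)


# Placeholder union of ten states with made-up population shares (sum = 100).
SHARES = {"A": 19, "B": 15, "C": 13, "D": 11, "E": 9,
          "F": 8, "G": 8, "H": 7, "I": 6, "J": 4}

if __name__ == "__main__":
    print(qualified_majority_reached(SHARES, {"F", "G", "H"}))
    print(qualified_majority_reached(SHARES, {"A", "F", "G", "H"}))
    print(qualified_majority_reached(SHARES, {"A", "B", "C"}))
```

In this toy setup, three smaller states opposing (23 percent of the population) cannot stop the proposal, but it fails once the largest state joins them and the opposing share rises to 42 percent. The third call shows why the four-state minimum matters: three large states holding 47 percent still cannot block on their own.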