Facebook, which has been pummeled for years now, mostly by the media and some political circles, as the prime purveyor of hoaxes (also known as fake news) powerful enough to influence a US election, seems to be looking for a way back into the good graces of some of its fiercest critics.

And what better way than to “attack” hoaxes around the hottest health, political, and economic topic in the world today: the coronavirus pandemic.

Facebook is positioning itself as the judge and jury of what content related to the outbreak is allowed on the platform, by removing whatever it sees fit. But the giant will also go a step further, tightly monitoring and keeping track (as ever) of the activities of its more than 2 billion users globally.

This time, this “nanny media platform” will inform users when they have interacted with content it has determined to be harmful misinformation.

The announcement, made on Thursday in a company blog post, states that messages will be sent to users’ news feeds if they dared to like, share, comment on, or react to content considered harmful coronavirus-related misinformation.

Naturally, the question arises of how Facebook determines what’s true and false in this massive and multi-faceted international controversy.

We learn that the US tech giant has decided to go with the UN’s World Health Organization (WHO), itself embroiled in controversy these days, as its trusted source, along with other, unidentified “credible sources.”

But forget about transparency, because Facebook apparently won’t actually disclose to users which misinformation they allegedly reacted to, or even “what was wrong with it.”

So what is the purpose of this digital policing exercise?

The likely aim is to steer people who worry that they have been interacting with dangerous content towards “authoritative sources.”

Facebook did not miss the opportunity to use that never properly explained phrase, one so ambiguous that it has itself become untrustworthy.

“We want to connect people who may have interacted with harmful misinformation about the virus with the truth from authoritative sources in case they see or hear these claims again off of Facebook,” Facebook’s VP for Integrity Guy Rosen wrote in the blog post.

So far, “hundreds of thousands” of posts have been removed from Facebook under this policy, based on the opinions of the platform’s third-party “fact-checking” partners.

Right or wrong, these fact-checkers seem to be fulfilling their role: steering users towards some sources and away from others. In March alone, 40 million pandemic-related posts were slapped with warning labels.

And here’s the shocker – when people see them, Rosen writes, “95 percent of the time they did not go on to view the original content,” meaning that just fact-checking a piece of content is almost as good as deleting the whole thing.

The post also reveals that fact-checked content is collected in a separate section, which for now is “available for people in the US.”

Fact-checkers, who have recently been discredited themselves, are one piece of the emerging puzzle of information control.

And it’s not just what’s removed from Facebook these days – it’s also about what’s allowed to stay up.

Many have fact-checked Facebook itself on this point and concluded that it often allows misinformation to stay up if it suits a political or ideological agenda, or if it comes from one of the so-called “authoritative sources.”

One example is the misattribution of imagery in news stories by major US networks, such as CBS using footage from crisis-stricken Italian hospitals when reporting on the situation in US hospitals.

Another is ABC reporting that Trump knew about the coronavirus as far back as November, a claim that turned out to be fake news debunked by the National Center for Medical Intelligence.

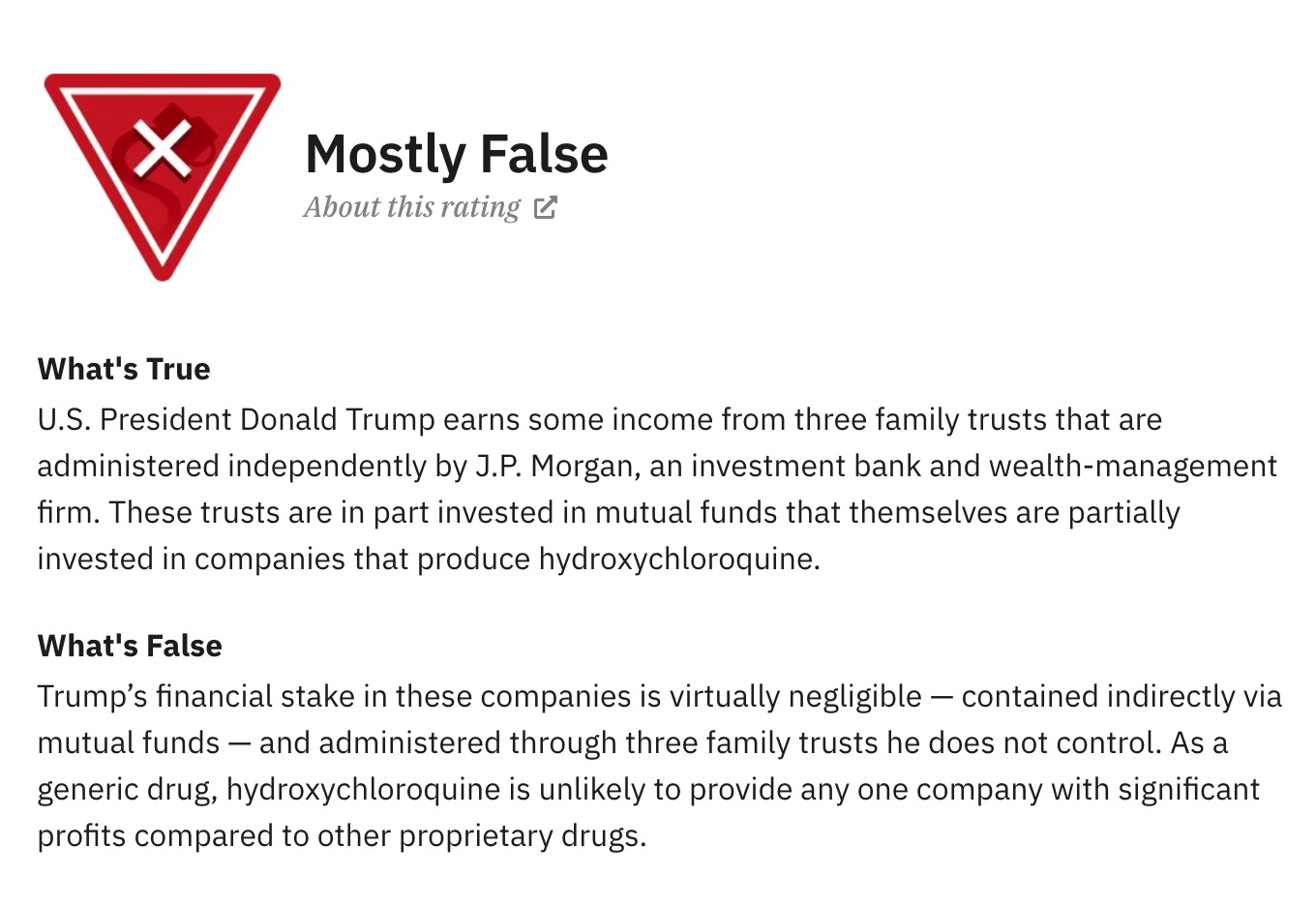

HuffPost also tried its hand at this “genre” by claiming that President Trump was profiting from the use of hydroxychloroquine to treat Covid-19 patients, a report that originated with The New York Times.

But Trump’s stake in a company that manufactures the drug is so “negligible” that even Snopes rated the claim that Trump benefits financially from promoting it as “mostly false.”

All these examples have one thing in common: Facebook has yet to label these stories as dangerous coronavirus misinformation. And it looks like the corporate media, which still have the biggest reach and can do the most damage when they broadcast untruths, are the ones getting away with a lot.

Then there’s China itself, whose anti-US propaganda around the outbreak is going largely unchecked on Facebook.