Dutch authorities are once again leaning on tech companies to act as speech enforcers in the run-up to national elections. The country’s competition regulator, the Authority for Consumers and Markets (ACM), has summoned a dozen major digital platforms, including X, Facebook, and TikTok, to a meeting on September 15.

The goal is to pressure these companies into clamping down on whatever officials define as “disinformation” or “illegal hate content” before voters cast their ballots on October 29.

EU regulators and civil society groups will be present at the session, reinforcing a growing trend in which unelected bureaucrats, activist organizations, and corporate gatekeepers coordinate to shape public conversation online.

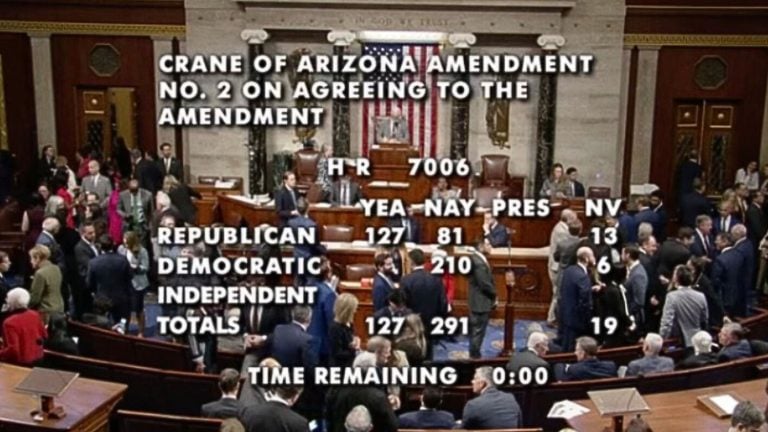

Central to the effort is the Digital Services Act (DSA), the EU’s new online censorship law, which hands governments broad authority to demand content removals based on vague and shifting terms such as “harmful” or “illegal.”

The ACM, tasked with enforcing the DSA in the Netherlands, is using this legislation to demand stronger content censorship from platforms in the lead-up to the vote.

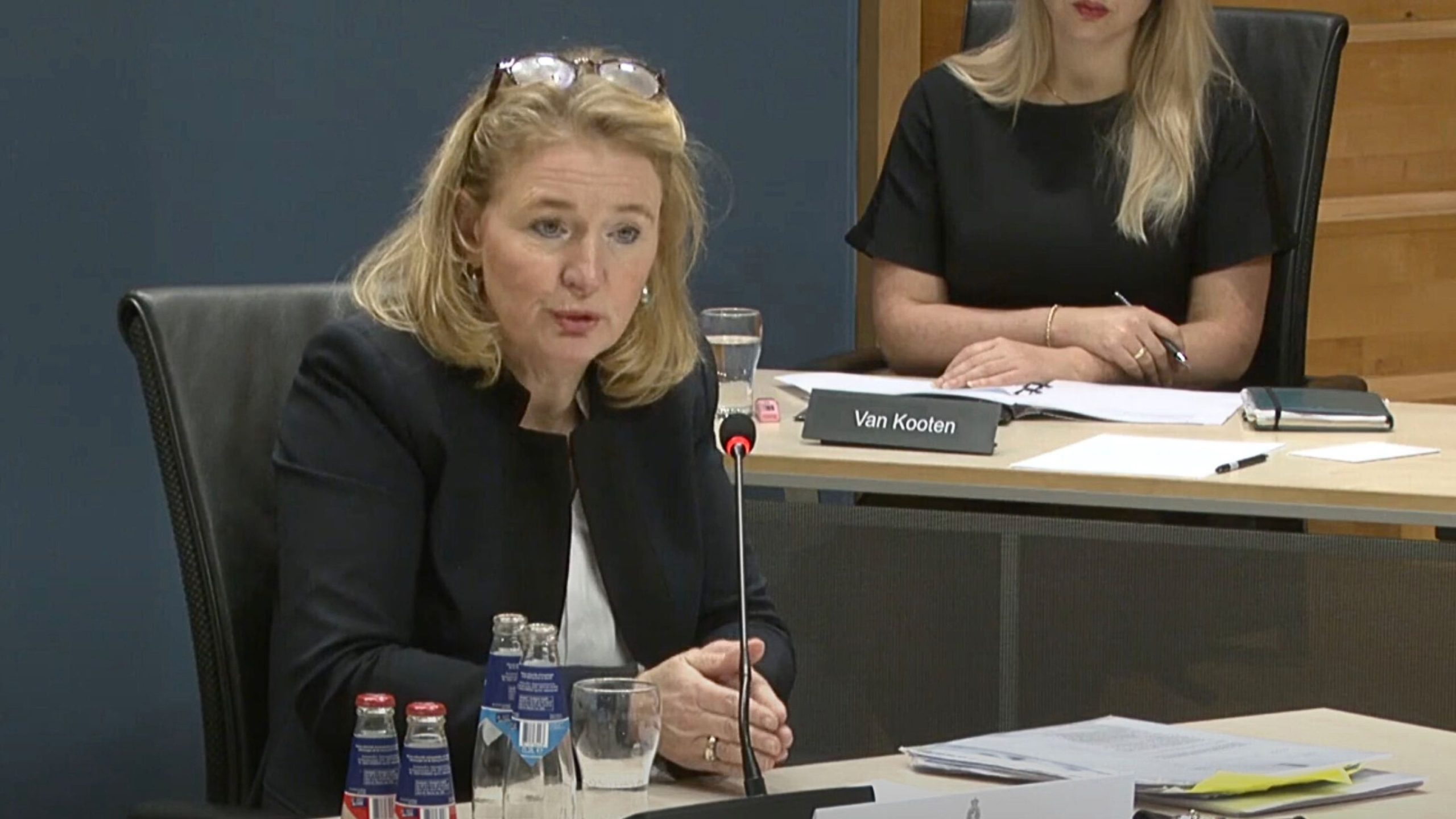

ACM director Manon Leijten said that platforms designated as Very Large Online Platforms (VLOPs) must “take effective measures against illegal content” and operate with “transparent and diligent policies.” Beneath the bureaucratic language is a clear push to sanitize online discussion before voters have a chance to weigh the issues themselves.

Back in July, the ACM had already contacted these platforms and asked them to outline their strategies for ensuring what it calls “electoral integrity.”

The upcoming September meeting is designed to examine how these companies plan to enforce that goal, particularly regarding user-generated content during the campaign season. What is being marketed as a safeguard against threats to democracy is, in practice, a tightening of the boundaries around what people are allowed to say.

The regulator is also encouraging users to report directly if they believe platforms have mishandled flagged content, setting up a system that could easily be gamed to suppress dissent. While officials claim this is about transparency, the real question remains unaddressed: who exactly gets to determine what counts as dangerous or misleading?