A growing effort to link online activity with verified identity is gaining momentum, with OpenAI becoming the latest tech company to embrace stricter digital ID measures.

In response to several lawsuits and reports tying chatbots to teen suicides, the company announced it will begin estimating users’ ages and, in some cases, demand government-issued identification to confirm users are over 18.

Lawmakers, including Missouri Senator Josh Hawley, have also been pushing for such measures.


OpenAI framed the shift as a necessary concession. “We know this is a privacy compromise for adults but believe it is a worthy tradeoff,” the company stated.

CEO Sam Altman added on X, “I don’t expect that everyone will agree with these tradeoffs, but given the conflict it is important to explain our decisionmaking.”

The move aligns with a broader industry trend toward reducing anonymity online, all under the premise of protecting young users.

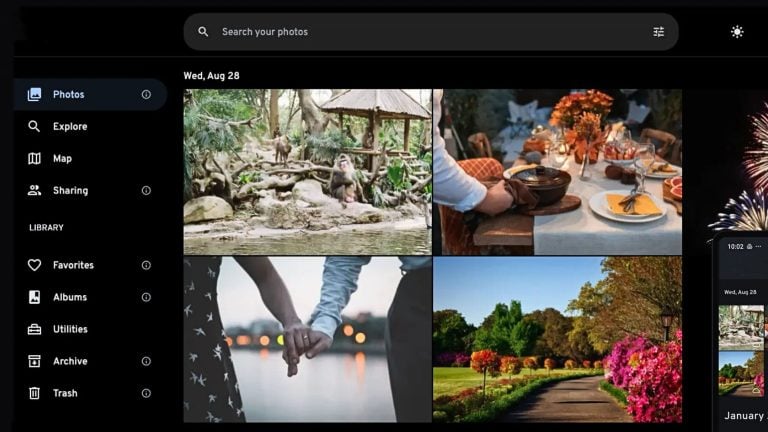

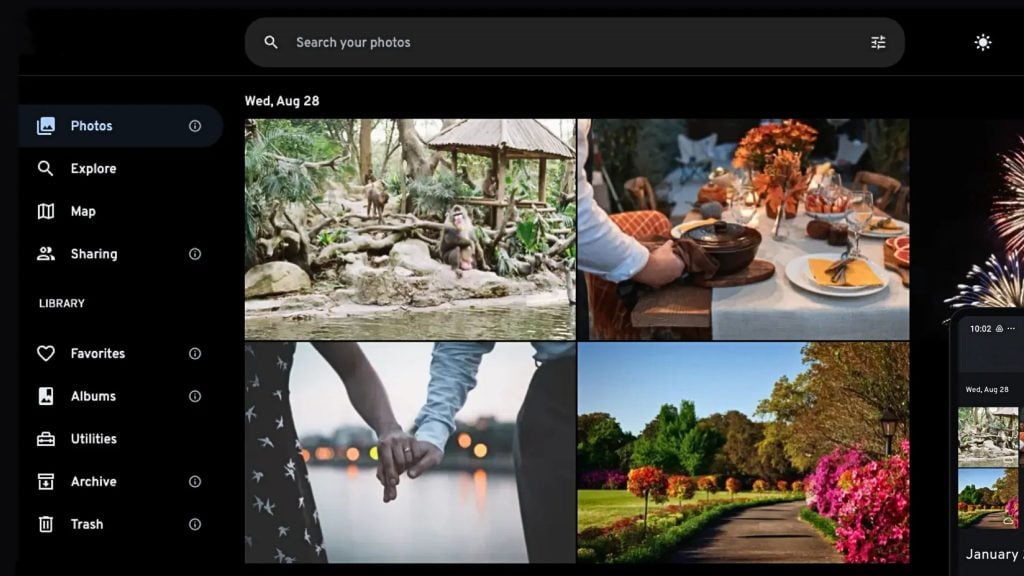

What was once a fringe idea is now being built into mainstream platforms. In July, YouTube announced a similar plan: it would use AI tools to estimate users' ages and restrict accounts it flags as underage unless the user passes a digital ID check.

OpenAI’s announcement comes amid heightened legal pressure.

One lawsuit, filed in August by the parents of 16-year-old Adam Raine, claims that ChatGPT played an active role in his death by suicide.

According to the suit, the chatbot drafted an initial version of a suicide note, suggested methods of self-harm, dismissed warning signs, and advised the teen not to involve adults.

Other lawsuits have since followed.

In response, OpenAI has expanded its safety measures. After adding parental controls earlier in September, the company has now introduced rules that will restrict how minors interact with ChatGPT.

These changes go well beyond content filtering.

Users will be monitored continuously, and when the system suspects a user is under 18, it will enforce stricter behavioral limits: blocking topics related to self-harm, refusing flirtatious or romantic dialogue, and, in some cases, escalating to law enforcement.

“If an under-18 user is having suicidal ideation, we will attempt to contact the users’ parents and if unable, will contact the authorities in case of imminent harm,” the company said.

OpenAI’s leadership has acknowledged the growing tension between maintaining open access to powerful AI tools and preventing misuse.

Earlier versions of ChatGPT were designed to avoid a broad set of sensitive topics.

But in recent years, competitive pressure from less restrictive models and growing political resistance to moderation led the company to relax those restrictions.

“We want users to be able to use our tools in the way that they want, within very broad bounds of safety,” OpenAI said.

Internally, the company says it follows the principle "treat our adult users like adults," while still drawing the line at situations where harm might occur.

What’s becoming more evident is that privacy is being traded for control. As governments and companies look to prevent online “harm,” they are turning more frequently to identity verification systems.

The push for digital ID, once pitched as a tool to combat fraud or improve safety, is increasingly being used to regulate access to online spaces, including those that were once anonymous by design.

The implications for online privacy are far-reaching.

As more platforms introduce age detection and identity verification, users may face growing barriers to anonymous access, particularly when using generative AI tools.

For those concerned about how personal data is collected and stored, the move toward ID-based systems signals a major change in how the internet operates.