More people than ever are turning away from Google’s tightly controlled ecosystem in favor of independent, privacy-focused tools. That shift makes the recent blocking of Immich, one of the most respected self-hosted photo management platforms, look especially bad for Google.

Immich has earned a reputation as a simple, powerful alternative to Google Photos, letting users store and organize images on their own servers without surrendering data to a corporation.

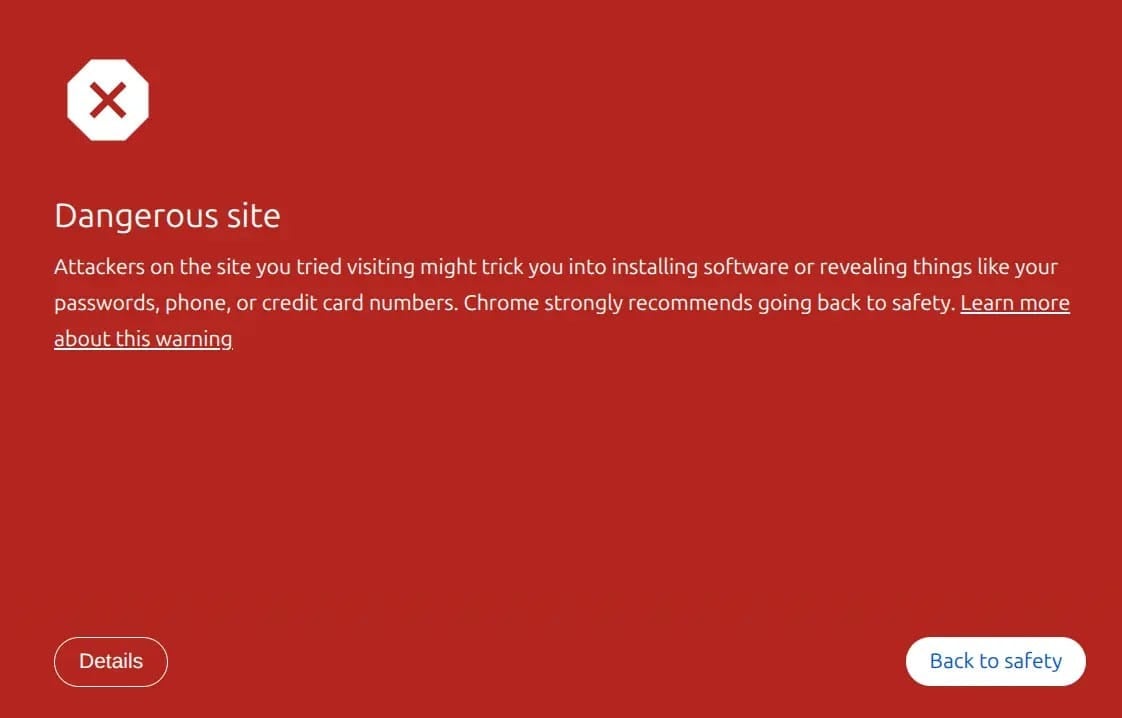

Yet even though Immich is an open-source project with a transparent codebase, Google’s Safe Browsing system abruptly labeled its immich.cloud subdomains as dangerous, locking out both users and developers behind a red “deceptive site” warning screen.

Safe Browsing is designed to protect people from malicious links and scams, but in this case, the automated system effectively crippled a legitimate privacy-respecting service.

Many users, unaware of how to bypass the warning, were left assuming the site was unsafe. Even the Immich team found themselves struggling to access their own tools.

For a company that markets itself as a champion of security and trust, this episode paints a poor picture. It raises uncomfortable questions about how much power Google’s automated filters wield over the open web and whether smaller, independent projects that promote user control are being unfairly swept aside.

After the initial flagging, the Immich team requested a review, explaining that the flagged pages were their own internal test environments and not phishing sites.

Google briefly lifted the block, only to reinstate it days later, this time blacklisting the entire domain.

The developers have now migrated their preview systems to a new domain, immich.build, in an effort to avoid further disruption.

This problem extends well beyond Immich. Other open-source platforms like Jellyfin, Nextcloud, and YunoHost have faced similar treatment.

Each case highlights the risk of allowing one corporate filter to decide what the public can safely view.