Last month, MIT Technology Review went after the Internet Archive's Wayback Machine, a service that preserves historical versions of webpages and lets users access archived copies of those pages after they've been deleted.

MIT Technology Review complained that these archives were allowing coronavirus "misinformation" to evade moderators and fact-checkers, and that the archived coronavirus articles had "better performance than most mainstream media news stories."

Now, just a few weeks later, the Wayback Machine has started adding warning labels to some of its archives while also forcing users to log in to view some of the archived content on the site.

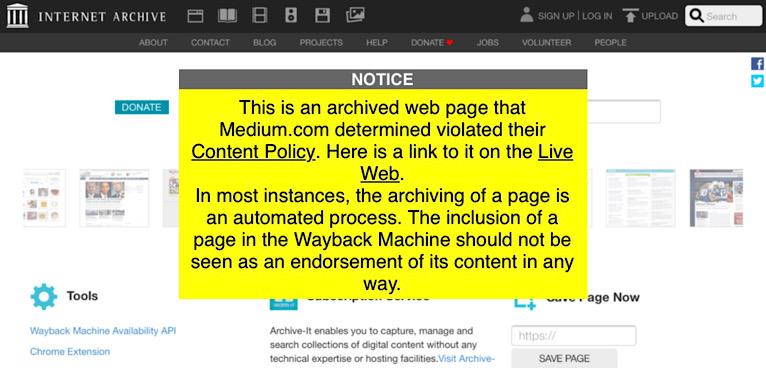

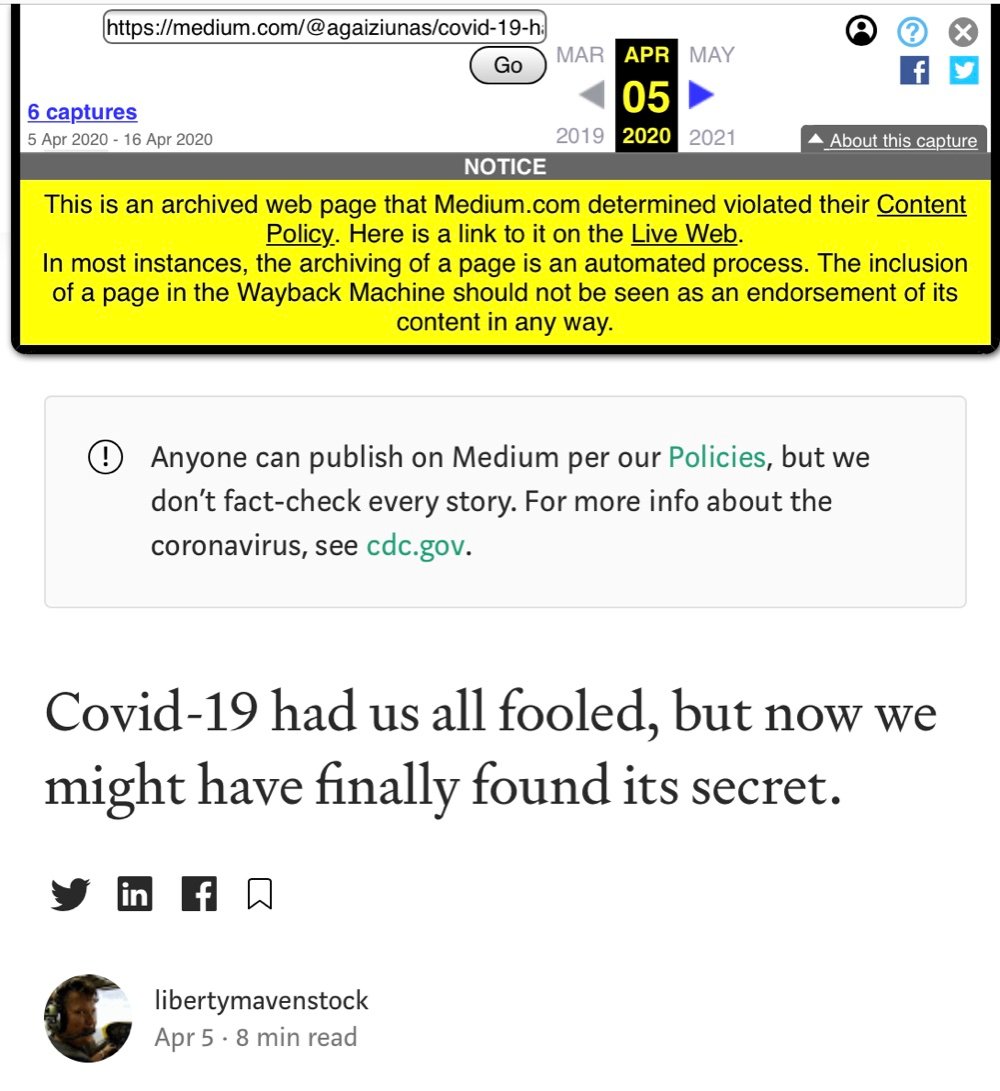

The warning labels are bright yellow, appear at the top of some archived pages, and tell users when a post was removed for violating a site’s content policy.

When archived content requires users to log in, its thumbnails are blurred out in search results.

Mark Graham, the Director of the Wayback Machine, has also been using the review section of the Wayback Machine to manually add links to articles that claim some archived posts are misinformation.

These changes to the Wayback Machine reflect a growing trend in which popular platforms and sites that once let users share or archive content without editorializing the experience are now starting to insert warnings into that content.

This trend began with the major Big Tech platforms partnering with third-party fact-checking companies and letting them fact-check users' posts, partnerships that have resulted in posts labeled "False" being suppressed and hidden behind warning messages.

Recently, Facebook took this one step further by warning users who interact with what it deems to be coronavirus misinformation and sending them articles from its preferred source of truth: the World Health Organization (WHO).

Since Facebook made this change, House Intelligence Chair Adam Schiff has urged Google, YouTube, and Twitter to implement similar measures.

The increased editorializing of posts on social media has compounded the problems associated with platforms attempting to act as arbiters of truth for their users.

Under these programs, those chosen to act as arbiters of truth have themselves been caught spreading false information, and those chosen to fact-check have been accused of bias.