The internet and social media were transformative technologies that enabled the distribution of speech at a speed and scale that the world had never seen before.

Generative AI, a technology that can quickly produce various types of media from a few prompts, has similar transformative potential because it allows people to create text, images, art, music, videos, and more at a speed and scale that were never possible before.

As the internet and social media became more ubiquitous, some lawmakers attempted to put the genie back in the bottle by ignoring First Amendment concerns and demanding that online platforms censor content that lawmakers deem to be “misinformation.” And on Tuesday, during the first Senate hearing on generative AI, some lawmakers made similar demands of this new technology.

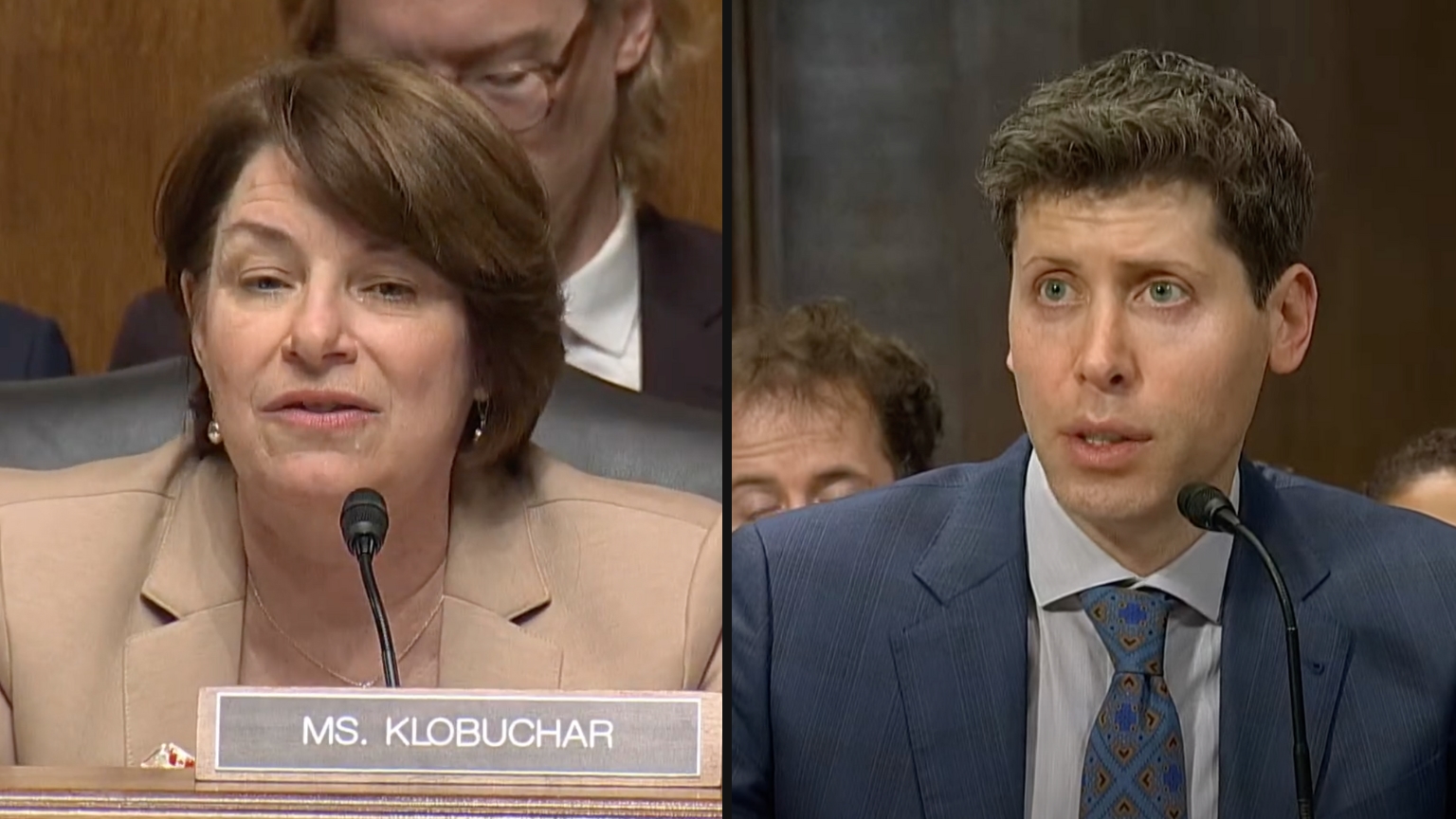

During the hearing, titled “Oversight of A.I.: Rules for Artificial Intelligence,” several senators quizzed the three witnesses (OpenAI CEO Sam Altman, IBM Chief Privacy & Trust Officer Christina Montgomery, and NYU Professor Emeritus Gary Marcus) on how generative AI could be censored or restricted so that it couldn’t create content that the senators deem to be misinformation.

Senator Amy Klobuchar (D-MN) said: “We just can’t let people make stuff up and then not have any consequence.”

She also complained that OpenAI’s generative AI chatbot, ChatGPT, had produced false information after her team asked it to create a tweet about a polling location in Bloomington, Minnesota, and used this example to suggest that “all kinds of misinformation” could be generated during elections.

Altman told Klobuchar that OpenAI is concerned about the impact on elections and said: “Hopefully the entire industry and government can work together quickly.”

While he noted that ChatGPT is different from social media because it’s used to generate content, not distribute it, Altman still assured Klobuchar that OpenAI censors ChatGPT outputs by refusing to generate certain things and monitors activity so that it can detect when users generate large volumes of content.

Ironically, Klobuchar responded to Altman by invoking “what happened in the past with Russian interference and the like” as a justification for cracking down.

The claim that Russia interfered with the 2016 presidential election and manipulated social media has been pushed by several pro-censorship politicians who argue that false claims unsupported by facts are dangerous. Yet there’s very little evidence to support this claim of mass Russian interference, with both Twitter officials and a report from Special Counsel John Durham finding a lack of evidence when investigating it.

Senator Mazie Hirono (D-HI) suggested that the AI-generated images of former President Donald Trump being arrested that went viral in March were “harmful,” complained that such images may not be classed as harmful by OpenAI, and pushed for “guardrails that we need to protect…literally our country from harmful content.”

Senator Richard Blumenthal (D-CT) indicated that he supported banning generative AI model outputs “involving elections” and asked the witnesses what other outputs they think should be banned or restricted.

Montgomery said “misinformation” is a “hugely important” area to look at. Marcus said “medical misinformation” is “something to really worry about” and called for “tight regulation.”

Senator Josh Hawley (R-MO) proposed creating a federal right of action that “will allow private individuals who are harmed by this technology to get into court” and sue generative AI companies when they’re given “medical misinformation” or “election misinformation.”

Marcus pushed back against the proposal, arguing that it would “make a lot of lawyers wealthy” but be “too slow to affect a lot of the things we care about.”

Marcus added that it’s not clear whether Section 230 of the Communications Decency Act (CDA), which shields online platforms from civil liability for user-generated content and protects their “good faith” content moderation decisions, applies to generative AI. This led Hawley to suggest that lawmakers will pass a new law specifying that Section 230 doesn’t apply to generative AI.

Senator Chris Coons (D-DE) said “disinformation that can influence elections” is “entirely predictable” and lamented that Congress has so far been unsuccessful in applying disinformation regulations to social media. He asked the witnesses whether the EU’s direction is the right path to follow (a reference to the European Union’s proposed AI Act, a far-reaching law that would regulate AI based on risk and ban certain uses of AI).

Montgomery said the EU approach “makes a ton of sense” and that “guardrails need to be in place.”

Coons also characterized AI’s ability to deliver “wildly incorrect information” as a “substantial risk” and said AI needs to be regulated. He asked Altman how OpenAI decides whether a model is “safe enough to deploy.”

Altman said OpenAI spent well over six months setting the safety standards for GPT-4, the latest version of the large language model that powers ChatGPT, and trying to find the “harms.”

Senator Lindsey Graham (R-SC) suggested that Section 230 was a “mistake.” He implied that users should be able to sue platforms if they allow “slander” or fail to enforce their terms of service against actions such as “bullying.”

He asked all of the witnesses to agree that “we don’t want to do that again” and that there should be “liability where people are harmed.”

Marcus noted that there’s a “fundamental distinction between reproducing content and generating content” but still agreed there should be liability when people are harmed. Montgomery said IBM wants to “condition liability on a reasonable care standard.” Altman said Section 230 isn’t the right framework and pushed for “a totally new approach.”

While senators from both sides of the aisle are eager to force AI companies to censor content that’s deemed to be misinformation, none of them mentioned that social media censorship based on this vague, subjective term has led to mass censorship of truthful content, such as the Hunter Biden laptop story and statements about the Covid vaccine not preventing transmission.

Senator Hirono’s comments also indicate that lawmakers have no qualms about applying these sweeping censorship rules to AI-generated memes or similar content that’s not intended to be taken seriously. While some people did think the AI-generated Trump arrest images that Hirono referenced were real, many knew they weren’t real and saw them as a fun way to experiment with AI as Trump’s arrest loomed.

Not only were concerns about over-censorship never addressed during this hearing, but the dominant AI companies are already starting to censor what their tools will output.

The CEO of Midjourney, the generative AI tool that was used to create the viral Trump arrest images, has admitted that Midjourney blocks various prompts, including prompts that attempt to create images featuring Chinese President Xi Jinping.

OpenAI already censors some outputs and has vowed to implement “safeguards” that address “misinformation.”

Censorship is just one of the restrictions lawmakers are attempting to impose on this potentially transformative technology. Many senators are also big fans of allowing only companies that hold a government-approved license to offer generative AI tools.