The UK government is moving to make the much-criticized Online Safety Act (OSA) even more contentious, this time by trying to use it to enforce outright online censorship of “misinformation,” even though the law contains no provision that explicitly imposes a legal duty of that kind on social platforms.

Nevertheless, Peter Kyle, Secretary of State for Science, Innovation and Technology, appears to be hard at work making this “mission creep” happen by expanding the act’s scope to penalize platforms deemed to be failing to remove “misinformation,” as the authorities define it.

In a post on the UK government website, Kyle noted that the OSA allows the government to set out a Statement of Strategic Priorities (SSP), which, if anything, reinforces the argument that the law is seriously flawed.

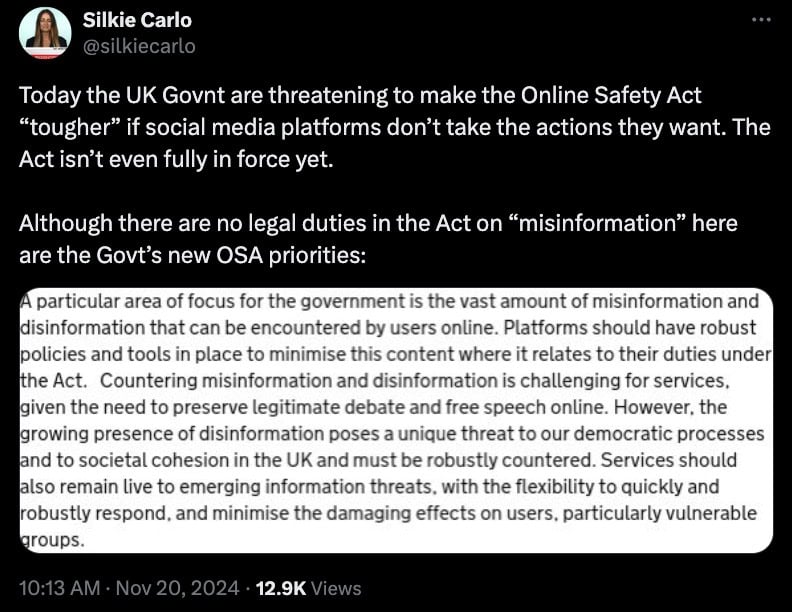

This is where the draft statement comes in, one that would make the OSA “tougher,” as Silkie Carlo, director of the civil liberties group Big Brother Watch, warned in a post.

Carlo noted that the government is already drawing up “priorities” even though the OSA is not yet fully enforced.

It all comes down to first pushing through a bad piece of legislation whose very nature leaves room for it to get even worse, in this case by being expanded.

Writes Peter Kyle: “A particular area of focus for the government is the vast amount of misinformation and disinformation that can be encountered by users online.”

Kyle justifies the obvious rush to add more stringent rules to the OSA, which would require platforms to have “robust” tools to counter “dis/misinformation.”

Kyle argues that this new, and no doubt incoming, interpretation of the OSA is necessary because the allegedly growing presence of disinformation “poses a unique threat to our democratic processes and to societal cohesion in the UK.”

The UK government appears confident that it can add this to what the OSA currently requires of platforms, namely “safety by design, age assurance.” The only part of the law that can be linked to “misinformation” is the offense of “false communications.”

But that offense specifically sets “non-trivial” psychological or physical harm as the threshold and, even then, applies to the person posting the communication; it does not legally bind platforms to censor content.

And yet the government’s new OSA priorities, as set out in the draft, single out “the vast amount of misinformation and disinformation that can be encountered by users online” as “a particular area of focus.”

The document continues: “Platforms should have robust policies and tools in place to minimize this content where it relates to their duties under the Act.”