X is urging European governments to reject a major surveillance proposal that the company warns would strip EU citizens of core privacy rights.

In a public statement ahead of a key Council vote scheduled for October 14, the platform called on member states to “vigorously oppose measures to normalize surveillance of its citizens,” condemning the proposed regulation as a direct threat to end-to-end encryption and private communication.

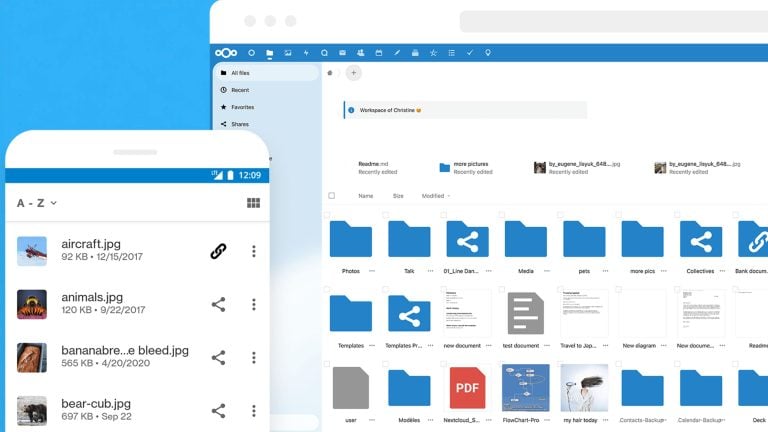

The draft legislation, widely referred to as “Chat Control 2.0,” would require providers of messaging and cloud services to scan users’ content, including messages, photos, and links, for signs of child sexual abuse material (CSAM).

Central to the proposal is “client-side scanning” (CSS), a method that inspects content directly on a user’s device before it is encrypted.

X stated plainly that it cannot support any policy that would force the creation of “de facto backdoors for government snooping,” even as it reaffirmed its longstanding commitment to fighting child exploitation.

The company has invested heavily in detection and removal systems, but draws a clear line at measures that dismantle secure encryption for everyone.

Privacy experts, researchers, and technologists across Europe have echoed these warnings.

By mandating that scans occur before encryption is applied, the regulation would effectively neutralize end-to-end encryption, opening private conversations to potential access not only by providers but also by governments and malicious third parties.
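To make the ordering concrete, here is a minimal sketch of where a client-side scan would sit relative to encryption. It is an illustration under stated assumptions, not the mechanism the draft regulation specifies: the names `scan_on_device`, `send_message`, `placeholder_encrypt`, and `BLOCKLIST` are hypothetical, SHA-256 matching stands in for whatever detection technique a provider might use, and the "encryption" step is a placeholder rather than a real cipher.

```python
import hashlib
from typing import Callable, List

# Hypothetical on-device blocklist of digests. SHA-256 exact matching is only
# a stand-in for whatever detection technique a provider would actually use.
BLOCKLIST = {hashlib.sha256(b"example-flagged-content").hexdigest()}


def scan_on_device(plaintext: bytes) -> bool:
    """Return True if the unencrypted content matches a blocklist entry."""
    return hashlib.sha256(plaintext).hexdigest() in BLOCKLIST


def send_message(
    plaintext: bytes,
    encrypt: Callable[[bytes], bytes],
    report: Callable[[bytes], None],
) -> bytes:
    """Scan the plaintext first, then pass it to the encryption step."""
    if scan_on_device(plaintext):
        # Assumption: a match is reported outside the encrypted channel.
        report(plaintext)
    return encrypt(plaintext)


def placeholder_encrypt(data: bytes) -> bytes:
    """NOT real encryption: reverses the bytes so the example stays stdlib-only."""
    return data[::-1]


reports: List[bytes] = []
send_message(b"hello", placeholder_encrypt, reports.append)
send_message(b"example-flagged-content", placeholder_encrypt, reports.append)
print(len(reports))  # one report, for the second message
```

However strong the cipher passed in as `encrypt`, the scanning and reporting code has already seen the plaintext, which is the point critics make about end-to-end encryption being neutralized.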

The implications reach far beyond targeted investigations. Once CSS is implemented, any digital platform subject to the regulation would be forced to scrutinize every message and file sent by its users.

This approach could also override legal protections enshrined in the EU Charter of Fundamental Rights, specifically Articles 7 and 8, which safeguard privacy and the protection of personal data.

A coalition of scientists issued a public letter warning that detection tools of this kind are technically flawed and unreliable at scale.

High error rates could lead to false accusations against innocent users, while actual abuse material could evade detection.
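The arithmetic behind this concern can be sketched with a short calculation. Every number below is a hypothetical assumption chosen for illustration, not a figure from the scientists' letter or from any real detection system, and `flag_counts` is an invented helper name.

```python
from fractions import Fraction


def flag_counts(total: int, prevalence: Fraction, fpr: Fraction, tpr: Fraction) -> dict:
    """Split scanned messages into correct flags, false flags, and misses."""
    illicit = total * prevalence      # messages that really contain abuse material
    legit = total - illicit           # everything else
    true_flags = illicit * tpr        # illicit messages the scanner catches
    false_flags = legit * fpr         # innocent messages flagged by mistake
    missed = illicit - true_flags     # illicit messages that slip through
    precision = true_flags / (true_flags + false_flags)
    return {
        "true_flags": true_flags,
        "false_flags": false_flags,
        "missed": missed,
        "precision": precision,
    }


# Hypothetical inputs: one billion messages, 1 in 1,000,000 illicit,
# a 0.1% false positive rate, and a 90% detection rate.
result = flag_counts(
    total=1_000_000_000,
    prevalence=Fraction(1, 1_000_000),
    fpr=Fraction(1, 1000),
    tpr=Fraction(9, 10),
)
print(int(result["true_flags"]), int(result["false_flags"]), int(result["missed"]))
print(float(result["precision"]))
```

Under these assumed rates, 900 messages are flagged correctly, 999,999 innocent messages are flagged by mistake, and 100 illicit messages go undetected, so fewer than 1 in 1,000 flags points at real abuse material. The exact figures depend entirely on the assumed rates, but the pattern holds whenever the targeted content is rare relative to total traffic.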

The letter also raises concern over the potential for repurposing or leaking flagged content, increasing the risks rather than reducing them.

Privacy advocates reject the comparison between CSS and spam or malware filters, pointing to a crucial difference: existing filters are voluntary and serve the user’s interests without reporting to law enforcement.

CSS, however, would be mandatory and turn communication services into instruments of state monitoring.

Even if implemented, the system’s effectiveness remains questionable. Individuals seeking to avoid detection could shift to services outside EU jurisdiction or use VPNs.

Meanwhile, everyday users would be left exposed to false positives, privacy breaches, and unwarranted government scrutiny.

With less than two weeks to go before the vote, X and others are urging EU governments to reject the regulation and seek alternatives that protect children without dismantling privacy protections for millions.

The outcome of the vote will help determine whether Europe moves toward preserving secure digital communication or embedding surveillance into the core of everyday online life.