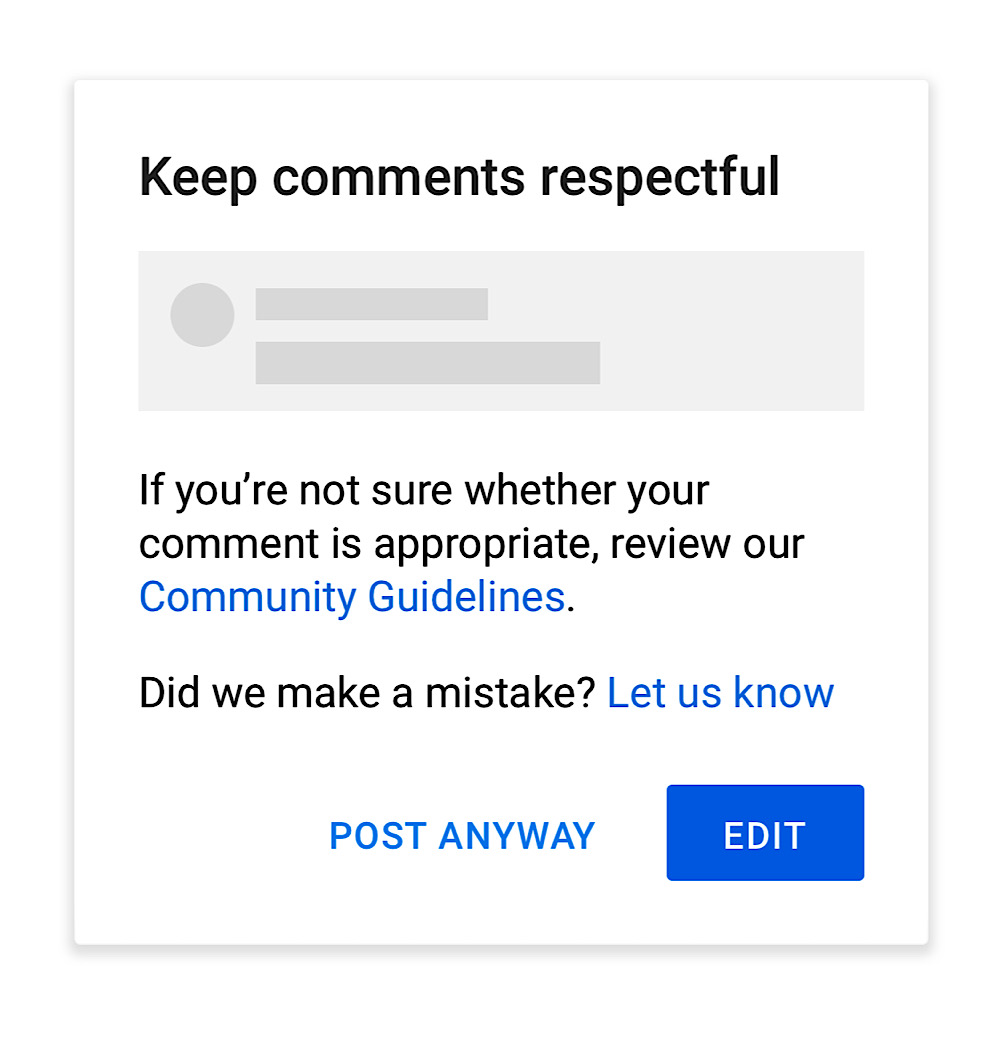

YouTube has launched a new alert that warns users that their comment "may be offensive to others" and encourages them to edit it in order to be "respectful."

The warnings are generated by YouTube's automated systems, which will continuously learn which comments "may be considered offensive" based on user reports.

YouTube has also started testing a new filter in YouTube Studio for "potentially inappropriate and hurtful comments that have been automatically held for review." The company says the filter is being introduced "so that creators don't ever need to read" these comments.

Additionally, YouTube announced that it has invested in technology that helps its systems “better detect and remove hateful comments by taking into account the topic of the video and the context of a comment.”

YouTube also pointed to the impact this technology has had on overall comment removals, noting that since early 2019, the number of "hate speech" comments removed each day has increased 46-fold.

The number of hate speech comment removals on YouTube has increased drastically over the last couple of years. This new warning on potentially offensive comments is likely to go further, reducing both dissenting comments and overall user engagement on the platform, because it forces users to take an extra step whenever their comments are flagged.

Extra steps like this have a measurable effect. Facebook CEO Mark Zuckerberg has revealed that when users must take an additional step to view content that's been deemed "false," only 5% of them actually follow through and view the post.

This restriction on user comments is the latest development in YouTube’s multi-year crackdown on the comments system.

Since 2019, YouTube has said that comments left by other users can result in creators being demonetized, has started automatically holding some comments for review, and has disabled comments on some channels as part of its Children's Online Privacy Protection Act (COPPA) changes.

This year, YouTube also auto-censored some comments before lifting the censorship and claiming that it was an “error.”

YouTube is one of several Big Tech companies that have started warning users about their comments before they're posted.

Instagram now shows warning notifications when users attempt to post comments that “may be considered offensive.” And Twitter is currently testing a system that prompts users to “rethink” what they’re about to say.