Government officials in Australia have moved to tighten their grip on online discussion following the Bondi Beach terror attack, urging citizens and tech platforms to suppress footage and commentary deemed distressing.

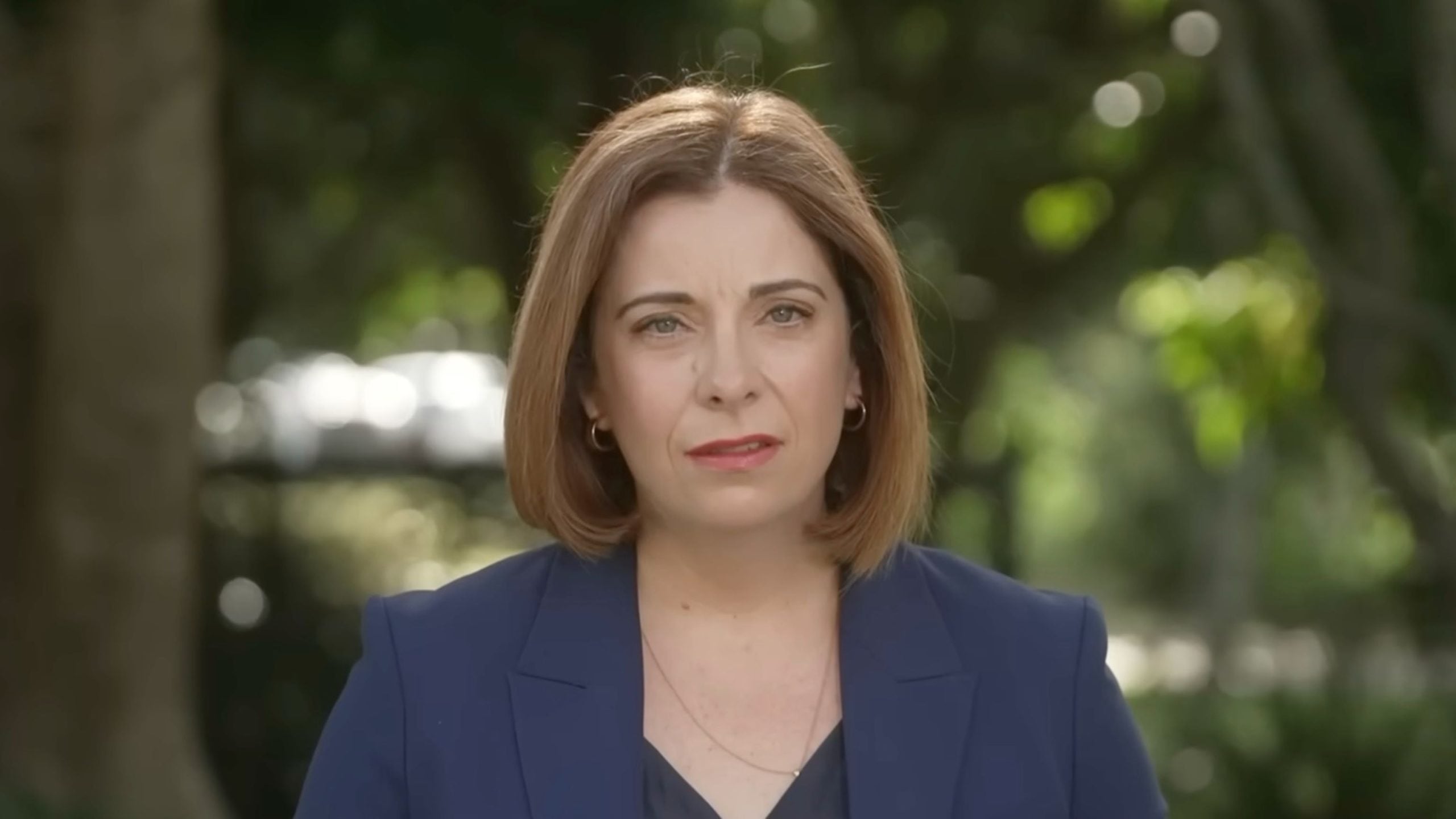

Communications Minister Anika Wells and eSafety Commissioner Julie Inman Grant both directed attention toward “violent, harmful or distressing” posts, calling for social media users to report such content and for companies to act swiftly in taking it down.

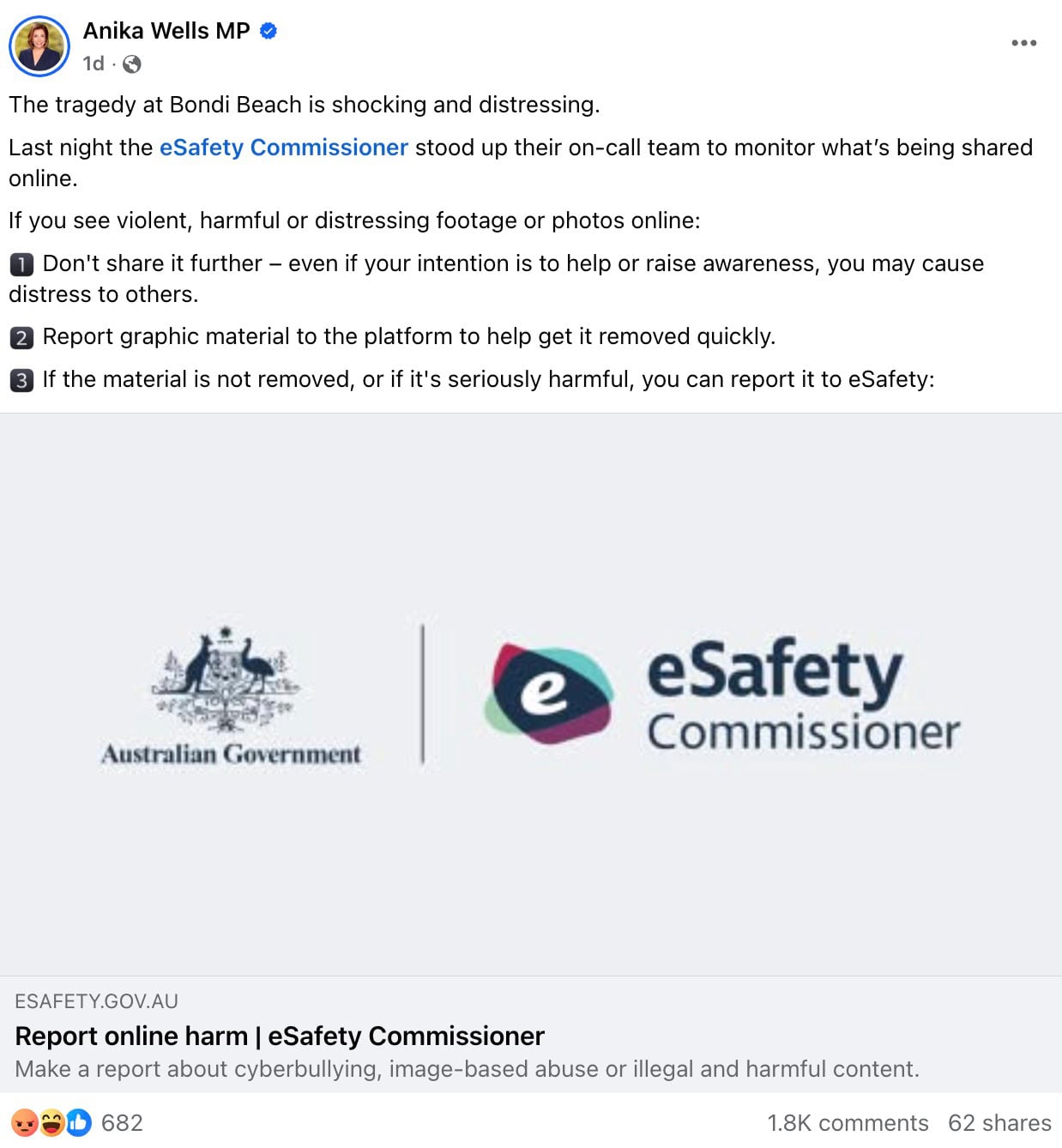

In a Facebook post, Wells announced that eSafety had “activated its on-call team to monitor what’s being shared online.”

She appealed to users to “report graphic material to the platform to help get it removed quickly” and to alert eSafety if “it is not removed, or if it’s seriously harmful.”

Shortly afterward, eSafety published its own post repeating the instruction to report content both to platforms and to the regulator itself.

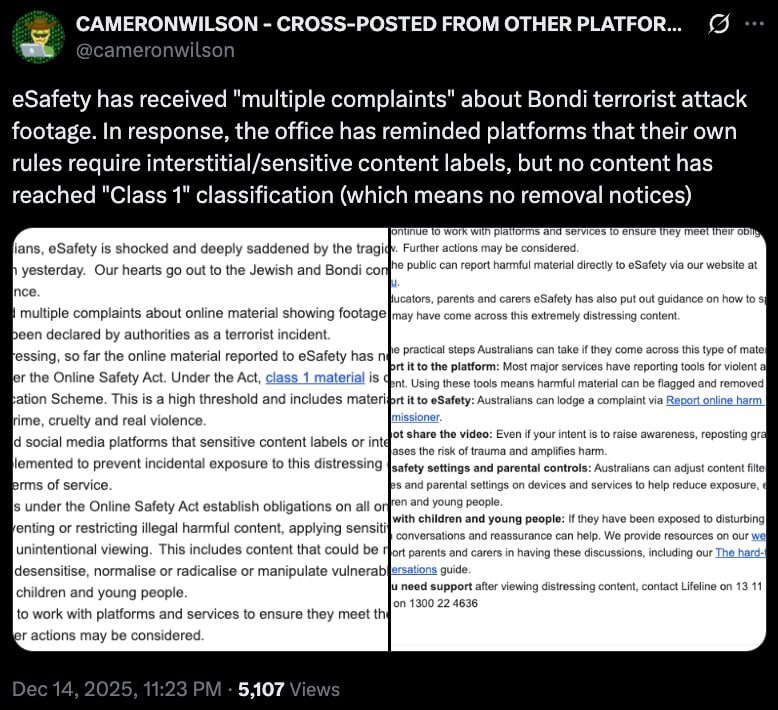

A separate statement, shared by journalist Cameron Wilson, confirmed that eSafety had “received multiple complaints about online material showing footage of the mass shooting at Bondi” but that this content “has not met the threshold for Class 1 material under the Online Safety Act.”

Under the 2021 Online Safety Act, a sweeping censorship law that grants the commissioner broad takedown powers, “Class 1” status determines whether eSafety can compel platforms to remove material entirely.

The regulator added that it would “continue to work with platforms and services to ensure they meet their obligations under Australian law,” leaving open the option that “further actions may be considered.”

Those powers, however, are under increasing legal pressure. The Free Speech Union (FSU) of Australia has formally requested that eSafety provide copies of any Section 109 notices it has issued or plans to issue, documents that authorize the removal of “Class 1” material.

The FSU has warned the regulator that “it can expect it to be challenged” if such orders are made.

eSafety’s authority has already been tested in the courts.

In 2024, a federal judge rejected its effort to maintain a global blocking order on graphic footage from a Sydney church stabbing, ruling instead that X’s choice to limit access inside Australia through geo-blocking was a reasonable approach.

Another dispute is still before the courts. In October 2025, the FSU of Australia filed a case questioning the legality of eSafety’s directives to remove or geo-block footage of the killing of Iryna Zarutska. The group argues that these orders cut Australians off from viewing material of genuine news value.

The FSU of Australia has formally written to the eSafety Commissioner’s Office demanding transparency over future and past censorship directives issued under Section 109 of the Online Safety Act 2021.

In the letter dated 15 December 2025, addressed through the Australian Government Solicitor, FSU stated that it was acting “to ensure that the horrors of yesterday’s terrorist attack in Bondi Beach against the Jewish community are not hidden or censored.”

We obtained a copy of the letter.

The group referred to eSafety’s recent acknowledgment of an earlier wrongful censorship order, noting that “on Friday 12 December 2025, your client indicated they accepted the recent decision of the Classification Review Board in eSafety INV-202505242 concerning the murder of Iryna Zartuska which your client now accepts that they wrongly issued a Section 109 notice in respect of.”

That decision, according to the FSU’s letter, included the Board’s finding that “the film is a factual record of a significant event that is not presented in a gratuitous, exploitative or offensive manner to the extent that it should be classified RC.”

The FSU also accused eSafety of procedural misconduct, writing, “We note that your client misled ourselves and the Tribunal about the existence of those parallel proceedings.”

The Union is now seeking a formal undertaking that eSafety will “promptly provide to the Free Speech Union of Australia copies of any further Section 109 (Online Safety Act 2021(Cth)) notices she issues in the future, including a copy of the censored content.”

It further insisted that “these notices should identify the decision maker in question” and that “Ms Inman Grant will take personal responsibility for any such notices issued, rather than using any delegate.”

Following the Bondi Beach terror attack, the Australian government has turned sharply toward expanding digital surveillance and online content control under the banner of combating antisemitism.

Jillian Segal, Australia’s Special Envoy to Combat Antisemitism, has urged an immediate acceleration of her July recommendations to the government, which include extensive measures targeting speech, algorithms, and online anonymity.

She told Guardian Australia that “calling it out is not enough” and insisted that “we need a whole series of actions that involve the public sector and government ministers, in education in schools, universities, on social media and among community leaders… It has got to be a whole society approach.”

Prime Minister Anthony Albanese publicly embraced Segal’s appeal, promising to dedicate “every single resource required” to eliminate antisemitism.

His office confirmed that several of Segal’s proposals are already being implemented or are under review, including those directed at digital communication, social media platforms, and emerging technologies such as AI.

The proposals, set out in Segal’s Plan to Combat Antisemitism, seek to harden legal and technical mechanisms to restrict online speech.

Among them are recommendations to strengthen hate crime legislation to address “antisemitic and other hateful or intimidating conduct, including with respect to serious vilification offences and the public promotion of hatred and antisemitic sentiment,” and to establish a national database of “antisemitic hate crimes and incidents.”

The plan also calls for the “broad adoption of the International Holocaust Remembrance Alliance’s working definition of antisemitism… across all levels of government and public institutions.”

This particular definition has drawn criticism internationally for its potential use in classifying political commentary about Israel as antisemitic.

A large portion of Segal’s framework deals specifically with digital regulation.

It proposes “regulatory parameters for algorithms” to “prevent the amplification of online hate,” calls on social media platforms to “reduce the reach of those who peddle hate behind a veil of anonymity,” and recommends “considering new online censorship and age verification laws” modeled on the UK’s Online Safety Act and the EU’s Digital Services Act.

Segal’s plan further insists on “ensuring that AI does not amplify antisemitic content,” effectively linking artificial intelligence moderation systems to government oversight of acceptable expression online.

Another element, described as a national security measure, seeks to “screen visa applicants for antisemitic views or affiliations” and deny entry to individuals for “antisemitic conduct and rhetoric.”

These measures amount to a major proposal for central oversight of both digital platforms and individual speech. While the government frames the package as a response to violent extremism, it would substantially expand censorship authority across online environments, media, and AI systems.