During a US Senate Select Committee on Intelligence hearing titled “Foreign Threats to Elections in 2024 – Roles and Responsibilities of U.S. Tech Providers,” several senators urged Big Tech executives to increase their censorship of foreign “disinformation” and share more information with the federal government.

While the senators pushing these measures insisted that such efforts would be focused on foreign adversaries who are creating inauthentic content, previous incidents, most notably the censorship of the infamous Hunter Biden laptop story, have shown that measures claiming to combat foreign disinformation often result in the speech of Americans being censored.

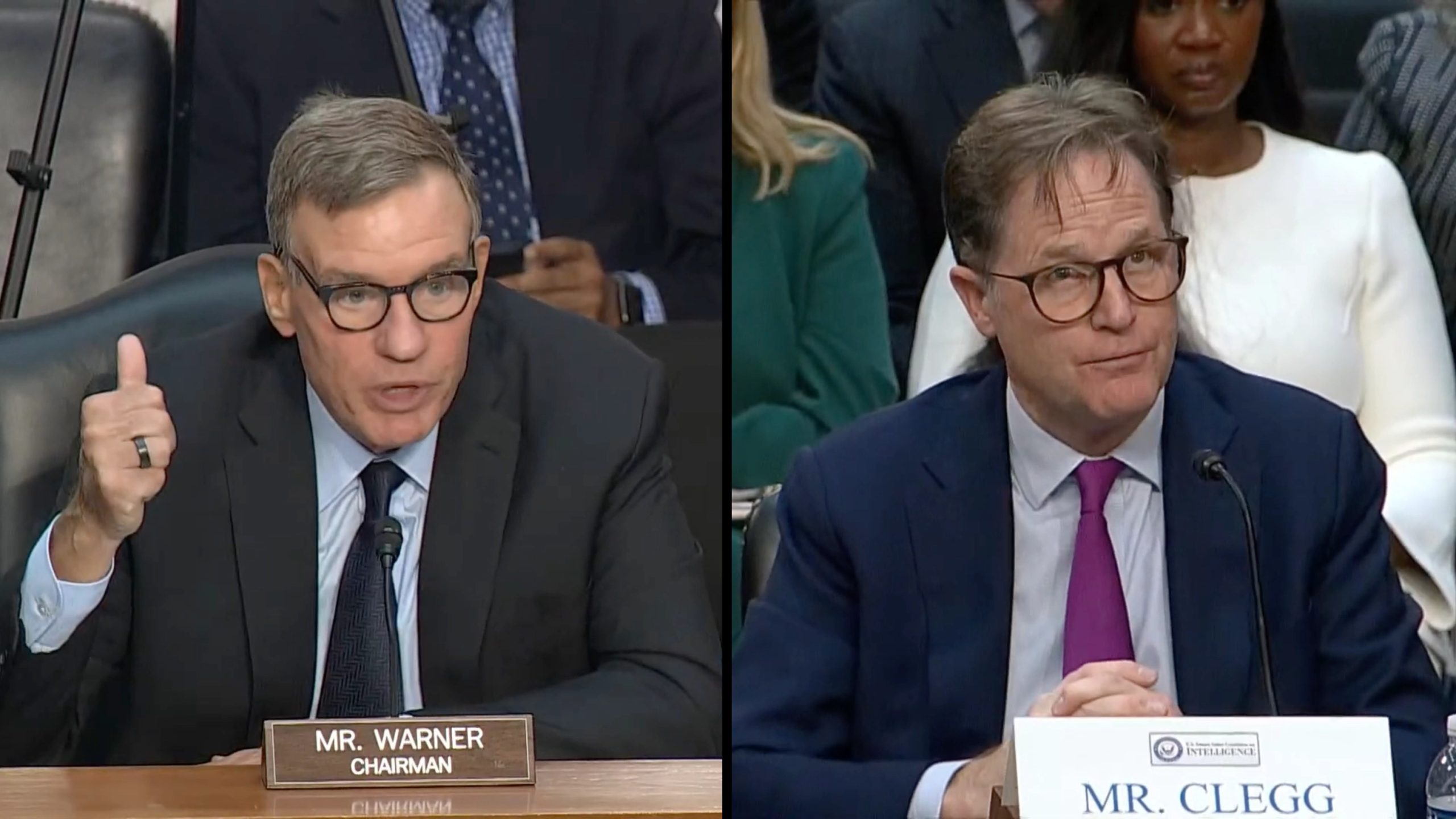

In his opening statement, Senator Mark Warner (D-VA) told the witnesses, Meta President of Global Affairs Nick Clegg, Microsoft Vice Chair and President Brad Smith, and Alphabet (Google’s parent company) President and Chief Legal Officer Kent Walker, that he hoped the hearing would result in greater information sharing between Big Tech and government.

“Our committee’s bipartisan efforts also…resulted in a set of recommendations for government, for the private sector, and for political…campaigns,” Warner said. “Recommendations for which I hope today’s hearing will serve as a status check. These recommendations included greater information sharing between the US government and the private sector…about foreign malicious activity. Not domestic, foreign malicious activity.”

He continued by claiming that there’s already been “a pretty successful effort to share threat information about foreign influence activity with the private sector” and complained that X (which has reduced censorship on its platform since entrepreneur Elon Musk took over) didn’t send a representative to the hearing.

Warner also praised the companies in attendance for signing the “Tech Accord to Combat Deceptive Use of AI in 2024 Elections” in February. This accord was signed by most of the leading US tech companies and the signatories have vowed to deploy technology that counters “harmful AI-generated content meant to deceive voters.”

While Warner lauded Big Tech-government collaboration, he wasn’t happy that Americans are turning to the internet to escape the censorship clutches of this ever-expanding fusion of corporations and state.

“Unfortunately, we now have a case where too many Americans frankly don’t trust key US institutions, from federal agencies to local law enforcement, to traditional media,” Warner said. “There is increased reliance on…the internet. I think most of us would try to tell our kids just because you saw it on the internet doesn’t mean it’s true.”

He also lamented that Republicans are pushing back against entities that have been part of government-linked censorship coalitions, such as the Stanford Internet Observatory, and that Big Tech companies “have dramatically cut back on their own efforts to prohibit false information” from “foreign sources.”

As the hearing progressed, participants repeatedly returned to the talking point that increased censorship is needed in the 48 hours before and after the polls close in the 2024 US presidential election.

Microsoft’s Brad Smith said “the 48 hours before the election” and “the 48 hours after the polls close, particularly if we have as close to an election as we anticipate, could be equally if not more significant in terms of spreading false information, disinformation, and literally undermining the tenets of our democracy.”

Warner latched on to this talking point and asked the tech executives to provide him with information on “what kind of surge capacity” their companies have to moderate content as the election approaches.

Senator Martin Heinrich (D-NM) pressed the witnesses on how they’re using artificial intelligence (AI) to “proactively” target “fake news outlets” and remove content that’s deemed to be “harassment.”

Smith replied that Microsoft is increasingly using AI to “detect these kinds of problems,” while Walker said that Google has policies to take down material that’s deemed to be “incitements to violence, direct threats, bullying, cyberattacks.”

While Heinrich’s question about the proactive use of AI was related to fake sites that were using the logos of legitimate sites, the increased use of AI to proactively target content that’s deemed to be “fake” could still create a slippery slope and lead to AI proactively censoring legitimate but disfavored content, without human oversight.

Senator Mark Kelly (D-AZ) told the witnesses that he hopes “that we can also count on the partnership of the American tech industry” to “aggressively counter” foreign disinformation campaigns.

He also pushed the witnesses to implement a solution that ensures users of Big Tech platforms can’t navigate to “fake” content.

Although Kelly’s questions were focused on fake sites that were impersonating the North Atlantic Treaty Organization (NATO), this focus on memory-holing content that’s deemed to be fake by governments or tech companies opens the door for a new mechanism that these powerful institutions can use to censor other types of content they don’t want their users to see.

This potential for abuse was made apparent when Google’s Kent Walker told Kelly that it censors “cheap fakes,” a term that has been used by the Biden-Harris administration to downplay critical videos.

Senator Michael Bennet (D-CO) warned the tech executives that they “better be making the right decisions…to safeguard America’s democracy” against propaganda and pressed them on how much they’re spending on content moderation.

While many senators pushed for increased Big Tech censorship and data sharing with the federal government, some did push back and warn that previous plans to censor so-called foreign disinformation had resulted in the censorship of Americans.

Senator Marco Rubio (R-FL) noted that the topic is complicated and that Russian bots will sometimes amplify political views expressed by Americans, such as opposition to America’s involvement in the Russia-Ukraine war. He also pointed to the censorship of the Hunter Biden laptop story after 51 former intelligence officials signed a letter implying that it was “Russian disinformation,” and to the censorship that occurred during Covid.

Senator Tom Cotton (R-AR) also acknowledged that Russia, China, Iran, and North Korea are likely engaged in influence operations but said that “memes and YouTube videos” aren’t a major threat and argued that the censorship of the Hunter Biden laptop story is a much more “egregious” example of election interference. He also grilled the witnesses on more recent censorship that has occurred in relation to the first Trump assassination attempt and a recent California AI censorship law.
